Combining pretrained CNN feature extractors to enhance clustering of complex natural images


Jan 07, 2021

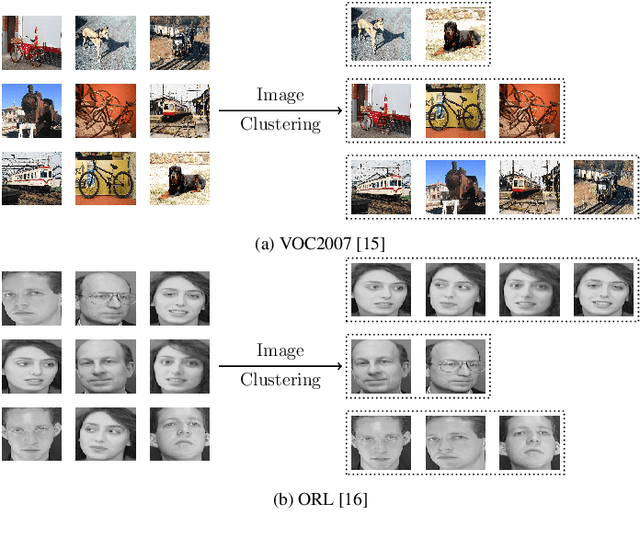

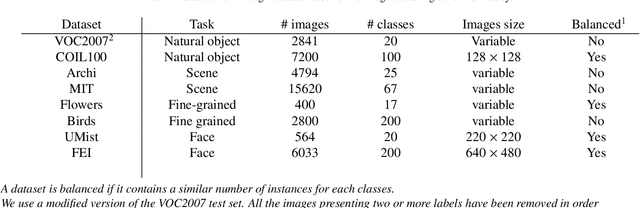

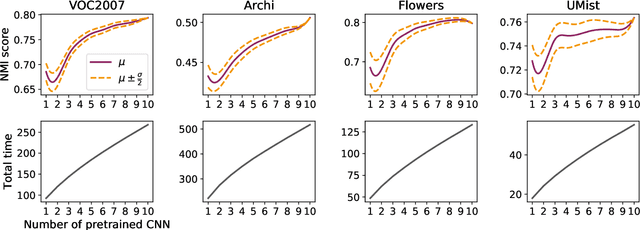

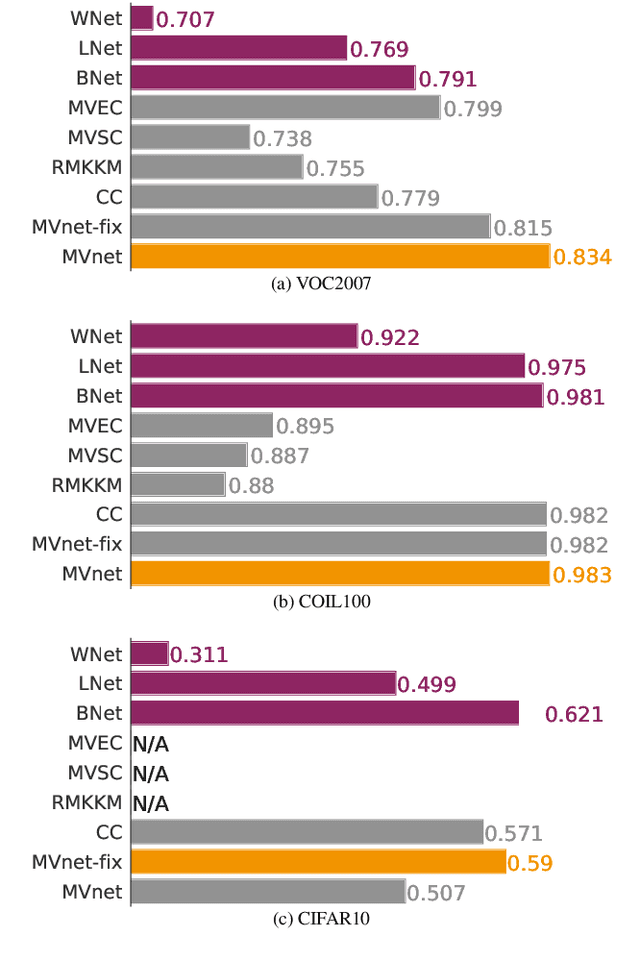

Recently, a common starting point for solving complex unsupervised image classification tasks has been to use generic features extracted with deep Convolutional Neural Networks (CNNs) pretrained on a large and versatile dataset (ImageNet). However, in most research, the CNN architecture used for feature extraction is chosen arbitrarily, without justification. This paper aims to provide insight into the use of pretrained CNN features for image clustering (IC). First, extensive experiments are conducted and show that, for a given dataset, the choice of CNN architecture for feature extraction has a large impact on the final clustering. These experiments also demonstrate that selecting the proper extractor for a given IC task is difficult. To solve this issue, we propose to rephrase the IC problem as a multi-view clustering (MVC) problem that considers features extracted from different architectures as different "views" of the same data. This approach is based on the assumption that the information contained in the different CNNs may be complementary, even when they are pretrained on the same data. We then propose a multi-input neural network architecture that is trained end-to-end to solve the MVC problem effectively. This approach is tested on nine natural image datasets and produces state-of-the-art results for IC.
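To make the "views" idea concrete, here is a minimal sketch in plain Python. It is not the multi-input network proposed in the paper: it swaps in a much simpler baseline (standardize each view, concatenate, run k-means) purely to illustrate how features from several extractors can be treated as views of the same samples. The feature values are made-up toy numbers standing in for CNN outputs, and the names `standardize`, `fuse_views`, and `kmeans` are hypothetical helpers introduced for this illustration.

```python
import math


def standardize(view):
    """Z-score each feature column of one view, then divide by sqrt(dim)
    so that views of different widths contribute comparably."""
    n, d = len(view), len(view[0])
    means = [sum(row[j] for row in view) / n for j in range(d)]
    stds = [
        math.sqrt(sum((row[j] - means[j]) ** 2 for row in view) / n) or 1.0
        for j in range(d)
    ]
    scale = math.sqrt(d)
    return [[(row[j] - means[j]) / (stds[j] * scale) for j in range(d)]
            for row in view]


def fuse_views(views):
    """Naive early fusion: concatenate the scaled features of every view
    for each sample. Assumes all views list the samples in the same order."""
    scaled = [standardize(v) for v in views]
    return [sum((v[i] for v in scaled), []) for i in range(len(views[0]))]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, n_iter=20):
    """Plain k-means with deterministic farthest-point initialisation."""
    centers = [list(points[0])]
    while len(centers) < k:
        farthest = max(points, key=lambda p: min(sq_dist(p, c) for c in centers))
        centers.append(list(farthest))
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: nearest center for every point.
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c]))
                  for p in points]
        # Update step: each center becomes the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Toy stand-ins for features of the same six images from two extractors
# (assumption: in practice these would come from two pretrained CNNs).
view_a = [[0.1, 0.0], [0.0, 0.2], [0.2, 0.1],
          [5.0, 5.1], [5.2, 4.9], [4.9, 5.0]]
view_b = [[1.0, 0.0, 0.1], [0.9, 0.1, 0.0], [1.1, 0.0, 0.2],
          [0.0, 1.0, 3.0], [0.1, 0.9, 3.1], [0.0, 1.1, 2.9]]

fused = fuse_views([view_a, view_b])
labels = kmeans(fused, k=2)
print(labels)
```

Concatenation followed by k-means treats every view as equally reliable, which is the kind of limitation an end-to-end multi-view model is meant to address; the sketch is only a reference point for the problem setup.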
