Color2Style: Real-Time Exemplar-Based Image Colorization with Self-Reference Learning and Deep Feature Modulation


Jun 16, 2021

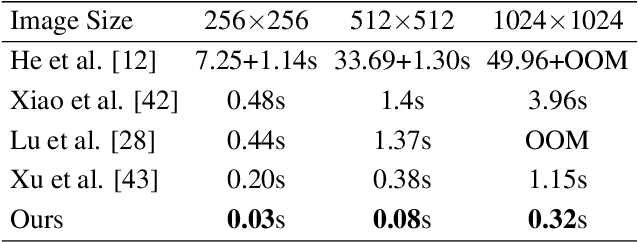

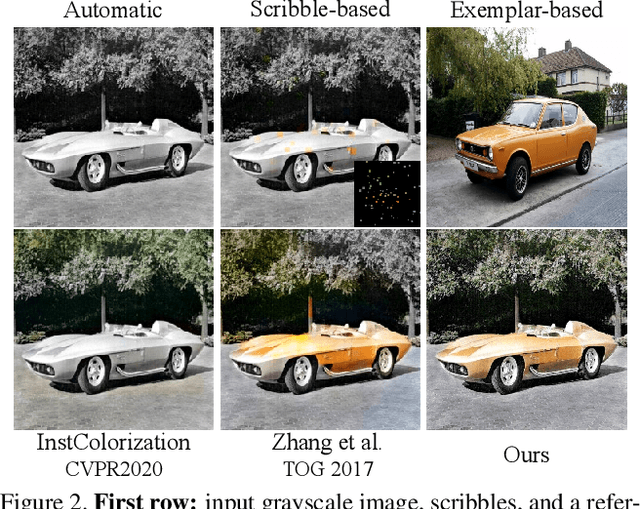

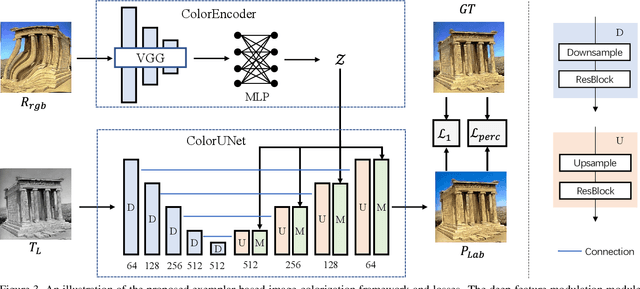

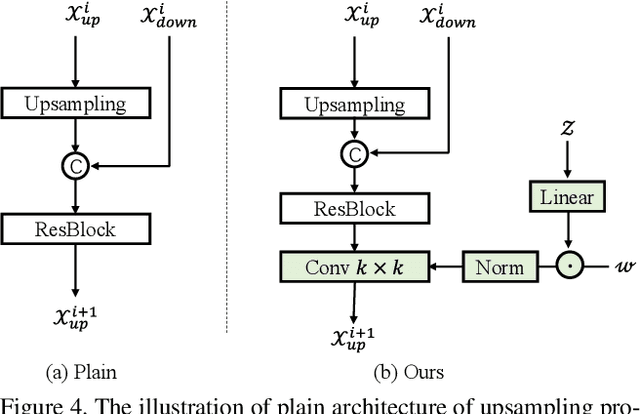

Legacy black-and-white photos carry people's nostalgia and cherished memories of the past. To help relive these frozen moments, we present Color2Style, a deep exemplar-based image colorization approach that revives grayscale images by filling them with vibrant colors. Exemplar-based colorization is usually trained in an unsupervised, unpaired manner because pairs of input and ground-truth images are difficult to obtain. Training such a model typically involves two steps: i) retrieving a large number of highly similar reference images in advance, which is time-consuming and tedious; and ii) designing complicated modules that transfer the colors of the reference image to the grayscale image by computing and exploiting the deep semantic correspondence between them (e.g., with non-local operations). In contrast to previous methods, we simplify these two steps into a single end-to-end learning procedure. First, we adopt a self-augmented self-reference training scheme in which the reference image is generated from the original color image by graphical transformations, so that training can be formulated in a paired manner. Second, instead of computing complex and hard-to-interpret correspondence maps, our method uses a simple yet effective deep feature modulation (DFM) module, which injects color embeddings extracted from the reference image into the deep representations of the input grayscale image. This design is much more lightweight and interpretable, and it achieves appealing performance at real-time processing speed. Moreover, unlike existing methods, our model does not require a multitude of loss functions and regularization terms, only two widely used loss functions. Code and models will be available at https://github.com/zhaohengyuan1/Color2Style.
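
The two ideas in the abstract can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the abstract does not say which graphical transformations are used or how DFM combines embeddings with features, so the flip-plus-brightness augmentation, the per-channel scale-and-shift form of the modulation, and all function names below (`to_gray`, `make_self_reference`, `make_training_triplet`, `modulate`) are assumptions made for illustration. A real model would use a tensor library and learn the modulation parameters from the reference image.

```python
import random

def to_gray(img):
    """Luminance of an RGB image given as rows of (r, g, b) tuples in [0, 1].

    Uses the common ITU-R BT.601 weights; the paper may use another color space.
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]

def make_self_reference(img, rng):
    """Build a reference image from the color image itself.

    Assumed augmentation (hypothetical): random horizontal flip followed by a
    global brightness scaling, clipped to [0, 1].
    """
    rows = [row[::-1] for row in img] if rng.random() < 0.5 else [list(row) for row in img]
    scale = rng.uniform(0.8, 1.2)
    return [[tuple(min(1.0, c * scale) for c in px) for px in row] for row in rows]

def make_training_triplet(img, rng):
    """Return (grayscale input, self-generated reference, color target).

    Because the reference is derived from the target, training becomes paired.
    """
    return to_gray(img), make_self_reference(img, rng), img

def modulate(features, gamma, beta):
    """Assumed form of feature modulation: per-channel scale and shift.

    features: list of C channels, each an H x W list of floats.
    gamma, beta: length-C lists standing in for values predicted from the
    reference image's color embedding.
    """
    return [
        [[g * v + b for v in row] for row in channel]
        for channel, g, b in zip(features, gamma, beta)
    ]
```

For example, `make_training_triplet(img, random.Random(0))` yields a grayscale input and a transformed reference for a single color image `img`, so no reference retrieval step is needed, and `modulate(features, gamma, beta)` shows how reference-derived parameters could be injected without computing a correspondence map.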
