Collaborative Recognition of Feasible Region with Aerial and Ground Robots through DPCN

Mar 01, 2021

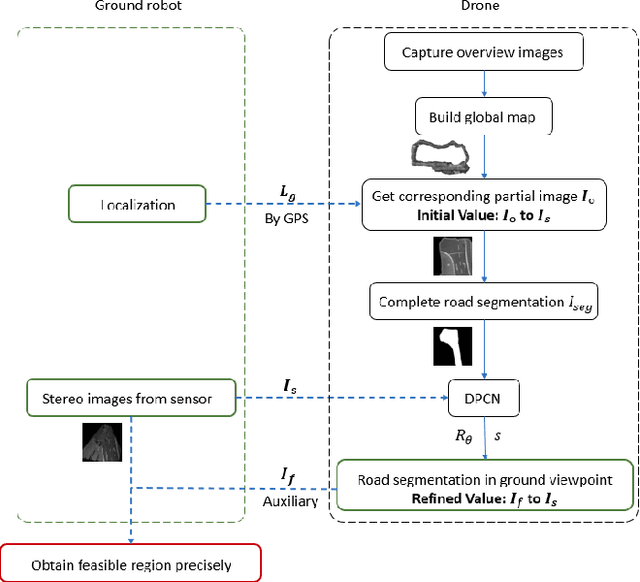

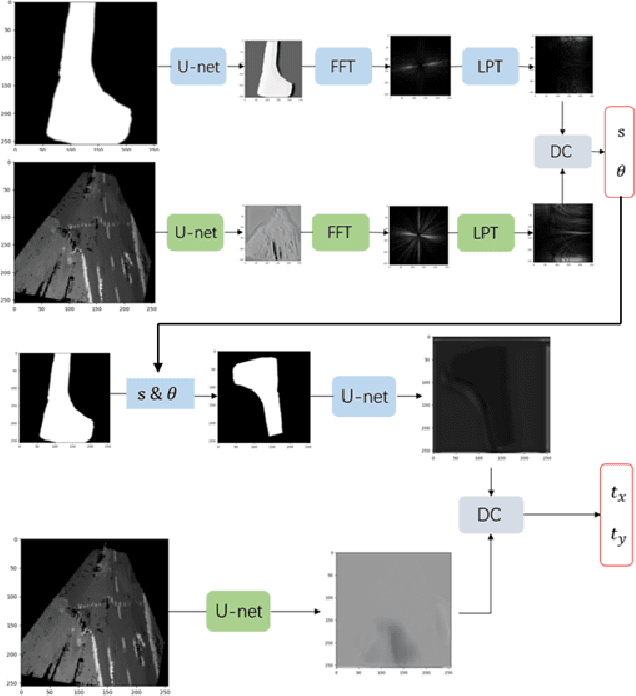

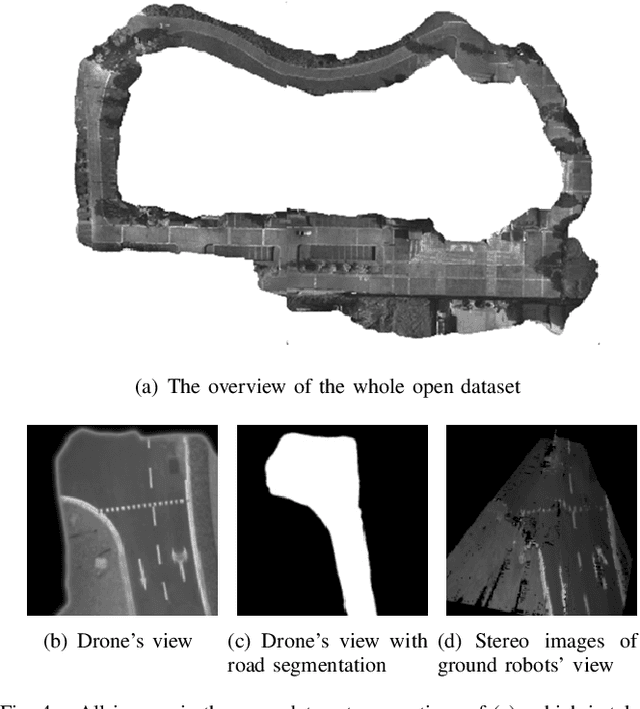

Ground robots frequently collide with obstacles because they can sense danger and react only once they are close to an obstacle, which is usually too late to avoid a crash and can cause severe damage to the robot. To address this issue, we present a collaborative approach in which aerial and ground robots jointly recognize the feasible region. An aerial robot can observe the route the ground robot is on from widely varying viewpoints, so the collaboration provides the ground robot with global road segmentation information, enabling it to identify the feasible region and adjust its pose ahead of time. Under normal circumstances, the transformation between the two robots can be obtained from GPS, but with considerable error, which directly degrades recognition of the feasible region. We therefore use the deep phase correlation network (DPCN), a state-of-the-art method for matching heterogeneous sensor measurements that performs well on heterogeneous mapping, to refine the transformation. The network is lightweight and promises better generalization. We use the Aero-Ground dataset, which consists of heterogeneous sensor images and aerial road segmentation images. The results show that our collaborative system achieves high accuracy, speed, and stability.
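
For readers unfamiliar with the idea behind the network's name, the following is a minimal sketch of classical (non-learned) phase correlation, which recovers a translation between two images from the phase of their cross-power spectrum. It is not the DPCN itself and not the authors' code: the function name `phase_correlation_shift` is hypothetical, the sketch assumes two same-sized single-channel images related by a pure circular shift, and it ignores rotation, scale, and the GPS prior mentioned above.

```python
import numpy as np

def phase_correlation_shift(reference, moved):
    """Estimate the integer (dy, dx) circular shift that maps `reference` onto `moved`.

    Illustrative only: assumes same-sized 2-D arrays and a pure translation.
    """
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)

    # Normalised cross-power spectrum: keep the phase, discard the magnitude.
    cross_power = f_mov * np.conj(f_ref)
    cross_power /= np.abs(cross_power) + 1e-12  # small epsilon avoids division by zero

    # The inverse transform peaks at the displacement between the two images.
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)

    # Peaks beyond the midpoint correspond to negative shifts (FFT wrap-around).
    shift = [p if p <= size // 2 else p - size
             for p, size in zip(peak, correlation.shape)]
    return tuple(int(v) for v in shift)

# Usage: shift a random image by a known offset and recover it.
rng = np.random.default_rng(0)
image = rng.random((64, 64))
shifted = np.roll(image, shift=(5, -7), axis=(0, 1))
estimated = phase_correlation_shift(image, shifted)
print(estimated)
```

In a setting like the one described, an estimate of this kind would act as a correction on top of a coarse initial alignment such as one derived from GPS; matching images from heterogeneous sensors generally needs more than this plain version, which is the gap the abstract says DPCN addresses.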
