Co-design of Embodied Neural Intelligence via Constrained Evolution


May 21, 2022

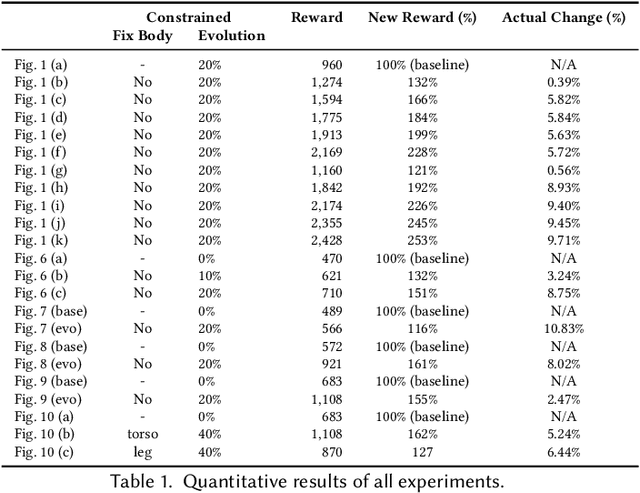

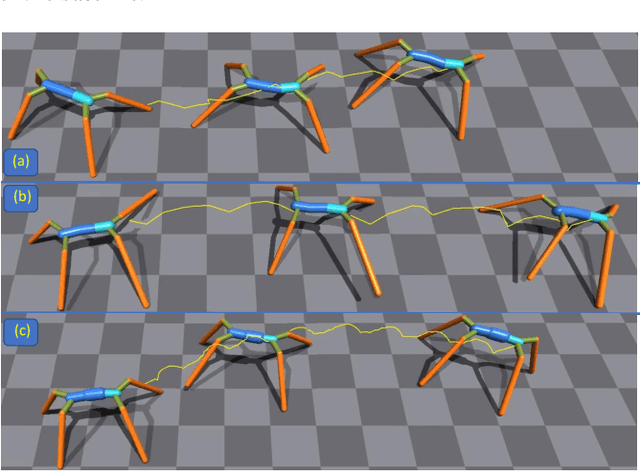

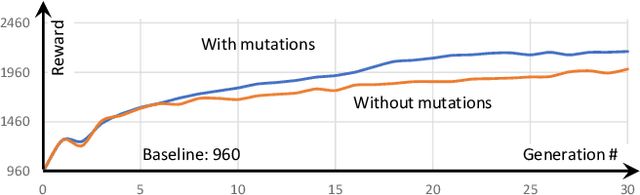

We introduce a novel method for co-designing the shape attributes and locomotion of autonomous moving agents by combining deep reinforcement learning and evolution with user control. Our main inspiration comes from evolution, which has led to wide variability and adaptation in Nature and has the potential to significantly improve design and behavior simultaneously. Our method takes an input agent with optional simple constraints, such as leg parts that should not evolve or allowed ranges of change. It uses physics-based simulation to determine the agent's locomotion and finds a behavior policy for the input design, which later serves as a baseline for comparison. The agent is then randomly modified within the allowed ranges, creating a new generation of several hundred agents. This generation is trained by transferring the previous policy, which significantly speeds up training. The best-performing agents are selected, and a new generation is formed from them through crossover and mutation. Subsequent generations are trained in the same way until satisfactory results are reached. We show a wide variety of evolved agents, and our results show that even when changes are limited to 10%, the overall performance of the evolved agents improves by 50%. If more significant changes to the initial design are allowed, the performance in our experiments improves even more, to 150%. Contrary to related work, our co-design runs on a single GPU and provides satisfactory results by training thousands of agents within one hour.
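The generational loop described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the attribute names (`leg_length`, `torso_width`, `foot_size`), the Gaussian mutation step, and the elite size are all hypothetical, and `toy_fitness` is a stand-in for the expensive step of training a policy in physics simulation (with policy transfer from the previous generation) and measuring locomotion performance. The sketch only shows how frozen parts and allowed ranges of change might constrain mutation and crossover.

```python
import random

def mutate(design, base, frozen, max_change, rng):
    """Perturb non-frozen attributes, clamped to +/- max_change of the base value."""
    child = {}
    for name, value in design.items():
        if name in frozen:
            child[name] = base[name]  # constrained parts never evolve
            continue
        lo = base[name] * (1.0 - max_change)
        hi = base[name] * (1.0 + max_change)
        step = rng.gauss(0.0, 0.05 * base[name])  # assumed mutation scale
        child[name] = min(hi, max(lo, value + step))
    return child

def crossover(a, b, rng):
    """Uniform crossover: each attribute is taken from one of the two parents."""
    return {name: (a[name] if rng.random() < 0.5 else b[name]) for name in a}

def toy_fitness(design):
    """Hypothetical placeholder for 'train a policy in simulation and score it'."""
    return 2.0 * design["leg_length"] - (design["torso_width"] - 0.8) ** 2

def evolve(base, frozen, max_change, generations=5, population=50, elite=10, seed=0):
    rng = random.Random(seed)
    # First generation: random modifications of the input agent within allowed ranges.
    pop = [mutate(base, base, frozen, max_change, rng) for _ in range(population)]
    for _ in range(generations):
        # Select the best-performing agents as parents.
        parents = sorted(pop, key=toy_fitness, reverse=True)[:elite]
        # Form the next generation from crossover and mutation of the parents.
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng),
                   base, frozen, max_change, rng)
            for _ in range(population - elite)
        ]
        pop = parents + children
    # The input design stays in the comparison as the baseline.
    return max(pop + [base], key=toy_fitness)

base_design = {"leg_length": 1.0, "torso_width": 1.0, "foot_size": 0.2}
best_design = evolve(base_design, frozen={"foot_size"}, max_change=0.1)
```

Here `max_change=0.1` mirrors the 10% setting mentioned in the abstract, and `frozen` plays the role of the parts that should not evolve. In the actual method, the fitness evaluation would be replaced by reinforcement-learning training of each agent, initialized from the previous generation's policy.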
