CNT: A new Algorithm for Leveraging Top-Down Feedback

Oct 18, 2022

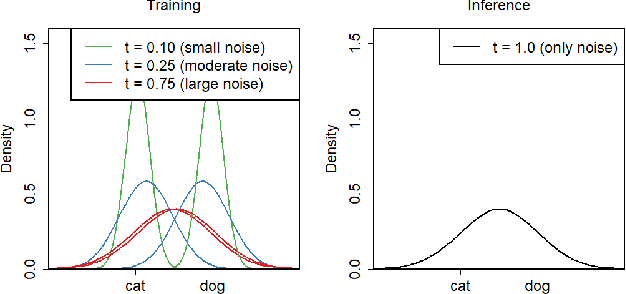

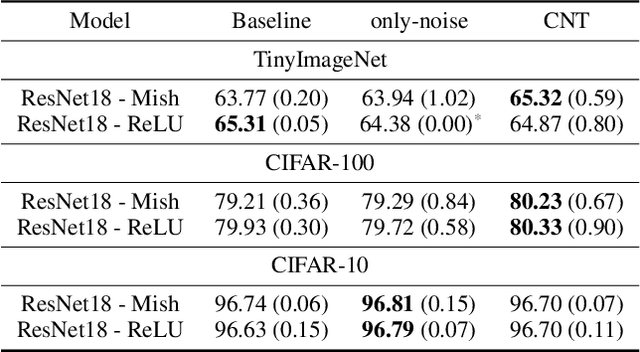

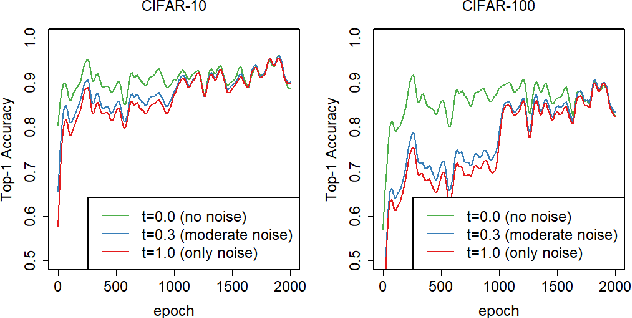

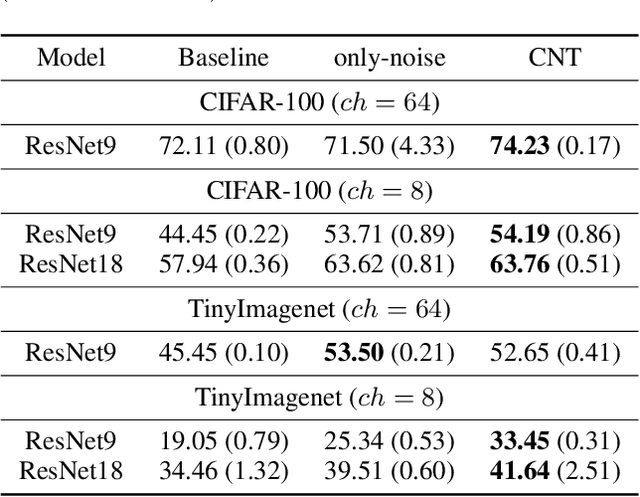

We propose a novel regularizer for supervised learning called Conditioning on Noisy Targets (CNT). This approach consists in conditioning the model on a noisy version of the target(s) (e.g., actions in imitation learning or labels in classification) at a random noise level (from small to large noise). At inference time, since we do not know the target, we run the network with only noise in place of the noisy target. CNT provides hints through the noisy label (with less noise, we can more easily infer the true target). This gives two main benefits: 1) the top-down feedback allows the model to focus on simpler and more digestible sub-problems and 2) rather than learning to solve the task from scratch, the model will first learn to master easy examples (with less noise), while slowly progressing toward harder examples (with more noise).
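
The conditioning step described in the abstract can be illustrated with a minimal NumPy sketch. The abstract does not specify the noise distribution, how the noise level is sampled, or how the noisy target is fed to the network, so everything below is an assumption made for illustration: Gaussian noise, a linear blend between target and noise, a uniformly sampled noise level per example, and conditioning by concatenating the noisy target to the input features. The function names (`noisy_target`, `cnt_training_inputs`, `cnt_inference_inputs`) are hypothetical and are not taken from the authors' code.

```python
import numpy as np

def noisy_target(target, noise_level, rng):
    """Blend a target with Gaussian noise (assumed scheme, not from the paper).

    noise_level = 0 returns the clean target; noise_level = 1 returns pure noise.
    """
    noise = rng.standard_normal(target.shape)
    # Reshape per-example levels so they broadcast over the target dimensions.
    level = np.reshape(noise_level, (-1,) + (1,) * (target.ndim - 1))
    return (1.0 - level) * target + level * noise

def cnt_training_inputs(x, target, rng):
    """Build training inputs: 2-D features x plus a noisy copy of the 2-D target.

    Each example gets its own noise level, drawn uniformly from [0, 1) (assumption).
    """
    levels = rng.uniform(0.0, 1.0, size=x.shape[0])
    cond = noisy_target(target, levels, rng)
    # Conditioning by concatenation is an assumption of this sketch.
    return np.concatenate([x, cond], axis=1), levels

def cnt_inference_inputs(x, target_dim, rng):
    """Build inference inputs: the target is unknown, so only noise is supplied."""
    cond = rng.standard_normal((x.shape[0], target_dim))
    return np.concatenate([x, cond], axis=1)

rng = np.random.default_rng(0)
x = np.zeros((4, 3))                    # 4 examples, 3 features
y = np.eye(2)[np.array([0, 1, 1, 0])]   # one-hot labels, shape (4, 2)

train_in, levels = cnt_training_inputs(x, y, rng)
test_in = cnt_inference_inputs(x, target_dim=2, rng=rng)
```

In this sketch the training and inference inputs have the same shape, so a single network can consume both; at inference the conditioning slot simply corresponds to the highest noise level.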
