Chinese Grammatical Correction Using BERT-based Pre-trained Model

Paper and Code

Nov 04, 2020

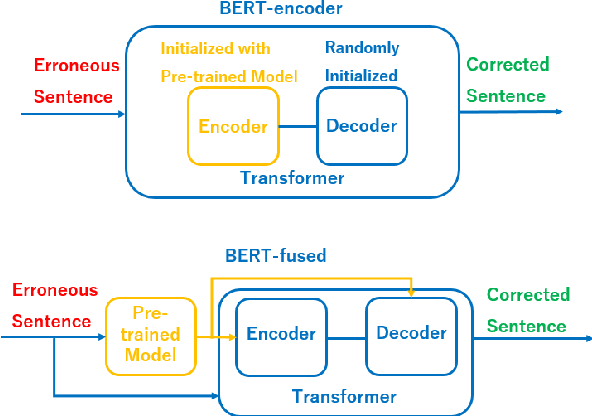

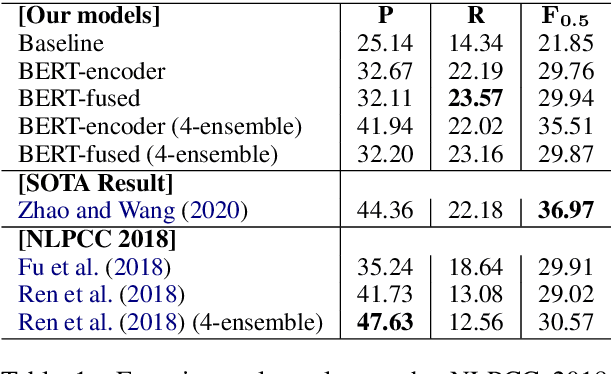

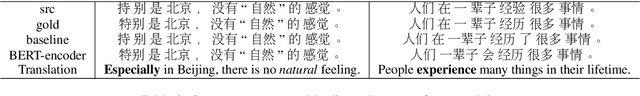

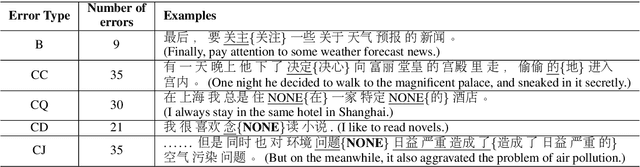

In recent years, pre-trained models have been extensively studied, and several downstream tasks have benefited from their utilization. In this study, we verify the effectiveness of two methods that incorporate a BERT-based pre-trained model developed by Cui et al. (2020) into an encoder-decoder model on Chinese grammatical error correction tasks. We also analyze the error type and conclude that sentence-level errors are yet to be addressed.

* 6 pages; AACL-IJCNLP 2020

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge