Change-point Detection Methods for Body-Worn Video


Oct 20, 2016

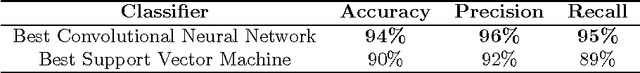

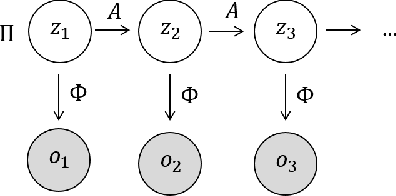

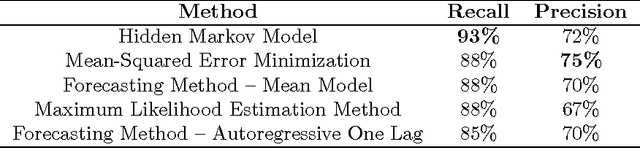

Body-worn video (BWV) cameras are increasingly used by police departments to provide a record of police-public interactions. However, large-scale BWV deployment produces terabytes of data per week, which calls for effective computational methods to identify salient changes in video. In work carried out at the 2016 RIPS program at IPAM, UCLA, we present a novel two-stage framework for video change-point detection. First, we employ state-of-the-art machine learning methods, including convolutional neural networks and support vector machines, for scene classification. We then develop and compare change-point detection algorithms based on mean squared-error minimization, forecasting methods, hidden Markov models, and maximum likelihood estimation to identify noteworthy changes. We test our framework on the detection of vehicle exits and entrances in a BWV data set provided by the Los Angeles Police Department and achieve over 90% recall and nearly 70% precision, demonstrating robustness to rapid scene changes, extreme luminance differences, and frequent camera occlusions.
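To make the second stage more concrete, the following is a minimal sketch of single change-point detection by mean squared-error minimization. It is an illustration only, not the authors' implementation: it assumes the first stage yields one scalar score per frame (for example, a hypothetical "inside vehicle" probability from the scene classifier), and the function name `find_change_point` and the sample scores are invented for this example. The idea is to pick the split index at which a piecewise-constant fit, using one mean before the split and one mean after it, has the smallest total squared error.

```python
def find_change_point(scores):
    """Return the split index k (1 <= k < n) that minimizes the summed
    squared error of fitting one mean to scores[:k] and another to scores[k:].

    `scores` is an assumed per-frame classifier output; nothing here is
    taken from the paper's code.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two frames to place a change point")

    # Prefix sums of x and x^2 let us get any segment's squared error in O(1):
    # SSE(segment) = sum(x^2) - (sum(x))^2 / length
    prefix_sum = [0.0]
    prefix_sq = [0.0]
    for x in scores:
        prefix_sum.append(prefix_sum[-1] + x)
        prefix_sq.append(prefix_sq[-1] + x * x)

    def segment_sse(start, end):
        length = end - start
        total = prefix_sum[end] - prefix_sum[start]
        total_sq = prefix_sq[end] - prefix_sq[start]
        return total_sq - total * total / length

    best_k, best_cost = 1, float("inf")
    for k in range(1, n):
        cost = segment_sse(0, k) + segment_sse(k, n)
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k


# Hypothetical scores: high while inside the vehicle, low after exiting.
frame_scores = [0.9, 0.8, 0.95, 0.85, 0.1, 0.2, 0.15, 0.05]
change_index = find_change_point(frame_scores)
```

On the sample scores above, the lowest-error split falls at index 4, the first frame of the low-score segment. A video with several entrances and exits would need a multi-change-point extension (for instance, applying the split recursively to each segment), which this sketch does not attempt.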
