CAT: Caution Aware Transfer in Reinforcement Learning via Distributional Risk


Aug 16, 2024

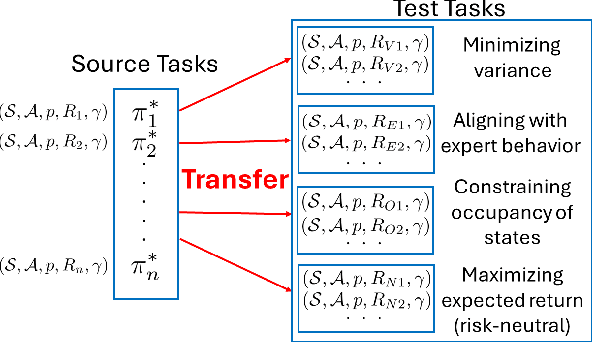

Transfer learning in reinforcement learning (RL) has become a key strategy for improving data efficiency on new, unseen tasks by reusing knowledge from previously learned tasks. It is especially useful in real-world deployment, where computational resources are constrained and agents must adapt rapidly to novel environments. However, current state-of-the-art methods often fail to ensure safety during transfer, particularly when unforeseen risks emerge at deployment. In this work, we address this limitation by introducing a Caution-Aware Transfer Learning (CAT) framework. Unlike traditional approaches that restrict risk considerations to mean-variance criteria, we define "caution" as a more general and comprehensive notion of risk. Our core innovation is to optimize, during transfer, a weighted sum of reward return and caution, where caution is defined on state-action occupancy measures; this allows a rich representation of diverse risk factors. To the best of our knowledge, this is the first work to explore the optimization of such a generalized risk notion in transfer RL. Our contributions are threefold: (1) We propose a Caution-Aware Transfer (CAT) framework that evaluates source policies in the test environment and constructs a new policy that balances reward maximization and caution. (2) We derive theoretical sub-optimality bounds for our method, providing rigorous guarantees of its efficacy. (3) We empirically validate CAT, demonstrating that it consistently outperforms existing methods by delivering safer policies under varying risk conditions in the test tasks.
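The weighted objective described above can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the tabular occupancy arrays, the sign convention (return minus weighted caution), and the linear risk cost used as the caution term are all assumptions chosen for illustration. The abstract only states that caution is a general functional of the state-action occupancy measure, so `caution_fn` is left as an arbitrary callable.

```python
import numpy as np

def reward_return(occupancy, reward):
    """Expected return as the inner product of occupancy measure and reward."""
    return float(np.sum(occupancy * reward))

def select_source_policy(occupancies, reward, caution_fn, caution_weight):
    """Score each source policy by return minus weighted caution; pick the best.

    occupancies: list of arrays of shape (n_states, n_actions), one per source
        policy, assumed to be estimated in the test environment.
    caution_fn: hypothetical callable mapping an occupancy array to a scalar risk.
    caution_weight: assumed non-negative trade-off coefficient.
    """
    scores = [
        reward_return(d, reward) - caution_weight * caution_fn(d)
        for d in occupancies
    ]
    return int(np.argmax(scores)), scores

# Toy example: 2 states x 2 actions, two source policies.
reward = np.array([[1.0, 0.0], [0.0, 2.0]])
risk_cost = np.array([[0.0, 0.0], [0.0, 3.0]])  # assumed per-(state, action) risk

def linear_caution(d):
    # One simple instance of a caution functional: expected risk cost.
    return float(np.sum(d * risk_cost))

d_safe = np.array([[0.5, 0.0], [0.5, 0.0]])   # avoids the risky state-action pair
d_risky = np.array([[0.1, 0.0], [0.0, 0.9]])  # higher return, higher risk

best_neutral, _ = select_source_policy([d_safe, d_risky], reward, linear_caution, 0.0)
best_cautious, _ = select_source_policy([d_safe, d_risky], reward, linear_caution, 1.0)
```

With a caution weight of zero the sketch picks the higher-return policy; with a weight of one it switches to the policy that avoids the risky state-action pair. Selecting among source policies is only one simple way to "construct a new policy"; the paper's actual construction may differ.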
