Boosting Simple Learners


Jan 31, 2020

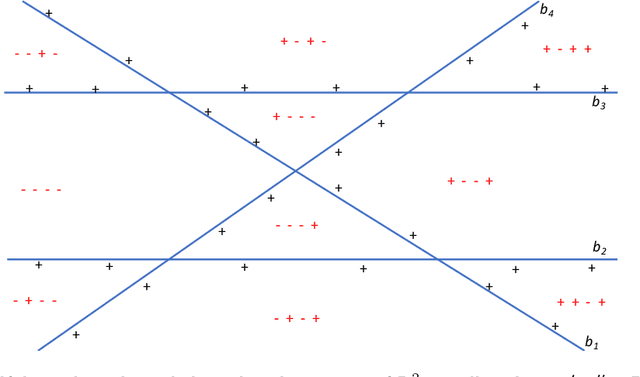

We consider boosting algorithms under the restriction that the weak learners come from a class of bounded VC-dimension. In this setting, we focus on two main questions. (i) Oracle complexity: we show that restricting the complexity of the weak learner significantly reduces the number of calls to the weak learner. We describe a boosting procedure that makes only $\tilde O(1/\gamma)$ calls to the weak learner, where $\gamma$ denotes the weak learner's advantage. This circumvents a lower bound of $\Omega(1/\gamma^2)$ due to Freund and Schapire ('95, '12) for the general case. Unlike previous boosting algorithms, which aggregate the weak hypotheses by majority votes, our method uses more complex aggregation rules, and we show this to be necessary. (ii) Expressivity: we ask what can be learned by boosting weak hypotheses of bounded VC-dimension. Towards this end, we identify a combinatorial-geometric parameter called the $\gamma$-VC dimension, which quantifies the expressivity of a class of weak hypotheses when used as part of a boosting procedure. We explore the limits of the $\gamma$-VC dimension and compute it for well-studied classes such as halfspaces and decision stumps. Along the way, we establish and exploit connections with discrepancy theory.
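For orientation, the classical setting that the abstract contrasts against can be sketched as follows. This is a minimal AdaBoost-style illustration, not the procedure proposed in the paper: it aggregates one-dimensional decision stumps by a weighted majority vote, and each round costs one call to the weak learner. The function names (`best_stump`, `adaboost`, `predict`) and the toy dataset are assumptions made for this example only.

```python
import math

def stump_predict(x, threshold, sign):
    # A decision stump on the real line: predicts `sign` at or above the
    # threshold and `-sign` below it.
    return sign if x >= threshold else -sign

def best_stump(xs, ys, weights):
    # Hypothetical weak learner: exhaustively pick the stump with the
    # lowest weighted error under the current distribution.
    best = None
    for threshold in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict(x, threshold, sign) != y)
            if best is None or err < best[0]:
                best = (err, threshold, sign)
    return best

def adaboost(xs, ys, rounds):
    # Classical boosting loop: one weak-learner call per round.
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        err, threshold, sign = best_stump(xs, ys, weights)
        err = min(max(err, 1e-12), 1 - 1e-12)  # keep the logarithm finite
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, sign))
        # Up-weight misclassified points, down-weight correct ones.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, sign))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    # Aggregation by weighted majority vote over the weak hypotheses.
    score = sum(alpha * stump_predict(x, threshold, sign)
                for alpha, threshold, sign in ensemble)
    return 1 if score >= 0 else -1

# Toy data: the negative labels form an interval, so no single stump fits it.
xs = [0, 1, 2, 3, 4, 5]
ys = [1, 1, -1, -1, 1, 1]

one_round = adaboost(xs, ys, rounds=1)
three_rounds = adaboost(xs, ys, rounds=3)
errors_one = sum(predict(one_round, x) != y for x, y in zip(xs, ys))
errors_three = sum(predict(three_rounds, x) != y for x, y in zip(xs, ys))
```

On this toy dataset a single stump cannot separate the labels, while the weighted vote of three stumps can. The number of rounds needed by such majority-vote schemes is what the $\Omega(1/\gamma^2)$ lower bound concerns in the general case.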
