BoMb-OT: On Batch of Mini-batches Optimal Transport


Feb 11, 2021

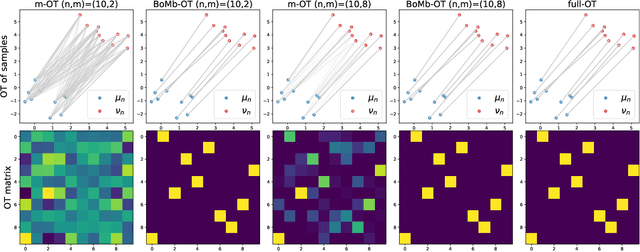

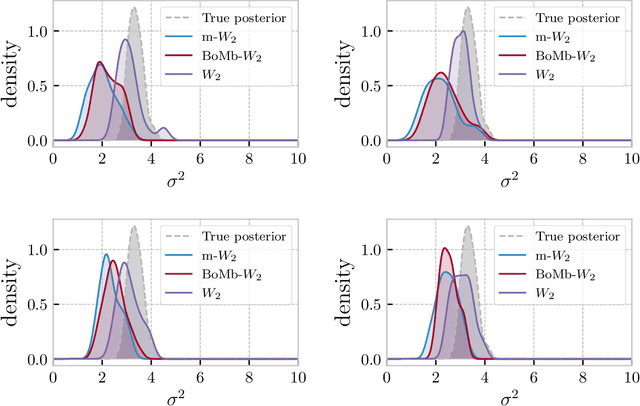

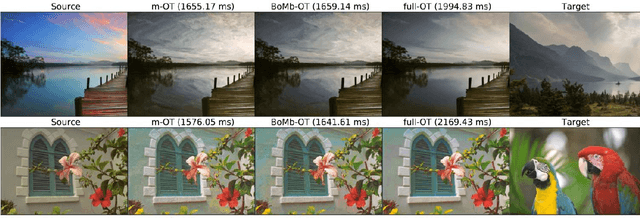

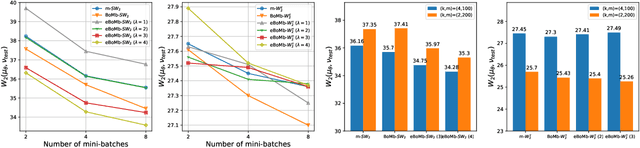

Mini-batch optimal transport (m-OT) has been successfully used in practical applications that involve probability measures with intractable densities, or probability measures with a very large number of support points. The m-OT solves several smaller optimal transport problems and then returns the average of their costs and transportation plans. Despite its scalability advantage, m-OT is not a proper metric between probability measures, since it does not satisfy the identity property: the m-OT value between a measure and itself is in general not zero. To address this problem, we propose a novel mini-batching scheme for optimal transport, named Batch of Mini-batches Optimal Transport (BoMb-OT), that can be formulated as a well-defined distance on the space of probability measures. Furthermore, we show that m-OT is a limit of the entropic regularized version of the proposed BoMb-OT when the regularization parameter goes to infinity. We carry out extensive experiments to show that the new mini-batching scheme can estimate a better transportation plan between the two original measures than m-OT. This leads to favorable performance of BoMb-OT in the matching and color transfer tasks. Furthermore, we observe that BoMb-OT also provides a better objective loss than m-OT for approximate Bayesian computation, for estimating parameters of interest in parametric generative models, and for learning non-parametric generative models with gradient flow.
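The contrast between the two schemes can be illustrated with a minimal sketch. It is not the authors' implementation, and it rests on several assumptions that the abstract does not state: mini-batches have equal size and uniform weights, the ground cost is squared Euclidean, m-OT is read as the plain average of the mini-batch OT costs over all pairs of mini-batches, and BoMb-OT is read as an optimal transport problem between the two collections of mini-batches with the mini-batch OT cost as ground cost. All function names are hypothetical. Under uniform weights and equal sizes, each OT problem reduces to an assignment problem, so SciPy's `linear_sum_assignment` is used as the exact solver.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment
from scipy.spatial.distance import cdist


def ot_cost_uniform(a, b):
    """Exact OT cost between two equal-size point clouds with uniform weights.

    Assumption: squared Euclidean ground cost. With uniform weights and equal
    sizes, an optimal plan is a permutation, so an assignment solver suffices.
    """
    cost = cdist(a, b, metric="sqeuclidean")
    rows, cols = linear_sum_assignment(cost)
    return cost[rows, cols].mean()


def minibatch_cost_matrix(x_batches, y_batches):
    """Matrix D with D[i, j] = OT cost between x_batches[i] and y_batches[j]."""
    return np.array([[ot_cost_uniform(x, y) for y in y_batches] for x in x_batches])


def m_ot(D):
    """m-OT read as the uniform average over all pairs of mini-batches (assumption)."""
    return D.mean()


def bomb_ot(D):
    """BoMb-OT read as OT between the two sets of mini-batches (assumption).

    Requires the same number of mini-batches on both sides (square D).
    """
    rows, cols = linear_sum_assignment(D)
    return D[rows, cols].mean()


def sample_minibatches(X, k, m, rng):
    """Draw k mini-batches of m points each, without replacement inside a batch."""
    return [X[rng.choice(len(X), size=m, replace=False)] for _ in range(k)]


# Compare a measure with itself, reusing the same mini-batches on both sides.
rng = np.random.default_rng(0)
X = rng.normal(size=(64, 2))
batches = sample_minibatches(X, k=4, m=8, rng=rng)
D_same = minibatch_cost_matrix(batches, batches)

print("m-OT   :", m_ot(D_same))
print("BoMb-OT:", bomb_ot(D_same))
```

In this toy setting the averaged value stays strictly positive even though both sides are the same measure, because different mini-batches are compared with each other, while the optimal coupling between mini-batches matches each mini-batch to itself and gives zero. The optimal assignment over `D` can never exceed the uniform average of `D`, which is consistent with the statement that m-OT arises as the limit of the entropic version when the regularization parameter goes to infinity.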
