Birds of a Feather Flock Together: A Close Look at Cooperation Emergence via Multi-Agent RL


Apr 23, 2021

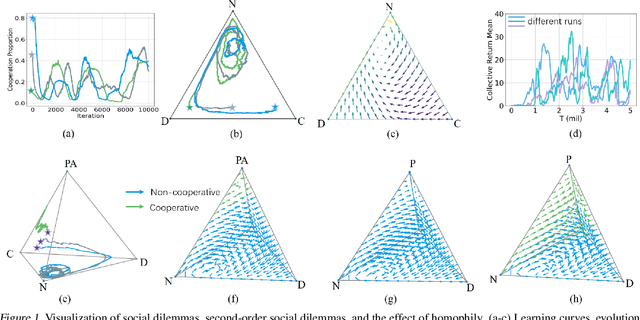

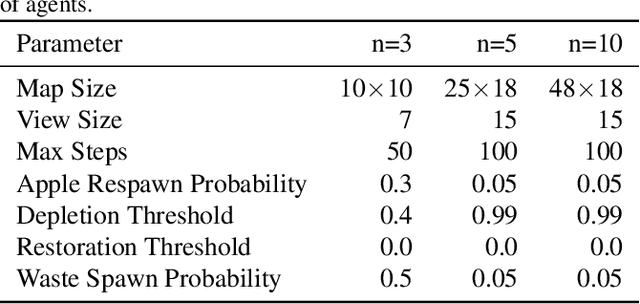

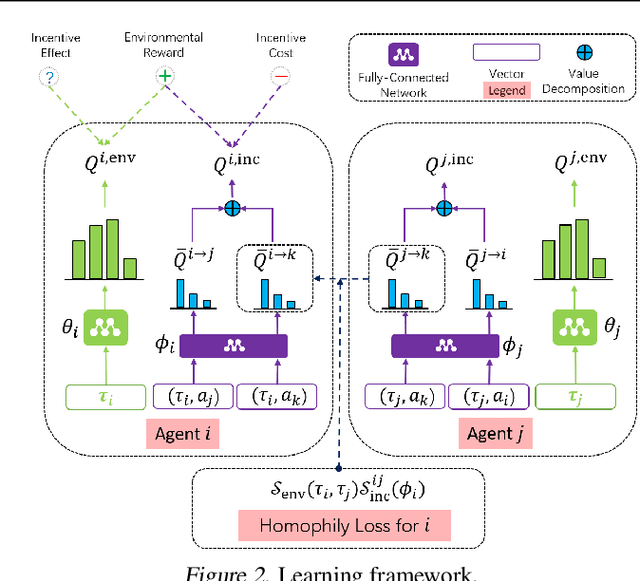

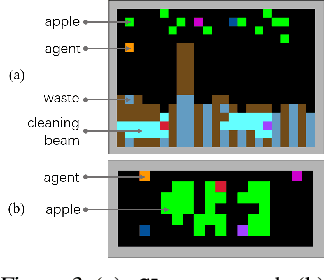

How cooperation emerges is a long-standing, interdisciplinary problem. Game-theoretic studies of social dilemmas show that altruistic incentives are critical to the emergence of cooperation, but those analyses are limited to stateless games. To study more realistic scenarios, researchers have used multi-agent reinforcement learning to model sequential social dilemmas (SSDs). Recent work shows that learning to incentivize other agents can promote cooperation in SSDs. However, under these incentive mechanisms the team's cooperation level does not converge. Instead, it oscillates between cooperation and defection throughout learning. We show that a second-order social dilemma created by the incentive mechanisms themselves is the main cause of this fragile cooperation. We analyze the dynamics of this second-order dilemma and find that homophily, a common human tendency, can resolve it. We propose a novel learning framework that encourages incentive homophily and show that it achieves stable cooperation in both the public goods dilemma and the tragedy of the commons dilemma.
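The first-order and second-order dilemmas in the abstract can be made concrete with a toy, stateless public goods game. This is only a minimal illustrative sketch. It is not the authors' SSD environments and not their homophily framework. The peer-reward stage, the function names `public_goods_payoffs` and `payoffs_with_rewards`, and the parameters `r` (pot multiplier), `b` (bonus per reward) and `k` (cost per reward given) are all hypothetical choices made for this example.

```python
from typing import List, Sequence


def public_goods_payoffs(contribs: Sequence[int], r: float) -> List[float]:
    """Stateless N-player public goods game (hypothetical toy setup).

    Each agent holds an endowment of 1 and contributes c_i in {0, 1}.
    The pot is multiplied by r (assumed 1 < r < n) and split equally,
    so switching from cooperate to defect changes one's own payoff by 1 - r/n > 0.
    """
    n = len(contribs)
    share = r * sum(contribs) / n
    return [1 - c + share for c in contribs]


def payoffs_with_rewards(contribs: Sequence[int], rewards: Sequence[int],
                         r: float, b: float, k: float) -> List[float]:
    """Adds a hypothetical peer-reward stage on top of the public goods game.

    An agent with rewards[i] == 1 pays cost k for every *other* cooperator
    and gives each of them a bonus b. Agents with rewards[i] == 0 pay nothing
    but still receive bonuses from others, which is the second-order free ride.
    """
    n = len(contribs)
    base = public_goods_payoffs(contribs, r)
    out = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        # Bonus received from every other rewarder, only if agent i cooperated.
        received = b * contribs[i] * sum(rewards[j] for j in others)
        # Cost agent i pays for rewarding every other cooperator.
        paid = k * rewards[i] * sum(contribs[j] for j in others)
        out.append(base[i] + received - paid)
    return out


if __name__ == "__main__":
    r = 2.0
    # First-order dilemma: a lone defector earns more than the cooperators.
    print(public_goods_payoffs([1, 1, 1, 1], r))  # [2.0, 2.0, 2.0, 2.0]
    print(public_goods_payoffs([0, 1, 1, 1], r))  # [2.5, 1.5, 1.5, 1.5]
    # With everyone rewarding, defecting no longer pays.
    print(payoffs_with_rewards([0, 1, 1, 1], [1, 1, 1, 1], r, b=1.0, k=0.25))  # [1.75, 4.0, 4.0, 4.0]
    # Second-order dilemma: a cooperator who stops rewarding earns the most.
    print(payoffs_with_rewards([1, 1, 1, 1], [1, 1, 1, 1], r, b=1.0, k=0.25))  # [4.25, 4.25, 4.25, 4.25]
    print(payoffs_with_rewards([1, 1, 1, 1], [0, 1, 1, 1], r, b=1.0, k=0.25))  # [5.0, 3.25, 3.25, 3.25]
```

In this toy setting, rewards make cooperation individually rational. However, each agent gains `k * (n - 1)` by withdrawing from the reward stage. If every agent follows that logic, rewarding collapses and the game falls back to the first-order dilemma. This is one way to read the cooperation/defection oscillation described above. The paper itself studies learned incentives in sequential games, not this one-shot payoff table.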
