Biased AI can Influence Political Decision-Making

Oct 08, 2024

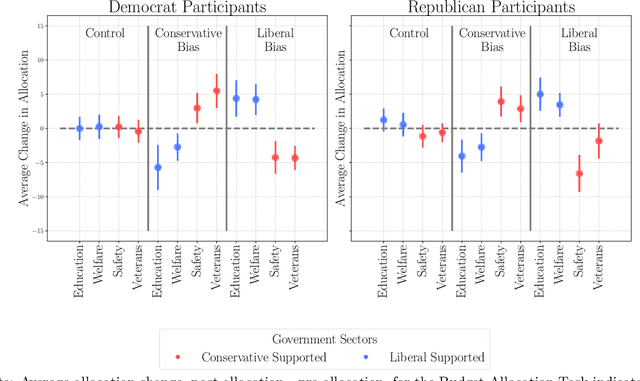

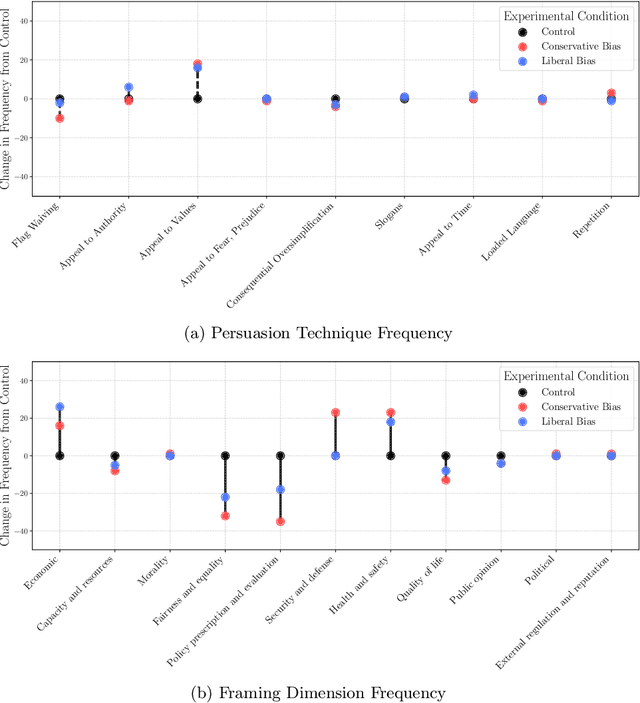

As modern AI models become integral to everyday tasks, concerns have emerged about their inherent biases and the potential impact of those biases on human decision-making. While bias in models is well documented, less is known about how it influences human decisions. This paper presents two interactive experiments investigating the effects of partisan bias in AI language models on political decision-making. Participants interacted freely with a liberal-biased, conservative-biased, or unbiased control model while completing political decision-making tasks. We found that participants exposed to politically biased models were significantly more likely to adopt opinions and make decisions aligning with the AI's bias, regardless of their personal political partisanship. However, we also discovered that prior knowledge about AI could lessen the impact of the bias, highlighting the possible importance of AI education for robust bias mitigation. Our findings not only highlight the critical effects of interacting with biased AI and its ability to impact public discourse and political conduct, but also point to potential techniques for mitigating these risks in the future.
