Beyond a Video Frame Interpolator: A Space Decoupled Learning Approach to Continuous Image Transition

Mar 18, 2022

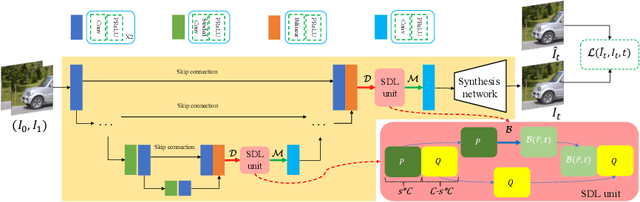

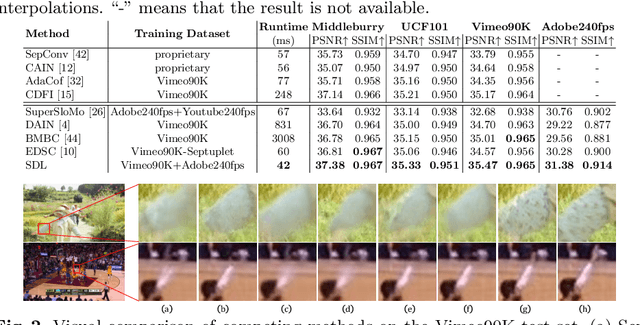

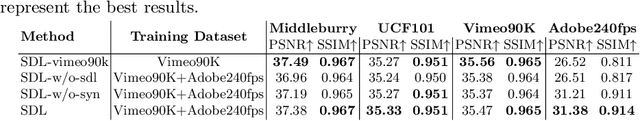

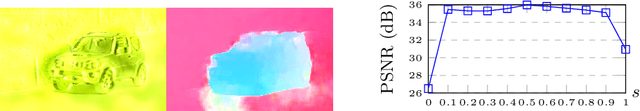

Video frame interpolation (VFI) aims to improve the temporal resolution of a video sequence. Most existing deep-learning-based VFI methods adopt off-the-shelf optical flow algorithms to estimate the bidirectional flows and interpolate the missing frames accordingly. Although they have achieved great success, these methods require considerable human experience to tune the bidirectional flows and often generate unpleasant results when the estimated flows are inaccurate. In this work, we rethink the VFI problem and formulate it as a continuous image transition (CIT) task, whose key issue is to transition an image from one space to another continuously. More specifically, we learn to implicitly decouple the images into a translatable flow space and a non-translatable feature space. The former depicts the translatable states between the given images, while the latter aims to reconstruct the intermediate features that cannot be directly translated. In this way, we can easily perform image interpolation in the flow space and intermediate image synthesis in the feature space, obtaining a CIT model. The proposed space decoupled learning (SDL) approach is simple to implement, and it provides an effective framework for a variety of CIT problems beyond VFI, such as style transfer and image morphing. Our extensive experiments on a variety of CIT tasks demonstrate the superiority of SDL over existing methods. The source code and models can be found at https://github.com/yangxy/SDL.
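The two-step idea in the abstract (interpolate in a translatable space, then synthesize the intermediate image) can be illustrated with a toy sketch. This is not the SDL architecture or code from the linked repository: the function names, the linear blend, and the `synthesize` callback are all assumptions made for illustration, and the real model learns the decoupling implicitly with a network.

```python
import numpy as np

def interpolate_translatable(z0, z1, t):
    """Blend two translatable-space codes at time t in [0, 1].

    Linear blending is an assumption here; the abstract does not
    specify the form of the interpolation.
    """
    return (1.0 - t) * z0 + t * z1

def toy_transition(z0, z1, synthesize, num_steps):
    """Return num_steps intermediate outputs between z0 and z1.

    `synthesize` is a hypothetical stand-in for the learned
    feature-space synthesis; it receives the blended code and t.
    The endpoints t=0 and t=1 are excluded.
    """
    ts = [(k + 1) / (num_steps + 1) for k in range(num_steps)]
    return [synthesize(interpolate_translatable(z0, z1, t), t) for t in ts]

# Toy usage: identity "synthesis" on 2x2 codes of zeros and ones.
z0 = np.zeros((2, 2))
z1 = np.ones((2, 2))
frames = toy_transition(z0, z1, lambda z, t: z, num_steps=3)
```

Because `t` is a continuous parameter, the same call can produce any number of intermediate states, which is the sense in which the transition is continuous.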
