BEL: A Bag Embedding Loss for Transformer enhances Multiple Instance Whole Slide Image Classification

Mar 02, 2023

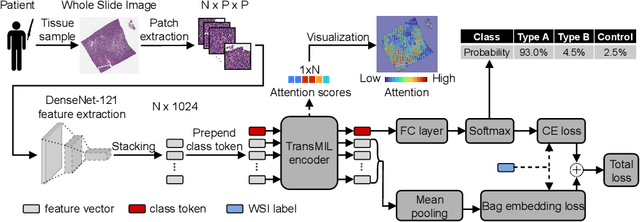

Multiple Instance Learning (MIL) has become the predominant approach for classification tasks on gigapixel histopathology whole slide images (WSIs). Within the MIL framework, each WSI (bag) is decomposed into patches (instances), with only WSI-level annotation available. Recent MIL approaches produce highly informative bag-level representations by exploiting the transformer architecture's ability to model dependencies between instances. However, when applied to high-magnification datasets, problems emerge due to the large number of instances and the weak supervisory signal. To address these problems, we propose to additionally train transformers with a novel Bag Embedding Loss (BEL). BEL forces the model to learn a discriminative bag-level representation by minimizing the distance between bag embeddings of the same class and maximizing the distance between bag embeddings of different classes. We evaluate BEL with the transformer architecture TransMIL on two publicly available histopathology datasets, BRACS and CAMELYON17. We show that with BEL, TransMIL outperforms the baseline models on both datasets, thus contributing to clinically relevant AI-based tumor classification of histological patient material.
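The abstract describes BEL only in words: pull together bag embeddings of the same class and push apart those of different classes. It does not give the formula. As a rough illustration of that idea, the sketch below uses a generic pairwise contrastive loss with a margin, which is a standard stand-in and not necessarily the formulation used in the paper. The function name `pairwise_bag_embedding_loss`, the `margin` parameter, and the choice of Euclidean distance are all assumptions made for this example.

```python
import math
from itertools import combinations


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def pairwise_bag_embedding_loss(embeddings, labels, margin=1.0):
    """Generic contrastive loss over bag embeddings (illustrative only).

    embeddings: list of bag-level vectors, one per WSI in the batch.
    labels:     list of WSI-level class labels, same length.
    margin:     hypothetical hyperparameter; different-class pairs that
                are already farther apart than this contribute no loss.
    """
    if len(embeddings) != len(labels):
        raise ValueError("embeddings and labels must have the same length")

    total, n_pairs = 0.0, 0
    for i, j in combinations(range(len(embeddings)), 2):
        d = euclidean(embeddings[i], embeddings[j])
        if labels[i] == labels[j]:
            # Same class: penalize any distance between the two bags.
            total += d ** 2
        else:
            # Different class: penalize only pairs closer than the margin.
            total += max(0.0, margin - d) ** 2
        n_pairs += 1

    # Average over pairs; a batch with fewer than two bags has no pairs.
    return total / n_pairs if n_pairs else 0.0


if __name__ == "__main__":
    bags = [[0.0, 0.0], [0.1, 0.0], [3.0, 4.0]]
    classes = [0, 0, 1]
    print(pairwise_bag_embedding_loss(bags, classes))
```

In a training setup such a term would typically be added to the usual classification loss with a weighting factor. That weighting, like the rest of this sketch, is an assumption and is not specified in the abstract.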
