Bayes-Probe: Distribution-Guided Sampling for Prediction Level Sets

Feb 19, 2020

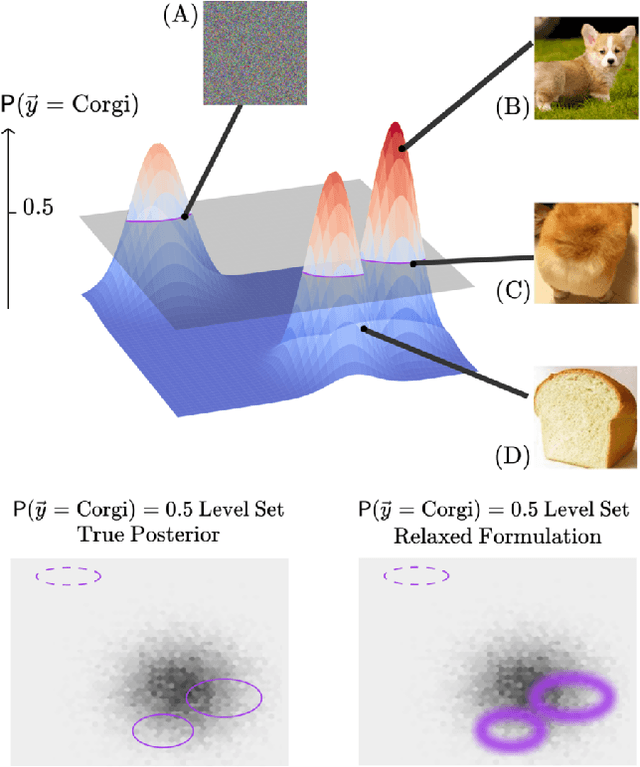

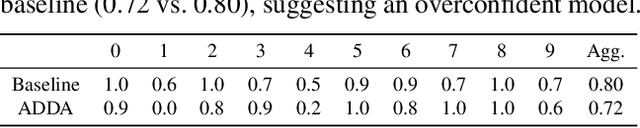

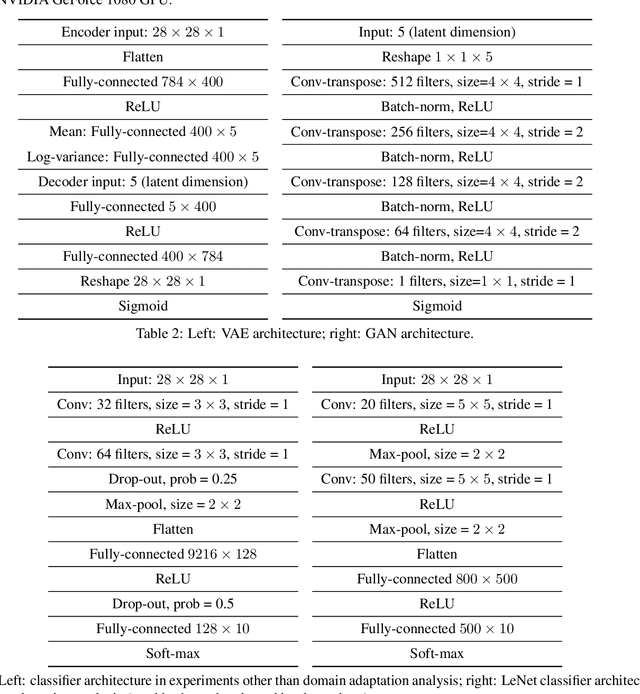

Building machine learning models requires a suite of tools for interpretation, understanding, and debugging. Many such methods have been proposed, but it remains difficult to probe for examples that communicate model behaviour. We introduce Bayes-Probe, a model inspection method for analyzing neural networks by generating distribution-conforming examples of known prediction confidence. By selecting appropriate distributions and prediction confidence values, Bayes-Probe can be used to synthesize ambivalent predictions, uncover in-distribution adversarial examples, and understand novel-class extrapolation and domain adaptation behaviours. Bayes-Probe is model agnostic, requiring only a data generator and the classifier's predictions. We use Bayes-Probe to analyze models trained on both procedurally generated data (CLEVR) and organic data (MNIST and Fashion-MNIST). Code is available at https://github.com/serenabooth/Bayes-Probe.
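To make the idea of sampling a prediction level set concrete, here is a minimal sketch. It is not the authors' implementation: it assumes a Metropolis-Hastings sampler, a standard normal prior over generator parameters, and a Gaussian likelihood centred on the target confidence. The `generator`, `classifier`, `log_posterior`, and `sample_level_set` names, the toy weights, and all hyperparameters are hypothetical stand-ins chosen for illustration.

```python
import numpy as np

def generator(z):
    # Hypothetical data generator: maps latent parameters z to a model input.
    return 2.0 * z

def classifier(x):
    # Hypothetical binary classifier: returns P(class 1 | x) via a fixed logistic model.
    w = np.array([1.5, -1.0])
    return 1.0 / (1.0 + np.exp(-(x @ w)))

def log_posterior(z, target, sigma):
    # Assumed prior: standard normal over z (the "distribution-conforming" part).
    log_prior = -0.5 * np.sum(z ** 2)
    # Assumed likelihood: Gaussian around the target prediction confidence.
    conf = classifier(generator(z))
    log_lik = -0.5 * ((conf - target) / sigma) ** 2
    return log_prior + log_lik

def sample_level_set(target, sigma=0.02, steps=5000, step_size=0.1,
                     burn_in=1000, seed=0):
    # Random-walk Metropolis-Hastings over the generator parameters.
    rng = np.random.default_rng(seed)
    z = np.zeros(2)
    lp = log_posterior(z, target, sigma)
    samples = []
    for i in range(steps):
        proposal = z + step_size * rng.standard_normal(2)
        lp_proposal = log_posterior(proposal, target, sigma)
        # Accept with probability min(1, posterior ratio).
        if np.log(rng.uniform()) < lp_proposal - lp:
            z, lp = proposal, lp_proposal
        if i >= burn_in:
            samples.append(z.copy())
    return np.array(samples)

# Draw generator parameters whose outputs the classifier scores near 0.5,
# i.e. ambivalent predictions.
samples = sample_level_set(target=0.5)
confs = classifier(generator(samples))
print("mean confidence:", confs.mean())
```

Under these assumptions, changing `target` would steer the sampler toward confidently classified inputs instead, while swapping the prior changes which region of the data distribution is explored. The sketch only queries the generator and the classifier's output, which mirrors the model-agnostic requirement stated in the abstract.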
