Backdoor Attacks against Transfer Learning with Pre-trained Deep Learning Models

Paper and Code

Jan 10, 2020

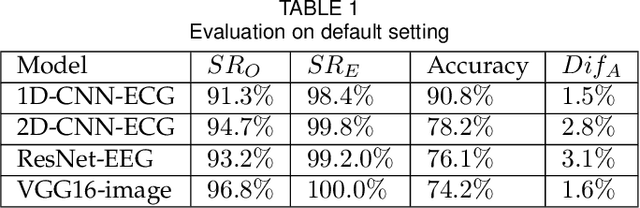

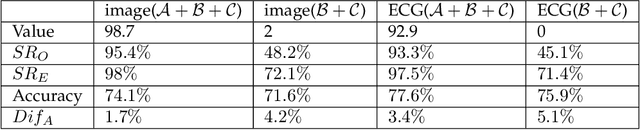

Transfer learning, which transfers the knowledge of Teacher models pre-trained on large datasets via fine-tuning, offers an effective way to customize accurate Student models quickly and feasibly. Many pre-trained Teacher models are publicly available and maintained by public platforms, which increases their vulnerability to backdoor attacks. In this paper, we demonstrate a backdoor threat to transfer learning tasks on both image and time-series data that leverages knowledge of publicly accessible Teacher models and is aimed at defeating three commonly adopted defenses: pruning-based, retraining-based, and input pre-processing-based defenses. Specifically, we propose (A) a ranking-based selection mechanism that speeds up the backdoor trigger generation and perturbation process while defeating pruning-based and/or retraining-based defenses; (B) autoencoder-powered trigger generation that produces a robust trigger capable of defeating the input pre-processing-based defense while guaranteeing that the selected neuron(s) can be significantly activated; and (C) defense-aware retraining that generates the manipulated model using reverse-engineered model inputs. We use real-world image and bioelectric signal analytics applications to demonstrate the power of our attack and conduct a comprehensive empirical analysis of the factors that may affect it. The efficiency, effectiveness, feasibility, and ease of such attacks are validated by empirically evaluating state-of-the-art image, electroencephalography (EEG), and electrocardiography (ECG) learning systems.
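To make component (A) more concrete, the following is a minimal sketch of what a ranking-based neuron selection step could look like. The abstract does not state the ranking criterion, so the score used here (mean absolute activation over clean inputs) is an assumption chosen for illustration, motivated by the fact that pruning-based defenses typically remove neurons that stay dormant on clean data. The function name `rank_neurons` and the toy activation matrix are hypothetical and do not come from the paper.

```python
import numpy as np

def rank_neurons(activations: np.ndarray, k: int):
    """Pick the k neurons with the highest mean absolute activation.

    activations: array of shape (n_samples, n_neurons) recorded from one
    layer of a Teacher model on clean inputs (assumed to be given).
    NOTE: this scoring rule is an illustrative assumption, not the
    criterion used by the paper.
    """
    scores = np.abs(activations).mean(axis=0)
    # Stable sort on negated scores gives a descending ranking.
    order = np.argsort(-scores, kind="stable")
    return order[:k], scores

# Toy activations: 3 clean samples, 4 neurons (made-up numbers).
acts = np.array([
    [0.1, 2.0, 0.0, 0.5],
    [0.2, 1.5, 0.0, 0.7],
    [0.0, 1.8, 0.1, 0.6],
])
top, scores = rank_neurons(acts, k=2)
print(top)  # indices of the two most active neurons
```

Under this assumption, neurons that are strongly active on clean data are less likely to be pruned, so a trigger tied to them would be more likely to survive a pruning-based defense. A real attack would also need to account for how much fine-tuning changes each neuron, which this sketch leaves out.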
