Autonomous Path Planning for Intercostal Robotic Ultrasound Imaging Using Reinforcement Learning

Apr 15, 2024

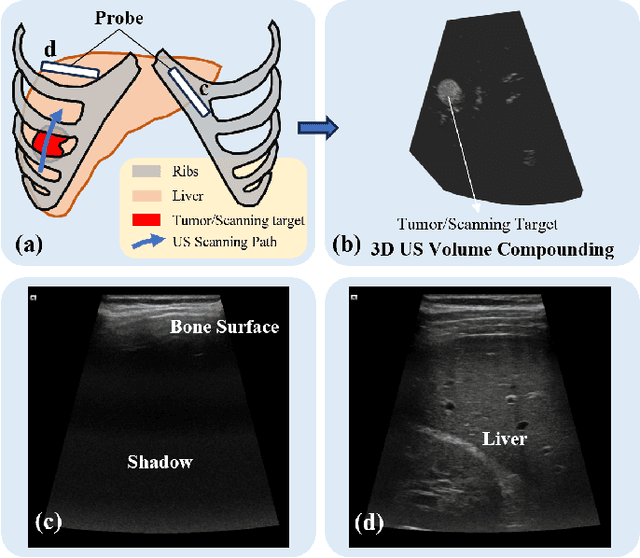

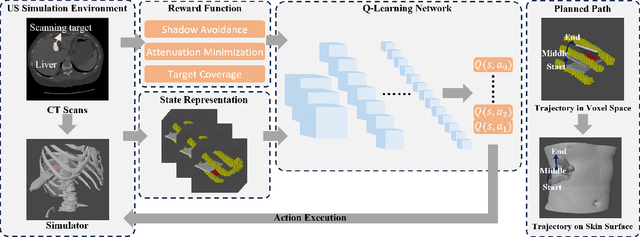

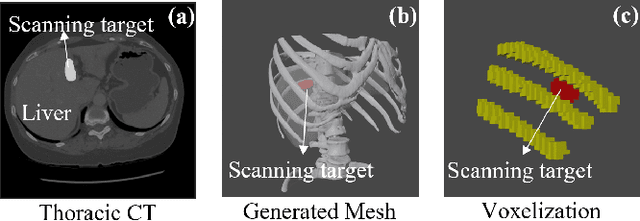

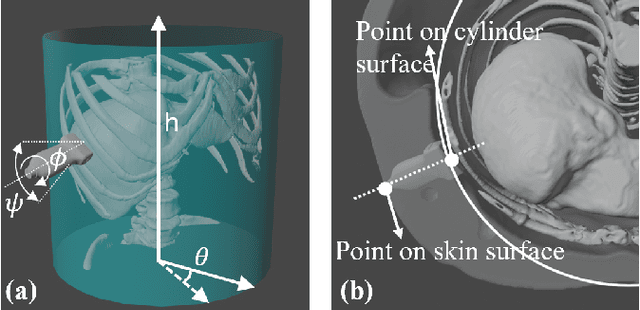

Ultrasound (US) is widely used in daily clinical practice for screening internal organs and guiding interventions. However, US examination of the thorax remains challenging because the subcutaneous rib cage casts acoustic shadows. Fully covering and reconstructing the region of interest for diagnosis therefore requires an intercostal scanning path. To tackle this challenge, we present a reinforcement learning (RL) approach for planning scanning paths between the ribs to monitor changes in lesions on internal organs, such as the liver and heart, that are covered by the rib cage. Structured anatomical information about the human skeleton is crucial for planning these intercostal paths. To obtain such anatomical insight, an RL agent is trained in a virtual environment constructed from computed tomography (CT) templates with randomly initialized tumors of various shapes and locations. In addition, task-specific state representations and reward functions are introduced to ensure that training converges while minimizing the effects of acoustic attenuation and shadows during scanning. To validate the proposed approach, experiments were carried out on unseen CTs with randomly defined single or multiple scanning targets. The results demonstrate the efficiency of the proposed RL framework in planning non-shadowed US scanning trajectories in areas with limited acoustic access.
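The abstract does not specify the state representation or reward function, so the following is only a toy sketch of the general idea of rewarding target visibility while penalizing rib shadows. Everything in it is an assumption made for illustration: the 2D label grid standing in for a CT slice, the straight-down single-column beam, and the names `TISSUE`, `BONE`, `TARGET`, `beam_view`, and `step_reward`. It is not the authors' formulation.

```python
# Toy illustration only: a 2D label grid stands in for a CT slice.
# Labels and reward shape are hypothetical, not taken from the paper.
TISSUE, BONE, TARGET = 0, 1, 2


def beam_view(ct_slice, col):
    """Walk a straight beam down column `col` from the skin surface.

    Returns (seen, shadowed): the number of target cells reached before
    the beam is blocked, and whether bone was hit along the way.
    """
    seen = 0
    for row in ct_slice:
        cell = row[col]
        if cell == BONE:
            # Everything below bone lies in acoustic shadow.
            return seen, True
        if cell == TARGET:
            seen += 1
    return seen, False


def step_reward(ct_slice, col, shadow_penalty=1.0):
    """Reward visible target cells; subtract a penalty if the beam hits bone."""
    seen, shadowed = beam_view(ct_slice, col)
    return seen - (shadow_penalty if shadowed else 0.0)


# Rows are depth (top row = skin surface), columns are lateral probe positions.
ct_slice = [
    [0, 0, 0, 0, 0],
    [1, 1, 0, 1, 1],  # ribs with an intercostal gap at column 2
    [0, 0, 0, 0, 0],
    [0, 2, 2, 2, 0],  # lesion lying beneath the ribs
]

rewards = [step_reward(ct_slice, c) for c in range(len(ct_slice[0]))]
best_col = max(range(len(rewards)), key=rewards.__getitem__)
```

In this toy grid only the probe position over the intercostal gap sees the lesion without hitting bone, so it receives the highest reward. A real environment would work on 3D CT volumes with a probe pose and a physically motivated attenuation model.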
