Automatic Multi-Class Cardiovascular Magnetic Resonance Image Quality Assessment using Unsupervised Domain Adaptation in Spatial and Frequency Domains

Dec 13, 2021

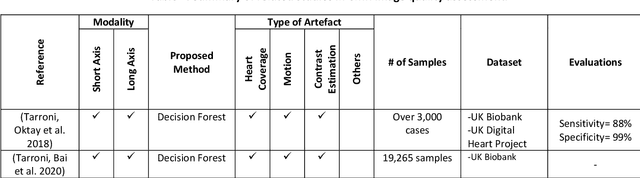

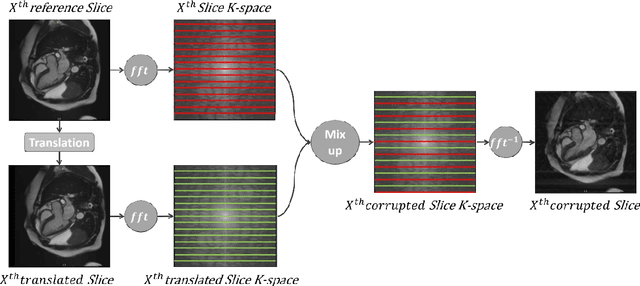

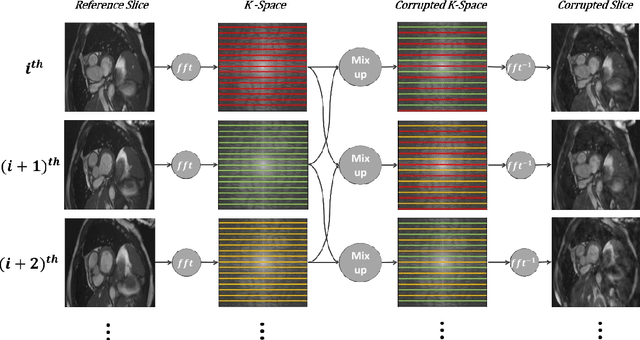

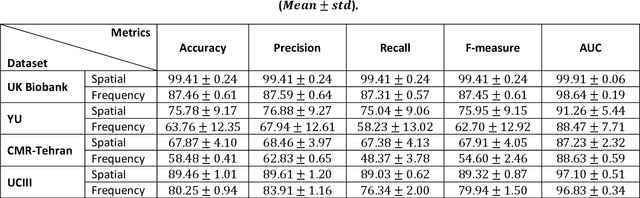

Population imaging studies rely on good-quality medical images before downstream image quantification. This study provides an automated approach to assessing image quality in cardiovascular magnetic resonance (CMR) imaging at scale. We identify four common CMR imaging artefacts: respiratory motion, cardiac motion, Gibbs ringing, and aliasing. The approach handles images acquired in different views, including two-, three-, and four-chamber long-axis and short-axis cine CMR images. Two deep learning-based models are proposed, one in the spatial domain and one in the frequency domain. Besides recognising these artefacts, the proposed models address the common challenge of not having access to data labels. An unsupervised domain adaptation method and a Fourier-based convolutional neural network are proposed to overcome these challenges. We show that the proposed models allow reliable CMR image quality assessment. On the UK Biobank dataset, the spatial model reaches accuracies of 99.41 ± 0.24% with supervised learning and 96.37 ± 0.66% with weakly supervised learning. Unsupervised domain adaptation can partly overcome the lack of data labels; the maximum domain gap coverage achieved is 16.86%. Domain adaptation can significantly improve a 5-class classification task and deal with considerable domain shift without data labels. The proposed frequency-domain model speeds up training and testing: it achieves the same accuracy as the spatial model while running 1.548 times faster. This model can also be applied directly to k-space data, with no need for image reconstruction.
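To make the spatial/frequency relationship mentioned above concrete, the following is a minimal NumPy sketch, not the authors' model. It assumes a single-coil, fully sampled Cartesian acquisition, in which k-space and the image are related by a 2D Fourier transform, and it imitates Gibbs ringing by discarding the outer (high-frequency) part of k-space. The function names, the synthetic phantom, and the `keep_fraction` parameter are hypothetical and chosen only for illustration.

```python
import numpy as np


def image_to_kspace(image):
    """Centered 2D FFT: map an image to (simulated) k-space."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))


def kspace_to_image(kspace):
    """Centered inverse 2D FFT: reconstruct an image from k-space."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(kspace)))


def truncate_kspace(kspace, keep_fraction):
    """Keep only a central window of k-space and zero the rest.

    Removing high spatial frequencies is a simple way to imitate
    Gibbs (truncation) ringing; it is an illustrative assumption,
    not the artefact model used in the paper.
    """
    rows, cols = kspace.shape
    keep_r = max(1, int(rows * keep_fraction))
    keep_c = max(1, int(cols * keep_fraction))
    r0 = (rows - keep_r) // 2
    c0 = (cols - keep_c) // 2
    mask = np.zeros(kspace.shape, dtype=bool)
    mask[r0:r0 + keep_r, c0:c0 + keep_c] = True
    return np.where(mask, kspace, 0)


# Synthetic phantom (hypothetical): a bright square on a dark background.
phantom = np.zeros((64, 64))
phantom[24:40, 24:40] = 1.0

kspace = image_to_kspace(phantom)

# Full k-space reconstructs the image up to floating-point error.
recovered = kspace_to_image(kspace).real

# Truncated k-space yields a blurred image with ripples near the edges.
ringing = kspace_to_image(truncate_kspace(kspace, 0.25)).real
```

Under these assumptions, a classifier that takes `kspace` (for example, its real and imaginary parts as two input channels) would skip the reconstruction step entirely, which is the kind of saving the abstract attributes to the frequency-domain model.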
