Attention Mechanisms for Object Recognition with Event-Based Cameras


Jul 25, 2018

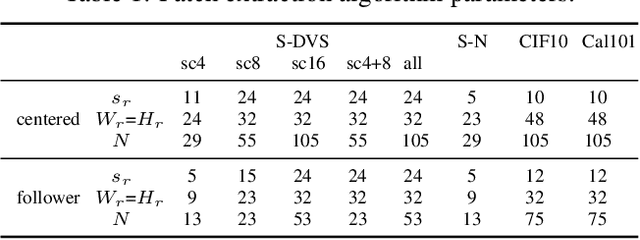

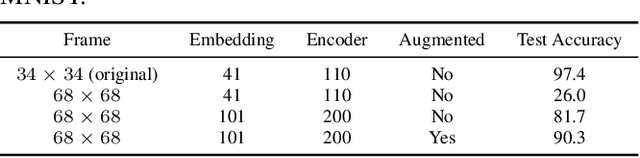

Event-based cameras are neuromorphic sensors that efficiently encode visual information as sparse sequences of events. Being biologically inspired, they are commonly used to exploit some of the computational and power-consumption benefits of biological vision. In this paper we focus on one specific feature of vision: visual attention. We propose two attentive models for event-based vision: an algorithm that tracks event activity within the field of view to locate regions of interest, and a fully differentiable attention procedure based on the DRAW neural model. We highlight the strengths and weaknesses of the proposed methods on two datasets, the Shifted N-MNIST and Shifted MNIST-DVS collections, using the Phased LSTM recognition network as a baseline reference model, and obtain improvements in both translation and scale invariance.
