Attention Deep Model with Multi-Scale Deep Supervision for Person Re-Identification


Nov 27, 2019

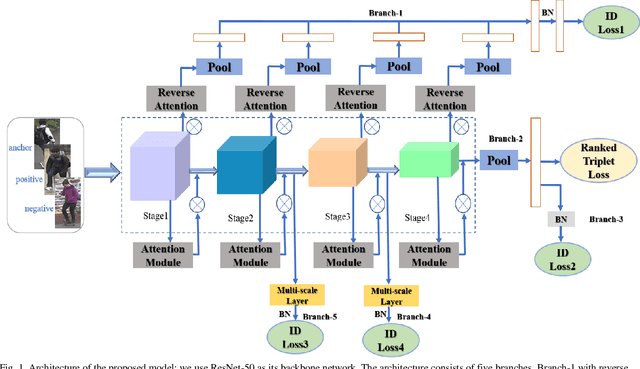

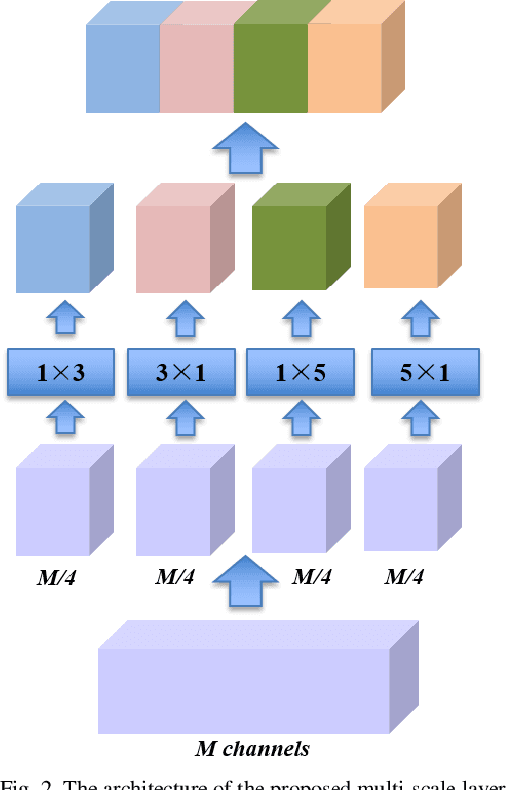

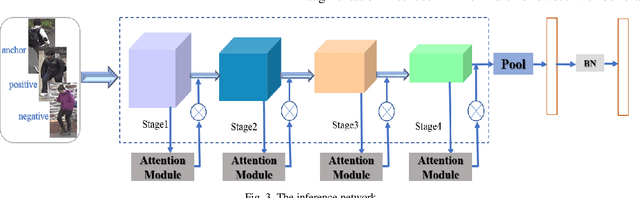

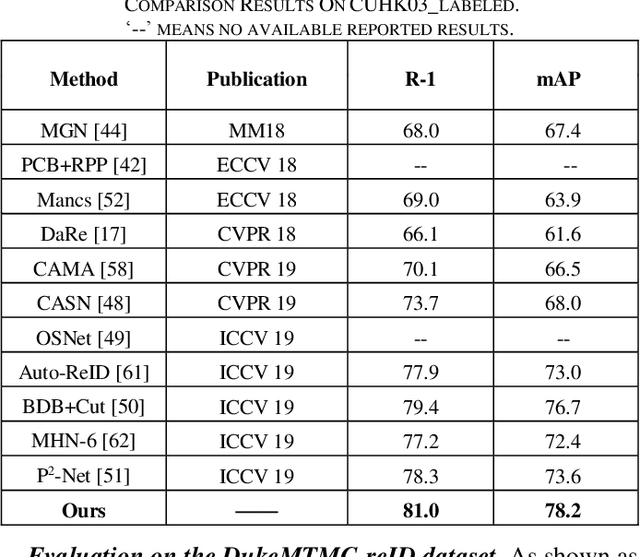

In recent years, person re-identification (PReID) has become a hot topic in computer vision because it is an important part of intelligent surveillance. Many state-of-the-art PReID methods are attention-based or multi-scale feature learning deep models. However, introducing an attention mechanism can cause some important feature information to be lost. In addition, most multi-scale models embed the multi-scale feature learning block into the feature extraction network, which reduces the efficiency of the inference network. To address these issues, this study introduces an attention deep architecture with multi-scale deep supervision for PReID. Technically, we contribute a reverse attention block that complements the attention block, and a novel multi-scale layer with a deep supervision operator for training the backbone network. The proposed block and operator are used only for training and are discarded in the test phase. Experiments were performed on the Market-1501, DukeMTMC-reID and CUHK03 datasets. The experimental results show that the proposed model significantly outperforms other competitive state-of-the-art methods.
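The idea of a reverse attention block complementing an attention block can be illustrated with a toy sketch. The abstract does not define either block, so everything here is an assumption made for illustration: the function name `attention_split`, the sigmoid gating, and the use of `1 - weight` as the complement are hypothetical and are not taken from the paper. The sketch only shows why a complementary branch can retain the information that an attention mask suppresses.

```python
import math


def sigmoid(x):
    """Standard logistic function, used here as a stand-in attention gate."""
    return 1.0 / (1.0 + math.exp(-x))


def attention_split(features, scores):
    """Split features into an attended part and a complementary part.

    features: one float per position (a flattened toy feature map).
    scores:   raw attention logits, same length (hypothetical input).
    Assumption: attention weight = sigmoid(score), reverse weight = 1 - weight.
    """
    if len(features) != len(scores):
        raise ValueError("features and scores must have the same length")
    weights = [sigmoid(s) for s in scores]
    attended = [f * w for f, w in zip(features, weights)]
    reverse = [f * (1.0 - w) for f, w in zip(features, weights)]
    return attended, reverse


features = [0.5, 2.0, -1.0, 3.0]
scores = [4.0, -4.0, 0.0, 1.0]
attended, reverse = attention_split(features, scores)

# Position 1 has a low attention score, so most of its value
# ends up in the reverse branch instead of being lost.
print(attended)
print(reverse)
```

Under this assumption the two branches sum back to the original features, so whatever the attention branch down-weights stays available to the complementary branch during training.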
