Attention-based Domain Adaptation for Time Series Forecasting


Feb 17, 2021

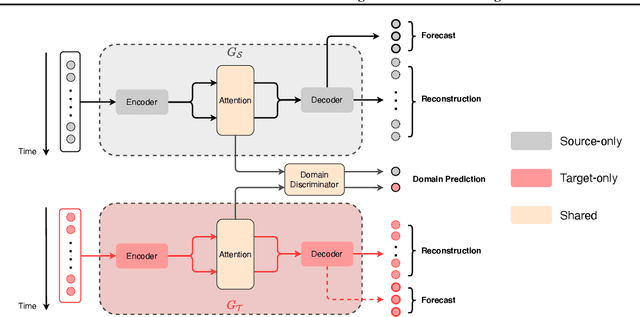

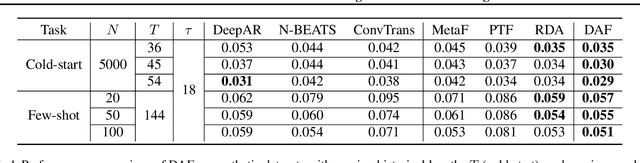

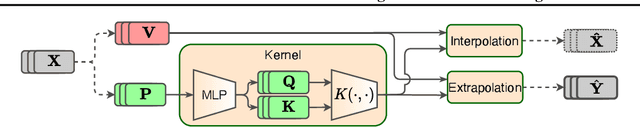

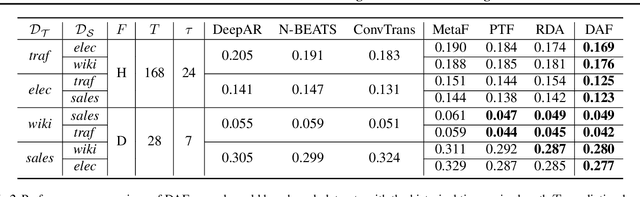

Recent years have witnessed deep neural networks gaining increasing popularity in the field of time series forecasting. A primary reason for their success is their ability to effectively capture complex temporal dynamics across multiple related time series. However, the advantages of these deep forecasters only start to emerge in the presence of a sufficient amount of data. This poses a challenge for typical forecasting problems in practice, where one has either a small number of time series, limited observations per time series, or both. To cope with this data scarcity, we propose a novel domain adaptation framework, Domain Adaptation Forecaster (DAF), that leverages the statistical strengths of another relevant domain with abundant data samples (source) to improve performance on the domain of interest with limited data (target). In particular, we propose an attention-based module shared across domains, paired with a domain discriminator, as well as private modules for the individual domains. This allows us to jointly train on the source and target domains, generating domain-invariant latent features while retaining domain-specific features. Extensive experiments on various domains demonstrate that our proposed method outperforms state-of-the-art baselines on synthetic and real-world datasets.
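To make the shared/private split concrete, the following is a minimal forward-pass sketch in plain Python. It is not the authors' implementation: the class names, the scalar weights, and the choice to compute queries and keys in the shared module while values and the decoder stay private are all assumptions made for illustration, and no training loop is shown.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SharedAttention:
    """Hypothetical module shared by both domains: builds queries and keys."""

    def __init__(self, rng):
        self.w_query = rng.uniform(0.5, 1.5)
        self.w_key = rng.uniform(0.5, 1.5)

    def features(self, series):
        queries = [self.w_query * x for x in series]
        keys = [self.w_key * x for x in series]
        return queries, keys

    def attend(self, query, keys, values):
        # Scalar dot-product attention: weights depend only on shared features.
        weights = softmax([query * k for k in keys])
        context = sum(w * v for w, v in zip(weights, values))
        return context, weights


class PrivateModule:
    """Hypothetical per-domain parts: a value encoder and a forecast decoder."""

    def __init__(self, rng):
        self.w_value = rng.uniform(0.5, 1.5)
        self.w_out = rng.uniform(0.5, 1.5)
        self.bias = rng.uniform(-0.1, 0.1)

    def encode_values(self, series):
        return [self.w_value * x for x in series]

    def decode(self, context):
        return self.w_out * context + self.bias


class DomainDiscriminator:
    """Hypothetical logistic classifier: was a shared feature from the source?"""

    def __init__(self, rng):
        self.w = rng.uniform(-1.0, 1.0)
        self.b = 0.0

    def prob_source(self, feature):
        return 1.0 / (1.0 + math.exp(-(self.w * feature + self.b)))


def forecast_next(series, shared, private):
    """One-step forecast: shared attention weights over private values."""
    queries, keys = shared.features(series)
    values = private.encode_values(series)
    context, weights = shared.attend(queries[-1], keys, values)
    return private.decode(context), weights, queries


def discriminator_loss(disc, queries, is_source):
    """Mean binary cross-entropy of the discriminator on shared features."""
    eps = 1e-12
    total = 0.0
    for q in queries:
        p = disc.prob_source(q)
        total += -math.log(p + eps) if is_source else -math.log(1.0 - p + eps)
    return total / len(queries)


rng = random.Random(0)
shared = SharedAttention(rng)
disc = DomainDiscriminator(rng)
source_private = PrivateModule(rng)
target_private = PrivateModule(rng)

# Synthetic stand-ins: a long source series and a short target series.
source_series = [math.sin(0.3 * t) for t in range(50)]
target_series = [0.5 * math.sin(0.3 * t) for t in range(8)]

src_pred, src_weights, src_q = forecast_next(source_series, shared, source_private)
tgt_pred, tgt_weights, tgt_q = forecast_next(target_series, shared, target_private)
adv_loss = discriminator_loss(disc, src_q, True) + discriminator_loss(disc, tgt_q, False)
```

In an adversarial setup of this kind, the discriminator would be trained to lower `adv_loss` while the shared module is trained to raise it, which pushes the shared features toward domain invariance; the per-domain forecast errors would be minimized alongside. How the paper balances these objectives is not stated in the abstract, so that part is left out here.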
