ASPCNet: A Deep Adaptive Spatial Pattern Capsule Network for Hyperspectral Image Classification

Paper and Code

Apr 25, 2021

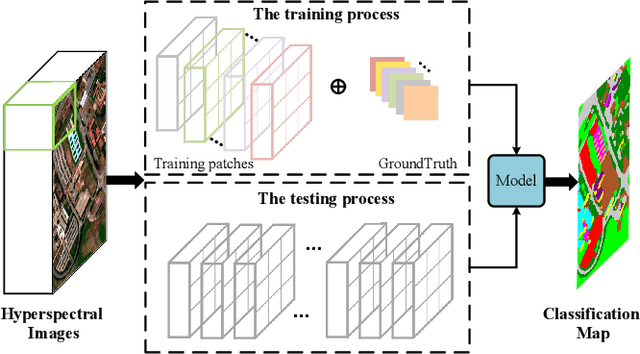

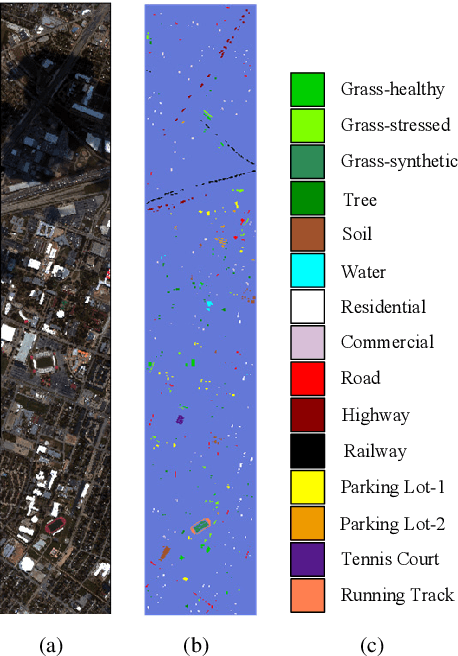

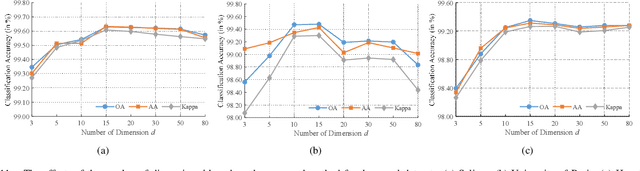

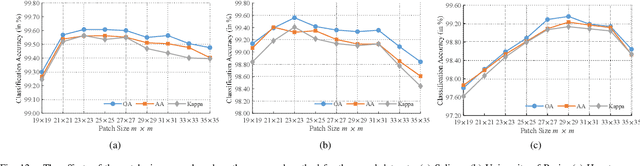

Previous studies have shown the great potential of capsule networks for the spatial contextual feature extraction from {hyperspectral images (HSIs)}. However, the sampling locations of the convolutional kernels of capsules are fixed and cannot be adaptively changed according to the inconsistent semantic information of HSIs. Based on this observation, this paper proposes an adaptive spatial pattern capsule network (ASPCNet) architecture by developing an adaptive spatial pattern (ASP) unit, that can rotate the sampling location of convolutional kernels on the basis of an enlarged receptive field. Note that this unit can learn more discriminative representations of HSIs with fewer parameters. Specifically, two cascaded ASP-based convolution operations (ASPConvs) are applied to input images to learn relatively high-level semantic features, transmitting hierarchical structures among capsules more accurately than the use of the most fundamental features. Furthermore, the semantic features are fed into ASP-based conv-capsule operations (ASPCaps) to explore the shapes of objects among the capsules in an adaptive manner, further exploring the potential of capsule networks. Finally, the class labels of image patches centered on test samples can be determined according to the fully connected capsule layer. Experiments on three public datasets demonstrate that ASPCNet can yield competitive performance with higher accuracies than state-of-the-art methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge