An Optimization Framework with Flexible Inexact Inner Iterations for Nonconvex and Nonsmooth Programming

Paper and Code

Jun 30, 2017

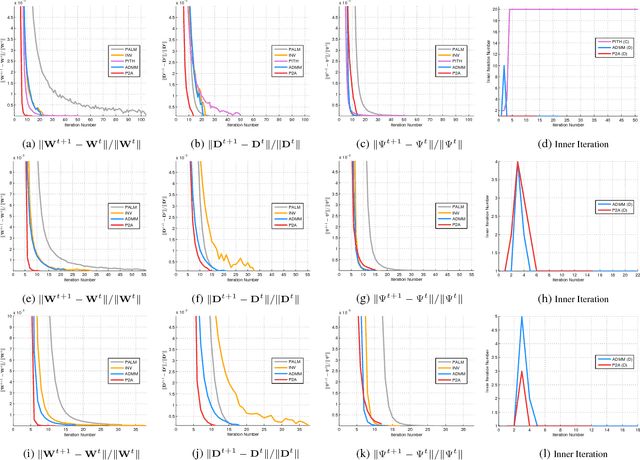

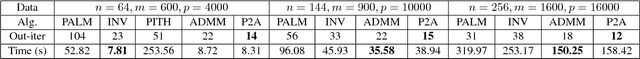

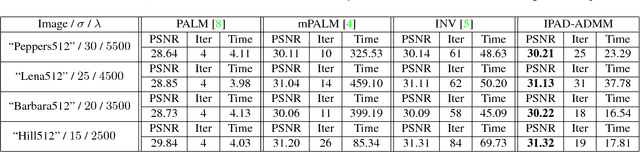

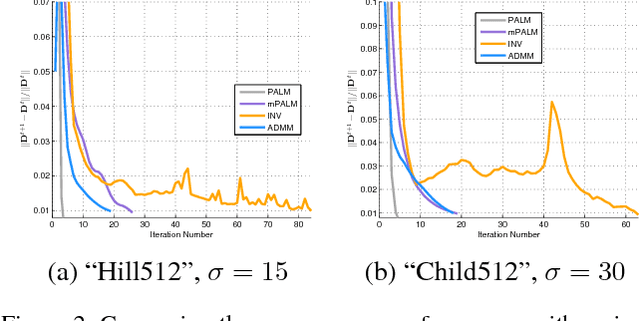

In recent years, numerous vision and learning tasks have been (re)formulated as nonconvex and nonsmooth programming problems (NNPs). Although some algorithms have been proposed for particular problems, designing fast and flexible optimization schemes with theoretical guarantees remains challenging for general NNPs. Inexact inner iterations have been observed to help in specific applications on a case-by-case basis, but their convergence behavior is still unclear. Motivated by this practical experience, this paper designs a novel algorithmic framework, named the inexact proximal alternating direction method (IPAD), for solving general NNPs. We demonstrate that any numerical algorithm can be incorporated into IPAD for solving the subproblems, and that the convergence of the resulting hybrid schemes is consistently guaranteed by a series of simple error conditions. Beyond the theoretical guarantee, numerical experiments on both synthesized and real-world data further demonstrate the superiority and flexibility of the IPAD framework in practical use.
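To make the idea of inexact inner iterations concrete, the following is a minimal Python sketch of a proximal alternating direction scheme whose first subproblem is solved only approximately by an inner gradient loop. It is an illustration under stated assumptions, not the paper's algorithm: the test problem (least squares with an l0 penalty, split as x = z), the function name `inexact_prox_admm`, all parameters, and in particular the relative stopping rule for the inner loop are hypothetical stand-ins, since the abstract does not state the actual error conditions.

```python
import numpy as np

def inexact_prox_admm(A, b, lam=0.1, rho=1.0, tau=1.0, c=0.5,
                      outer=50, max_inner=100):
    """Sketch for: min 0.5*||Ax - b||^2 + lam*||z||_0  s.t.  x = z.

    Scaled-dual alternating direction scheme with a proximal term
    (tau/2)*||x - x_prev||^2 in the x-subproblem. The x-subproblem is
    solved inexactly by gradient descent (any inner solver could be used).
    """
    n = A.shape[1]
    x, z, u = np.zeros(n), np.zeros(n), np.zeros(n)
    # Step size 1/L, where L bounds the Lipschitz constant of the
    # x-subproblem gradient.
    step = 1.0 / (np.linalg.norm(A, 2) ** 2 + rho + tau)
    thr = np.sqrt(2.0 * lam / rho)  # hard-threshold level for the l0 prox

    for _ in range(outer):
        x_prev = x.copy()
        # Inexact inner iterations on the x-subproblem.
        for _ in range(max_inner):
            grad = (A.T @ (A @ x - b) + rho * (x - z + u)
                    + tau * (x - x_prev))
            # ASSUMED error condition (not taken from the paper): stop once
            # the subproblem gradient is small relative to the step taken.
            if np.linalg.norm(grad) <= c * np.linalg.norm(x - x_prev):
                break
            x = x - step * grad
        # z-subproblem: the prox of the l0 penalty is hard thresholding.
        v = x + u
        z = np.where(np.abs(v) > thr, v, 0.0)
        # Scaled dual update: u <- u + x - z.
        u = v - z
    return x, z, u
```

A call such as `x, z, u = inexact_prox_admm(A, b, lam=0.1)` returns the smooth-block iterate, the sparse iterate, and the scaled multiplier. The inner loop could be swapped for any other numerical solver without changing the outer scheme, which is the kind of flexibility the abstract describes; whether a given stopping rule preserves convergence is exactly what the paper's error conditions are meant to settle.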
