An Optimal Transport Approach to Personalized Federated Learning

Paper and Code

Jun 06, 2022

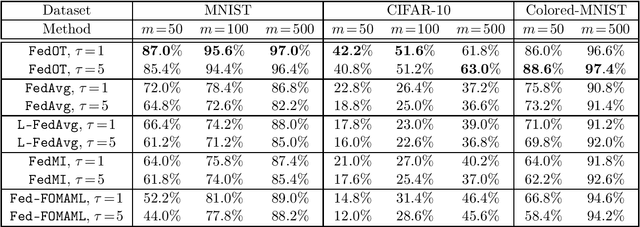

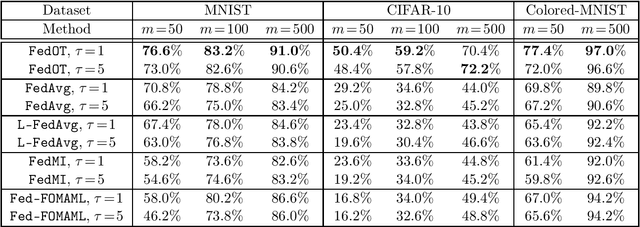

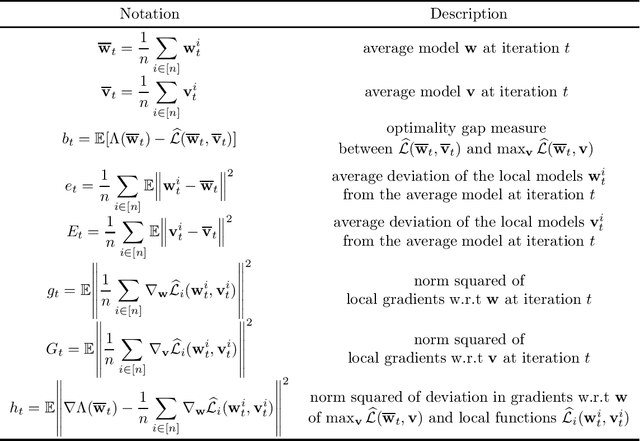

Federated learning is a distributed machine learning paradigm, which aims to train a model using the local data of many distributed clients. A key challenge in federated learning is that the data samples across the clients may not be identically distributed. To address this challenge, personalized federated learning with the goal of tailoring the learned model to the data distribution of every individual client has been proposed. In this paper, we focus on this problem and propose a novel personalized Federated Learning scheme based on Optimal Transport (FedOT) as a learning algorithm that learns the optimal transport maps for transferring data points to a common distribution as well as the prediction model under the applied transport map. To formulate the FedOT problem, we extend the standard optimal transport task between two probability distributions to multi-marginal optimal transport problems with the goal of transporting samples from multiple distributions to a common probability domain. We then leverage the results on multi-marginal optimal transport problems to formulate FedOT as a min-max optimization problem and analyze its generalization and optimization properties. We discuss the results of several numerical experiments to evaluate the performance of FedOT under heterogeneous data distributions in federated learning problems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge