An End-To-End Stuttering Detection Method Based On Conformer And BILSTM

Paper and Code

Nov 14, 2024

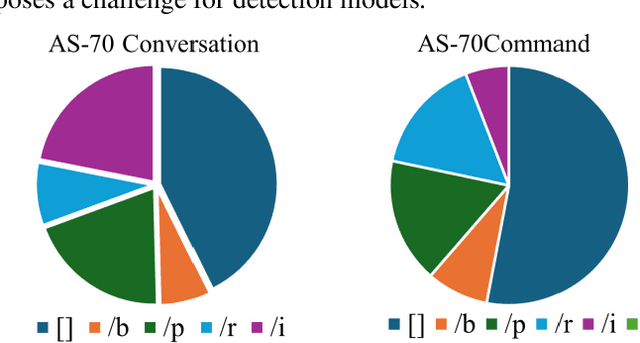

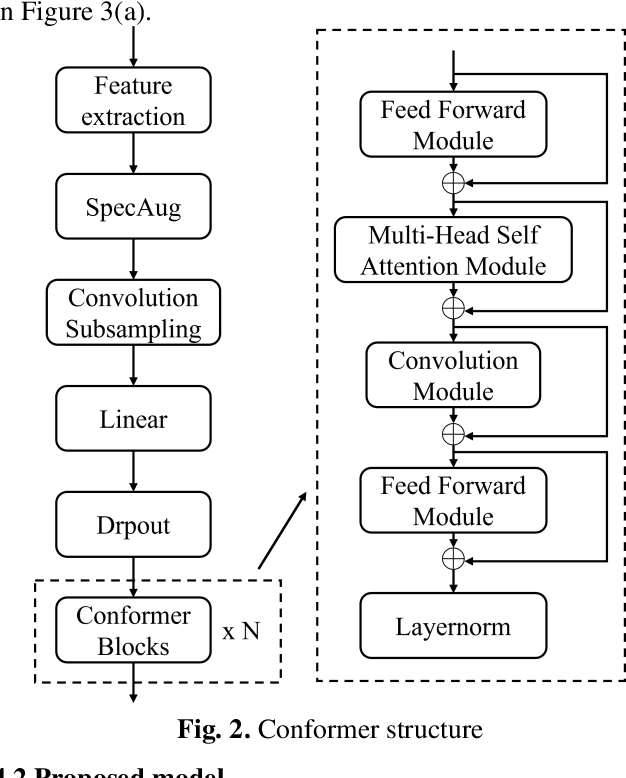

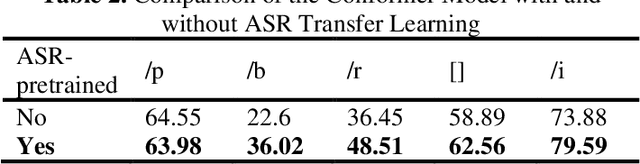

Stuttering is a neurodevelopmental speech disorder characterized by symptoms such as pauses, exclamations, repetitions, and prolongations. Speech-language pathologists typically assess the type and severity of stuttering by observing these symptoms. Many effective end-to-end methods exist for stuttering detection, but a commonly overlooked challenge is the unclear relationship between the tasks involved in this process. A suitable multi-task strategy could therefore improve stuttering detection performance. This paper presents a novel stuttering event detection model designed to help speech-language pathologists assess both the type and severity of stuttering. First, a Conformer model extracts acoustic features from stuttered speech, and a Long Short-Term Memory (LSTM) network then captures contextual information. Finally, we explore multi-task learning for stuttering detection and propose an effective multi-task strategy. Experimental results show that our model outperforms current state-of-the-art methods for stuttering detection. In the SLT 2024 Stuttering Speech Challenge, based on the AS-70 dataset [1], our model improved the mean F1 score by 24.8% over the baseline method and achieved first place. Building on this result, we conducted further experiments on the LSTM and on multi-task learning strategies. The results show that our proposed method improves the mean F1 score by 39.8% over the baseline method.
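The results above are reported as a mean F1 score. As a rough illustration of how such a figure can be computed for multi-label stuttering detection, the sketch below calculates a per-class F1 and averages it across classes. It assumes the mean is an unweighted (macro) average over stuttering types, which the abstract does not state; the label names in `LABELS`, the helper names `f1_score` and `mean_f1`, and the toy data are hypothetical and are not taken from the paper or the challenge.

```python
from typing import Dict, List, Sequence

# Hypothetical class names, borrowed from the symptoms named in the abstract.
# The actual label set used in the challenge is not given in this text.
LABELS = ["pause", "exclamation", "repetition", "prolongation"]


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Binary F1 for one class; returns 0.0 when there are no positives at all."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def mean_f1(y_true: List[List[int]], y_pred: List[List[int]]) -> Dict[str, float]:
    """Per-class F1 plus their unweighted mean (assumed macro averaging)."""
    scores: Dict[str, float] = {}
    for j, name in enumerate(LABELS):
        true_col = [row[j] for row in y_true]
        pred_col = [row[j] for row in y_pred]
        scores[name] = f1_score(true_col, pred_col)
    scores["mean"] = sum(scores[name] for name in LABELS) / len(LABELS)
    return scores


# Toy data: each row is one utterance, each column one class in LABELS.
demo_true = [[1, 0, 1, 0], [0, 1, 1, 0], [1, 0, 0, 1], [0, 0, 1, 1]]
demo_pred = [[1, 0, 1, 0], [0, 0, 1, 0], [1, 1, 0, 1], [0, 0, 0, 1]]

if __name__ == "__main__":
    for name, value in mean_f1(demo_true, demo_pred).items():
        print(f"{name}: {value:.3f}")
```

Because each utterance can carry several stuttering events at once, every class is scored as its own binary problem before averaging, so a rare class counts as much as a frequent one under this assumed scheme.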
