Align, Adapt and Inject: Sound-guided Unified Image Generation


Jun 20, 2023

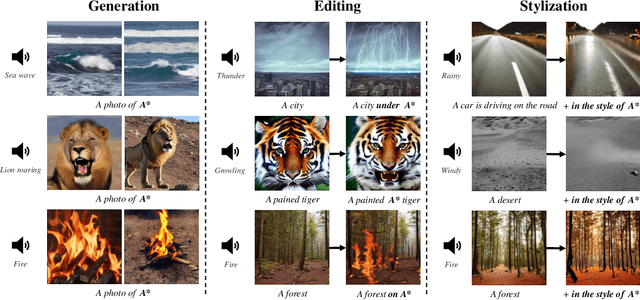

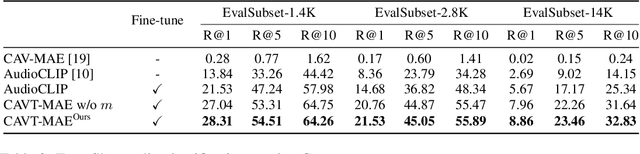

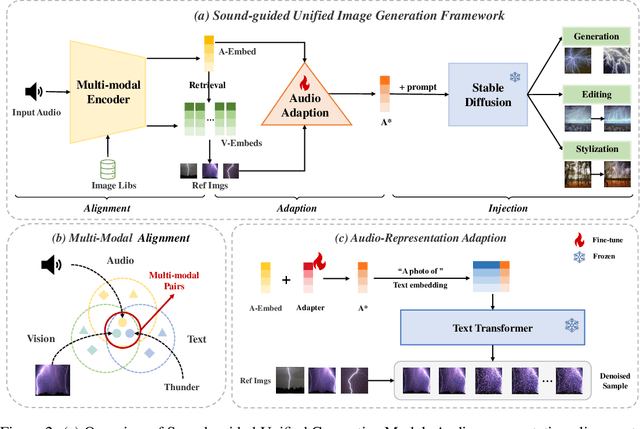

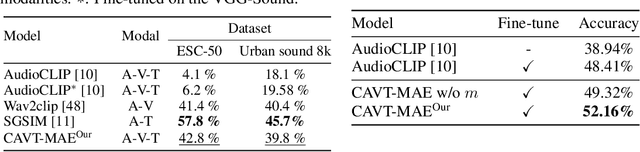

Text-guided image generation has witnessed unprecedented progress due to the development of diffusion models. Beyond text and image, sound is a vital element of human perception, offering vivid representations and naturally coinciding with corresponding scenes. Taking advantage of sound is therefore a promising direction for image generation research. However, the relationship between audio and image supervision remains significantly underexplored, and the scarcity of related, high-quality datasets poses a further obstacle. In this paper, we propose a unified framework, 'Align, Adapt, and Inject' (AAI), for sound-guided image generation, editing, and stylization. In particular, our method adapts input sound into a sound token, like an ordinary word, which can plug and play with existing powerful diffusion-based Text-to-Image (T2I) models. Specifically, we first train a multi-modal encoder to align the audio representation with the pre-trained textual manifold and visual manifold, respectively. Then, we propose an audio adapter that adapts the audio representation into an audio token enriched with specific semantics, which can be flexibly injected into a frozen T2I model. In this way, we are able to extract the dynamic information of varied sounds while utilizing the formidable capability of existing T2I models to facilitate sound-guided image generation, editing, and stylization in a convenient and cost-effective manner. Experimental results confirm that our proposed AAI outperforms other text-guided and sound-guided state-of-the-art methods. Our aligned multi-modal encoder is also competitive with other approaches on audio-visual retrieval and audio-text retrieval tasks.
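The "adapt" and "inject" steps described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the linear adapter, the `<sound>` placeholder, the dimensions, and every function name below are assumptions made for illustration, and the aligned audio representation is simply given as a fixed vector rather than produced by a trained multi-modal encoder.

```python
import random

EMBED_DIM = 8  # toy size; real text encoders use far larger embeddings
AUDIO_DIM = 4  # toy size of the (assumed already aligned) audio representation


def make_adapter(audio_dim, embed_dim, seed=0):
    """Build a toy linear adapter (weights, bias).

    Hypothetical stand-in for the paper's audio adapter, whose real
    architecture is not described in the abstract.
    """
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(audio_dim)]
               for _ in range(embed_dim)]
    bias = [0.0] * embed_dim
    return weights, bias


def adapt(audio_repr, adapter):
    """Map an audio representation to one token embedding of size EMBED_DIM."""
    weights, bias = adapter
    return [sum(w * x for w, x in zip(row, audio_repr)) + b
            for row, b in zip(weights, bias)]


def inject(prompt_tokens, token_embeddings, sound_token, placeholder="<sound>"):
    """Build the prompt's embedding sequence, swapping the placeholder
    for the sound token. The embedding table itself is left untouched,
    mirroring the idea of a frozen T2I model."""
    return [sound_token if tok == placeholder else token_embeddings[tok]
            for tok in prompt_tokens]


# Hypothetical frozen text-embedding table for a tiny vocabulary.
rng = random.Random(1)
vocab = ["a", "photo", "of"]
token_embeddings = {tok: [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
                    for tok in vocab}

audio_repr = [0.5, -0.2, 0.1, 0.9]           # made-up aligned audio features
adapter = make_adapter(AUDIO_DIM, EMBED_DIM)
sound_token = adapt(audio_repr, adapter)     # "adapt": audio -> token embedding

prompt = ["a", "photo", "of", "<sound>"]
sequence = inject(prompt, token_embeddings, sound_token)  # "inject"
```

In this sketch the resulting `sequence` would be passed to the text-conditioning input of a frozen T2I model in place of an ordinary prompt embedding; only the adapter would carry trainable parameters.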
