Algorithmic Recourse in the Face of Noisy Human Responses

Paper and Code

Mar 13, 2022

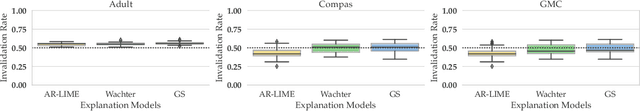

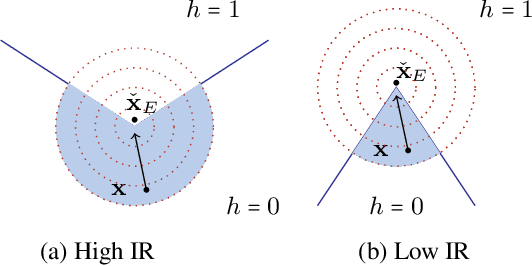

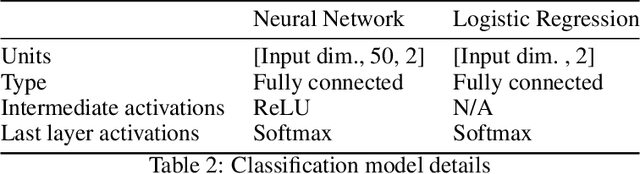

As machine learning (ML) models are increasingly deployed in high-stakes applications, there has been growing interest in providing recourse to individuals adversely impacted by model predictions (e.g., an applicant whose loan has been denied). To this end, several post hoc techniques have been proposed in recent literature. These techniques generate recourses under the assumption that the affected individuals will implement the prescribed recourses exactly. However, recent studies suggest that individuals often implement recourses in a noisy and inconsistent manner (e.g., raising their salary by \$505 when the prescribed recourse suggested an increase of \$500). Motivated by this, we introduce and study the problem of recourse invalidation in the face of noisy human responses. More specifically, we theoretically and empirically analyze the behavior of state-of-the-art algorithms, and demonstrate that the recourses generated by these algorithms are very likely to be invalidated if small changes are made to them. We further propose a novel framework, EXPECTing noisy responses (EXPECT), which addresses the aforementioned problem by explicitly minimizing the probability of recourse invalidation in the face of noisy responses. Experimental evaluation with multiple real-world datasets demonstrates the efficacy of the proposed framework and supports our theoretical findings.
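To make the notion of recourse invalidation concrete, the following is a minimal sketch that estimates how often a prescribed recourse stops being valid once it is implemented with noise. It is not the EXPECT framework itself. It assumes a hypothetical linear classifier (weights `w`, bias `b`, positive outcome when the score is above zero) and zero-mean Gaussian noise with standard deviation `sigma`; the function names and all numbers are illustrative assumptions, not taken from the paper.

```python
import math
import numpy as np

def invalidation_rate(w, b, x_recourse, sigma, n_samples=10_000, seed=0):
    """Monte Carlo estimate of P(noisy recourse is classified negatively).

    Assumes a linear classifier score = w . x + b (positive iff score > 0)
    and isotropic Gaussian noise added to the prescribed recourse.
    """
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, sigma, size=(n_samples, x_recourse.shape[0]))
    scores = (x_recourse + noise) @ w + b
    return float(np.mean(scores <= 0))

def invalidation_rate_closed_form(w, b, x_recourse, sigma):
    """Closed form for the same linear/Gaussian assumption: Phi(-margin)."""
    margin = (x_recourse @ w + b) / (sigma * np.linalg.norm(w))
    return 0.5 * math.erfc(margin / math.sqrt(2.0))

# Hypothetical classifier and two candidate recourses (illustrative values).
w = np.array([1.0, 1.0])
b = -1.0
sigma = 0.1

near_boundary = np.array([0.5, 0.51])  # barely crosses the decision boundary
with_margin = np.array([0.8, 0.8])     # costlier, but further from the boundary

rate_near = invalidation_rate(w, b, near_boundary, sigma)
rate_margin = invalidation_rate(w, b, with_margin, sigma)
exact_near = invalidation_rate_closed_form(w, b, near_boundary, sigma)

print(f"near boundary: {rate_near:.3f} (closed form {exact_near:.3f})")
print(f"with margin:   {rate_margin:.3f}")
```

Under these assumptions, the recourse that sits just past the decision boundary is invalidated by noise close to half of the time, while the one with a larger margin almost never is. This is the trade-off between recourse cost and invalidation probability that the abstract describes.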
