Agent-Centric Relation Graph for Object Visual Navigation

Dec 07, 2021

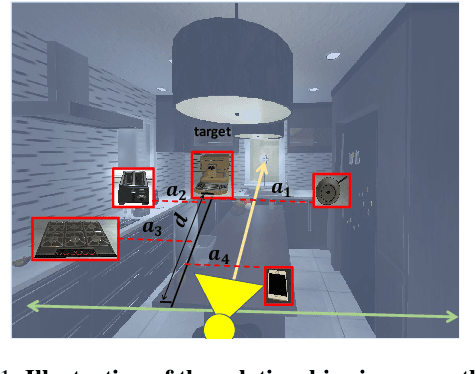

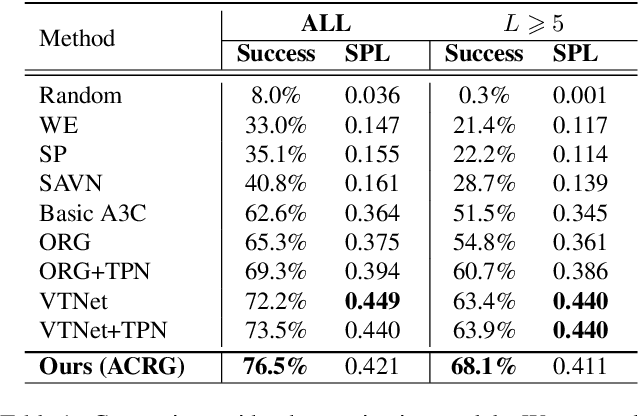

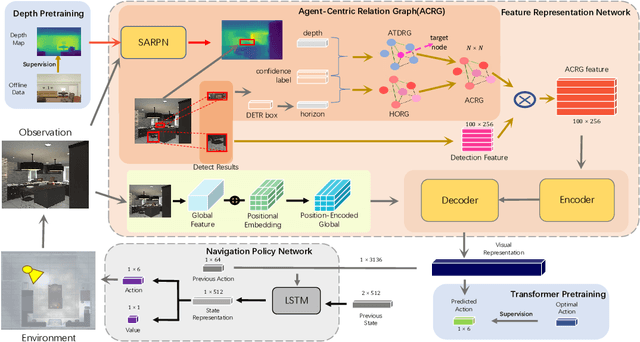

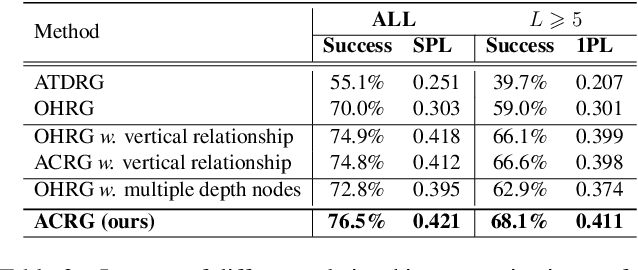

Object visual navigation aims to steer an agent toward a target object based on the agent's visual observations. The task requires both a sound perception of the environment and accurate control of the agent. We introduce an Agent-Centric Relation Graph (ACRG) that learns visual representations from relationships in the environment. ACRG consists of two relationships: the relationship among objects, and the relationship between the agent and the target. For the first, we design the Object Horizontal Relationship Graph (OHRG), which stores the relative horizontal locations of objects. OHRG does not include vertical relationships, and we argue that it is suitable for the control strategy. For the second, we propose the Agent-Target Depth Relationship Graph (ATDRG), which enables the agent to perceive its distance to the target; ATDRG uses image depth to represent that distance. Given the visual representations constructed by ACRG together with position-encoded global features, the agent can capture the target position and output navigation actions. Experimental results in the simulated environment AI2-Thor demonstrate that ACRG significantly outperforms other state-of-the-art methods in unseen testing environments.
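To make the two relationships concrete, here is a minimal Python sketch of the kind of quantities they describe. It is not the authors' implementation: the abstract does not specify how OHRG or ATDRG are built, so the function names `horizontal_relation_matrix` and `target_depth`, the use of bounding-box centers, the normalization by image width, and the median depth statistic are all assumptions made for illustration.

```python
import numpy as np


def horizontal_relation_matrix(boxes, image_width):
    """Pairwise horizontal offsets between detected objects (hypothetical OHRG-style input).

    boxes: (N, 4) array-like of [x_min, y_min, x_max, y_max] in pixels.
    Returns an (N, N) matrix R where R[i, j] = (cx_j - cx_i) / image_width,
    so a positive entry means object j lies to the right of object i.
    Vertical coordinates are ignored on purpose.
    """
    boxes = np.asarray(boxes, dtype=float)
    cx = (boxes[:, 0] + boxes[:, 2]) / 2.0  # horizontal box centers
    return (cx[None, :] - cx[:, None]) / image_width


def target_depth(depth_map, box):
    """Median depth inside the target's box (hypothetical ATDRG-style input).

    depth_map: (H, W) array of per-pixel depth values.
    box: [x_min, y_min, x_max, y_max] in pixels.
    """
    x0, y0, x1, y1 = (int(round(v)) for v in box)
    patch = depth_map[y0:y1, x0:x1]
    if patch.size == 0:
        raise ValueError("target box does not overlap the depth map")
    return float(np.median(patch))


# Toy scene: two detections in a 100-pixel-wide frame; the second is the target.
boxes = [[0, 0, 20, 20], [60, 10, 100, 50]]
depth = np.full((60, 100), 3.0)
depth[10:50, 60:100] = 1.5  # the target region is closer to the camera

relations = horizontal_relation_matrix(boxes, image_width=100)
distance = target_depth(depth, boxes[1])
```

In this toy scene the matrix is antisymmetric with a zero diagonal, and the depth value summarizes how far the agent is from the target. In a learned system, quantities like these would be inputs to a graph or attention module rather than being used directly.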
