Adversarial Discriminative Heterogeneous Face Recognition


Sep 12, 2017

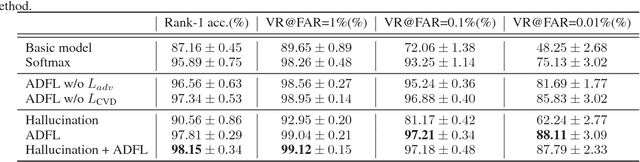

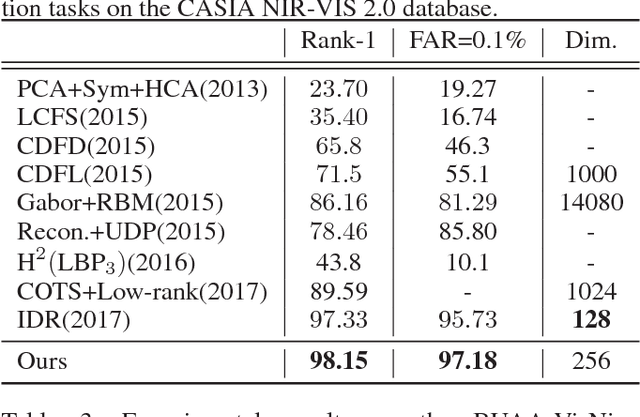

The gap between the sensing patterns of different face modalities remains a challenging problem in heterogeneous face recognition (HFR). This paper proposes an adversarial discriminative feature learning framework that closes the sensing gap through adversarial learning in both the raw-pixel space and the compact feature space. The framework integrates cross-spectral face hallucination and discriminative feature learning into an end-to-end adversarial network. In the pixel space, we use generative adversarial networks to perform cross-spectral face hallucination. An elaborate two-path model is introduced to alleviate the lack of paired images; it takes into account both global structures and local textures. In the feature space, an adversarial loss and a high-order variance discrepancy loss are employed to measure the global and local discrepancy, respectively, between the two heterogeneous distributions. These two losses enhance domain-invariant feature learning and the removal of modality-independent noise. Experimental results on three NIR-VIS databases show that the proposed approach outperforms state-of-the-art HFR methods without requiring a complex network or a large-scale training dataset.
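The abstract does not give the formula for the variance discrepancy loss, so the following is only an illustrative sketch under a stated assumption: that the loss compares second-order statistics of the two modalities by taking the squared L2 distance between the per-dimension variances of a batch of NIR features and a batch of VIS features. The function name `variance_discrepancy` and the list-of-lists feature layout are hypothetical and are not taken from the paper or its code.

```python
from statistics import pvariance


def variance_discrepancy(nir_feats, vis_feats):
    """Squared L2 distance between per-dimension feature variances.

    Assumed form for illustration only; the paper's exact loss may differ.
    Each argument is a batch of feature vectors (a list of equal-length lists).
    """
    dims = len(nir_feats[0])
    total = 0.0
    for d in range(dims):
        # Population variance of dimension d within each modality's batch.
        var_nir = pvariance([f[d] for f in nir_feats])
        var_vis = pvariance([f[d] for f in vis_feats])
        total += (var_nir - var_vis) ** 2
    return total


# Toy batches: two 2-dimensional feature vectors per modality.
nir_batch = [[0.0, 1.0], [2.0, 1.0]]
vis_batch = [[0.0, 0.0], [0.0, 2.0]]
loss = variance_discrepancy(nir_batch, vis_batch)
```

Under this assumption the value is zero whenever both batches have identical per-dimension variances, and it grows as the spread of the two feature distributions diverges. In a training setup it would be minimized alongside the adversarial loss that the abstract describes.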
