Adversarial 3D Human Pose Estimation via Multimodal Depth Supervision


Sep 21, 2018

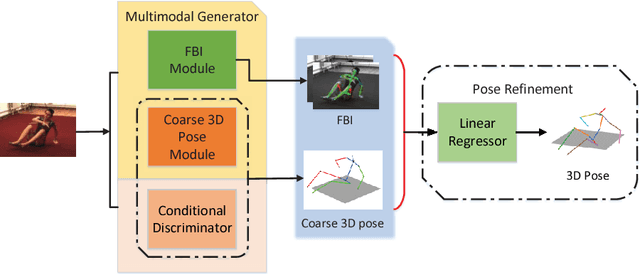

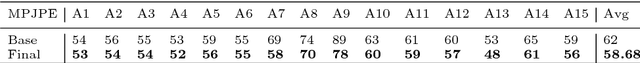

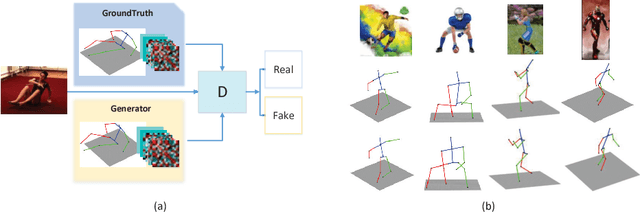

In this paper, we propose a novel deep-learning framework for inferring 3D human poses from a single image, developed as a two-phase approach. In the first phase, a two-branch generator extracts explicit and implicit depth information, one branch for each; an adversarial scheme is employed during training to further improve performance. In the second phase, the implicit and explicit depth information, together with the 2D joints estimated by a widely used estimator, is fed into a deep 3D pose regressor that generates the final pose. Our method achieves an MPJPE of 58.68 mm on the ECCV 2018 3D Human Pose Estimation Challenge.
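The reported metric, MPJPE (mean per-joint position error), is the average Euclidean distance between predicted and ground-truth 3D joint positions. The following is a minimal sketch of that computation, not the challenge's official evaluation code: the function name `mpjpe`, the three-joint pose, and its coordinates are illustrative assumptions, and any alignment step the challenge protocol may apply (such as root-joint alignment) is not stated in the abstract and is omitted here.

```python
import math


def mpjpe(pred, gt):
    """Mean per-joint position error.

    pred, gt: sequences of (x, y, z) joint coordinates in the same units.
    Returns the average Euclidean distance over all joints.
    """
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("pred and gt must be non-empty and list the same joints")
    total = 0.0
    for p, g in zip(pred, gt):
        total += math.dist(p, g)  # Euclidean distance for one joint
    return total / len(pred)


# Hypothetical 3-joint pose in millimetres (illustrative values, not from the paper).
gt = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (0.0, 200.0, 0.0)]
pred = [(3.0, 4.0, 0.0), (100.0, 0.0, 10.0), (0.0, 200.0, 0.0)]

print(mpjpe(pred, gt))
```

In this toy example the per-joint errors are 5 mm, 10 mm, and 0 mm, so the mean is 5 mm. On a full dataset the same quantity would typically also be averaged over all frames.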
