Adaptive Power System Emergency Control using Deep Reinforcement Learning

Mar 09, 2019

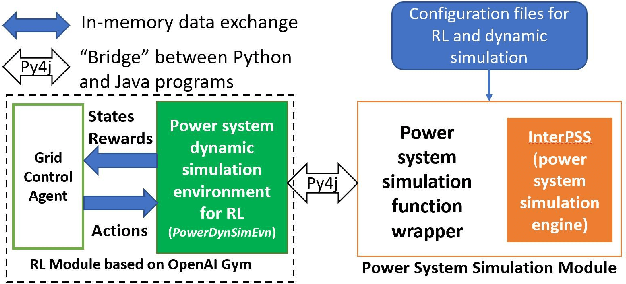

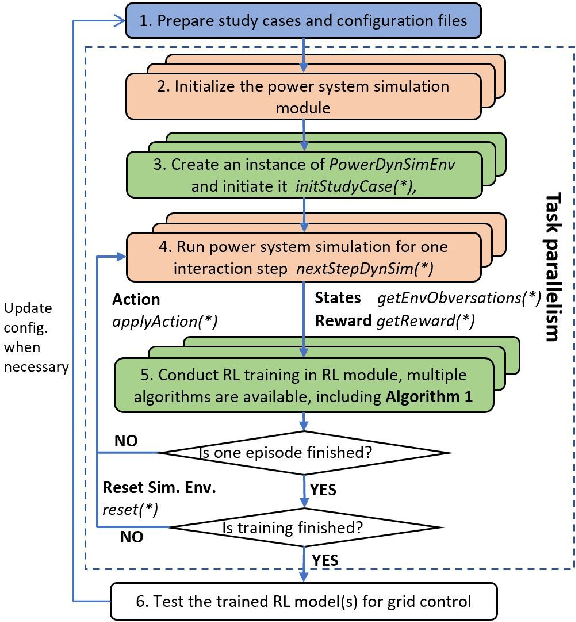

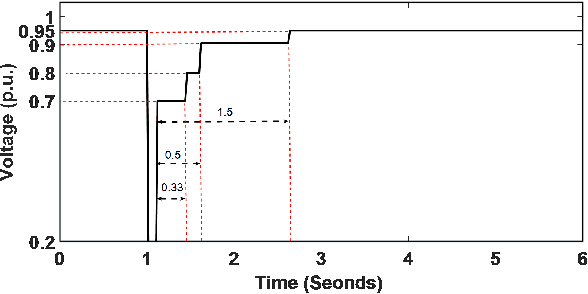

Power system emergency control is generally regarded as the last safety net for grid security and resiliency. Existing emergency control schemes are usually designed off-line, based on either a conceived "worst-case" scenario or a few typical operating scenarios. These schemes face significant adaptiveness and robustness issues as uncertainties and variations increase in modern electrical grids. To address these challenges, this paper develops, for the first time, adaptive emergency control schemes using deep reinforcement learning (DRL), leveraging the high-dimensional feature extraction and non-linear generalization capabilities of DRL for complex power systems. Furthermore, an open-source platform named RLGC has been designed for the first time to assist the development and benchmarking of DRL algorithms for power system control. Details of the platform and of the DRL-based emergency control schemes for generator dynamic braking and under-voltage load shedding are presented. Extensive case studies performed on both a two-area four-machine system and the IEEE 39-bus system demonstrate the excellent performance and robustness of the proposed schemes.
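To make the reinforcement-learning framing concrete, the following is a minimal sketch of how under-voltage load shedding might be posed as an agent-environment loop: the agent observes a bus voltage, chooses whether to shed a block of load, and receives a reward that penalizes both low voltage and shed load. Everything here is an illustrative assumption: the class `ToyLoadSheddingEnv`, its one-bus first-order voltage model, the numeric constants, and the reward weights are hypothetical and are not the RLGC interface or the paper's grid models. A fixed threshold rule stands in for a trained DRL policy.

```python
from dataclasses import dataclass


@dataclass
class ToyLoadSheddingEnv:
    """Hypothetical one-bus toy problem; NOT the RLGC API or a real grid model."""
    v_target: float = 0.95   # assumed voltage (p.u.) counted as recovered
    shed_step: float = 0.25  # assumed fraction of load shed per action
    horizon: int = 20        # assumed episode length in control steps

    def reset(self):
        self.t = 0
        self.load = 1.0      # remaining load fraction
        self.voltage = 0.7   # assumed depressed post-fault voltage (p.u.)
        return self.voltage

    def step(self, action):
        # action: 0 = do nothing, 1 = shed one block of load (if any remains)
        shed = min(self.shed_step, self.load) if action == 1 else 0.0
        self.load -= shed
        # Made-up dynamics: voltage relaxes halfway toward a settling
        # level that rises as load is removed.
        v_settle = 1.0 - 0.2 * self.load
        self.voltage += 0.5 * (v_settle - self.voltage)
        self.t += 1
        # Reward penalizes voltage below target and the amount of load shed.
        reward = -10.0 * max(0.0, self.v_target - self.voltage) - 1.0 * shed
        done = self.t >= self.horizon
        return self.voltage, reward, done


def rollout(env, policy):
    """Run one episode; return (total reward, final voltage)."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        obs, reward, done = env.step(policy(obs))
        total += reward
    return total, obs


if __name__ == "__main__":
    env = ToyLoadSheddingEnv()
    idle = rollout(env, lambda v: 0)
    # Stand-in for a learned policy: shed whenever voltage is below target.
    shed = rollout(env, lambda v: 1 if v < env.v_target else 0)
    print("do nothing:", idle)
    print("threshold shedding:", shed)
```

In this toy setting the threshold rule recovers the voltage at the cost of shed load, while doing nothing leaves the voltage depressed. A DRL agent would replace the threshold rule with a policy learned from the reward signal.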
