Adaptive Fine-Grained Predicates Learning for Scene Graph Generation


Jul 11, 2022

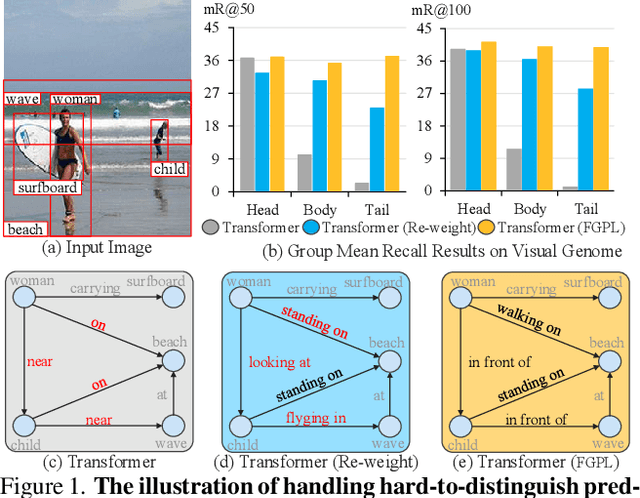

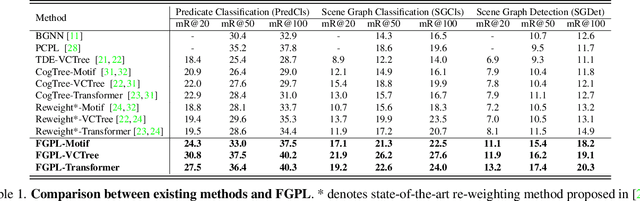

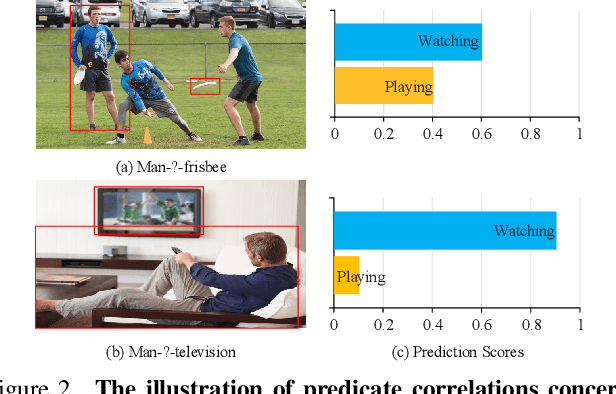

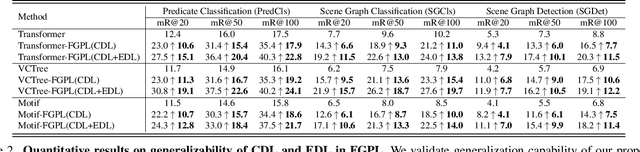

The performance of current Scene Graph Generation (SGG) models is severely hampered by hard-to-distinguish predicates, e.g., woman-on/standing on/walking on-beach. Because general SGG models tend to predict head predicates while re-balancing strategies favor tail categories, neither handles hard-to-distinguish predicates appropriately. To tackle this issue, and inspired by fine-grained image classification, which focuses on differentiating hard-to-distinguish objects, we propose Adaptive Fine-Grained Predicates Learning (FGPL-A), which aims at differentiating hard-to-distinguish predicates for SGG. First, we introduce an Adaptive Predicate Lattice (PL-A) to identify hard-to-distinguish predicates; it adaptively explores predicate correlations in keeping with the model's dynamic learning pace. In practice, PL-A is initialized from the SGG dataset and refined using the model's predictions on the current mini-batch. Using PL-A, we propose an Adaptive Category Discriminating Loss (CDL-A) and an Adaptive Entity Discriminating Loss (EDL-A), which progressively regularize the model's discriminating process with fine-grained supervision that reflects the model's dynamic learning status, ensuring a balanced and efficient learning process. Extensive experimental results show that our model-agnostic strategy significantly boosts the performance of benchmark models on the VG-SGG and GQA-SGG datasets by up to 175% and 76% on Mean Recall@100, achieving new state-of-the-art performance. Moreover, experiments on Sentence-to-Graph Retrieval and Image Captioning tasks further demonstrate the practicability of our method.
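To make the idea of a predicate lattice that starts from dataset statistics and is refined from mini-batch predictions more concrete, here is a minimal sketch in Python with NumPy. It is not the paper's formulation: the row-normalized confusion-style matrix, the exponential-moving-average update, the `momentum` and `threshold` parameters, and the function names `init_lattice`, `refine_lattice`, and `hard_to_distinguish` are all assumptions chosen for illustration.

```python
import numpy as np


def init_lattice(num_predicates, prior_counts=None):
    """Build a row-normalized correlation matrix (hypothetical stand-in for PL-A).

    prior_counts[i, j] is an assumed dataset statistic: how often predicate i
    co-occurs with, or is confused with, predicate j. Without a prior, or for
    rows with no counts, the identity row is used.
    """
    identity = np.eye(num_predicates)
    if prior_counts is None:
        return identity
    counts = np.asarray(prior_counts, dtype=float)
    row_sums = counts.sum(axis=1, keepdims=True)
    lattice = counts / np.where(row_sums > 0, row_sums, 1.0)
    empty = row_sums[:, 0] == 0
    lattice[empty] = identity[empty]
    return lattice


def refine_lattice(lattice, labels, predictions, momentum=0.9):
    """Blend the lattice with the confusion statistics of one mini-batch.

    The moving-average update is an assumption made for this sketch.
    Rows for predicates absent from the batch are left unchanged.
    """
    num = lattice.shape[0]
    batch_counts = np.zeros((num, num))
    # Count (ground-truth, predicted) pairs, accumulating repeated pairs.
    np.add.at(batch_counts, (labels, predictions), 1.0)
    row_sums = batch_counts.sum(axis=1, keepdims=True)
    seen = row_sums[:, 0] > 0
    updated = lattice.copy()
    batch_rates = batch_counts[seen] / row_sums[seen]
    updated[seen] = momentum * lattice[seen] + (1.0 - momentum) * batch_rates
    return updated


def hard_to_distinguish(lattice, predicate, threshold=0.2):
    """Return the other predicates whose correlation with `predicate` is high."""
    row = lattice[predicate]
    return [j for j in range(len(row)) if j != predicate and row[j] >= threshold]


# Toy usage with three predicates: 0 = "on", 1 = "standing on", 2 = "walking on".
lattice = init_lattice(3)
labels = np.array([0, 0, 0, 0, 1])       # ground-truth predicates in the batch
predictions = np.array([0, 1, 1, 1, 1])  # model predictions for the same samples
refined = refine_lattice(lattice, labels, predictions, momentum=0.5)
confusable_with_on = hard_to_distinguish(refined, predicate=0)
```

In a training loop, a set like `confusable_with_on` could be used to decide which negative predicates a discriminating loss should emphasize for a given ground-truth predicate. How CDL-A and EDL-A actually consume PL-A is not specified in the abstract, so that step is left out of the sketch.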
