Active Robot Vision for Distant Object Change Detection: A Lightweight Training Simulator Inspired by Multi-Armed Bandits

Paper and Code

Jul 26, 2023

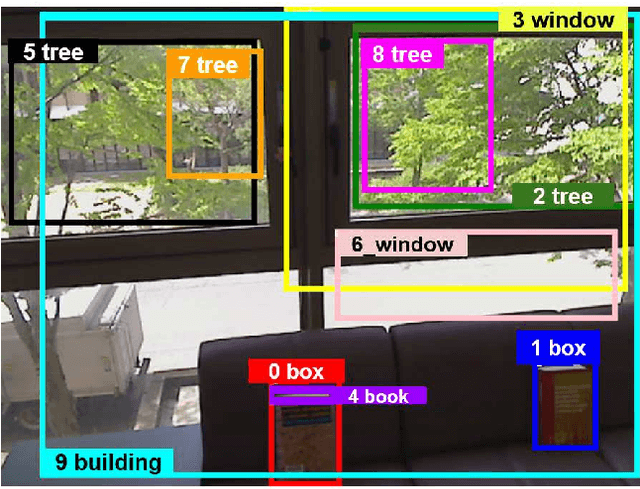

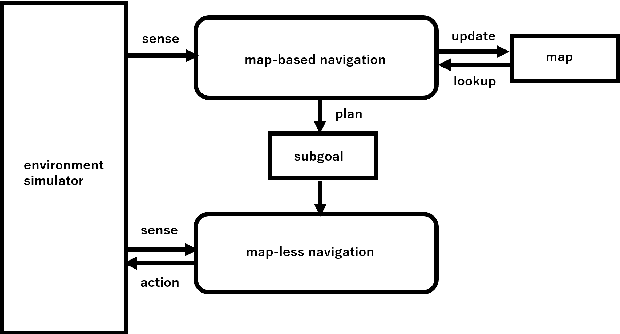

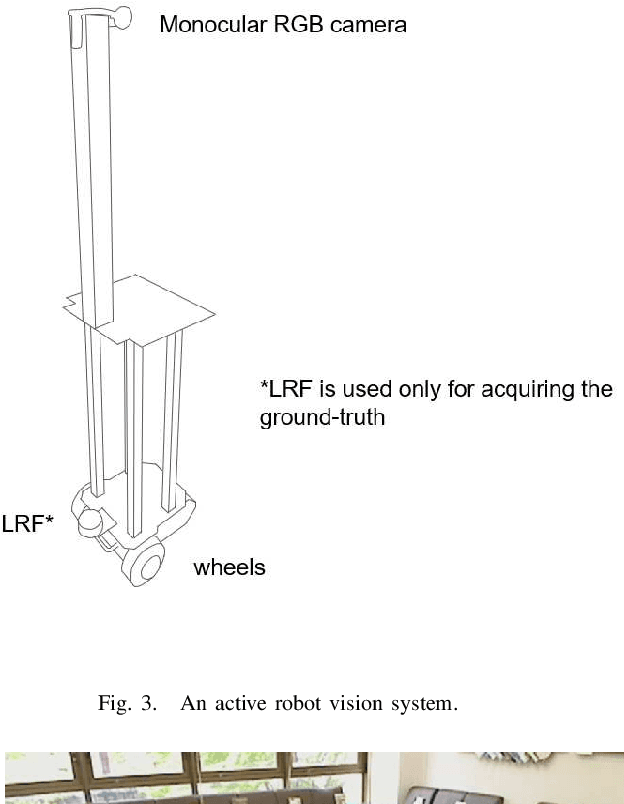

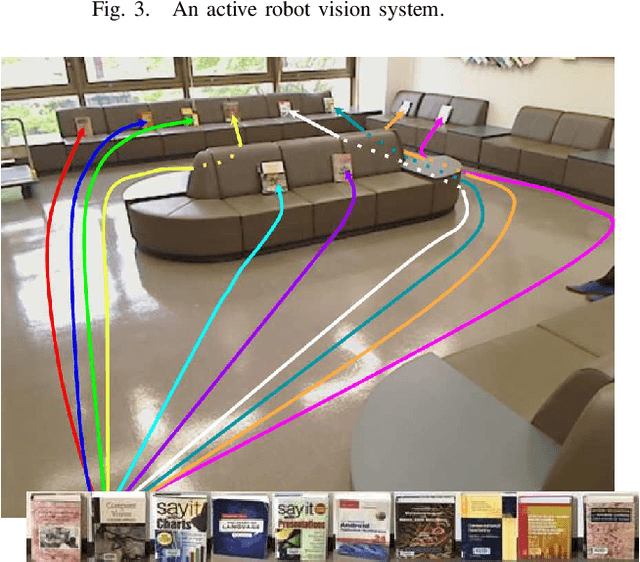

In ground-view object change detection, the recently emerging map-less navigation has great potential as a means of navigating a robot to distantly detected objects and identifying their change states (appear/disappear/no-change) from high-resolution imagery. However, the naive brute-force strategy of navigating to every distant object incurs sense/plan/act costs proportional to the number of objects. In this work, we study the new problem of "Which distant objects should be prioritized for map-less navigation?" and, in order to speed up the R&D cycle, propose a highly simplified approach that is easy to implement and easy to extend. In our approach, a new layer called map-based navigation is added on top of map-less navigation, and together the two layers constitute a hierarchical planner. First, a dataset consisting of $N$ view sequences is acquired by a real robot via map-less navigation. Then, an environment simulator is built to simulate a simple action planning problem: "Which view sequence should the robot select next?". Next, a solver is built by analogy to the multi-armed bandit problem: "Which arm should the player select next?". Finally, the effectiveness of the proposed framework is verified using the semantically non-trivial scenario "sofa as bookshelf".
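The analogy between selecting a view sequence and selecting a bandit arm can be illustrated with a minimal sketch. The abstract does not say which bandit algorithm or reward definition the authors use, so everything below is an assumption made for illustration: the solver is the standard UCB1 rule, the reward is a binary "change observed" signal, and the names `ViewSequenceSimulator`, `change_probs`, and `ucb1` are hypothetical.

```python
import math
import random


class ViewSequenceSimulator:
    """Hypothetical simulator: each arm stands for one pre-recorded view sequence."""

    def __init__(self, change_probs, seed=0):
        # change_probs[i] is an assumed probability that advancing along
        # view sequence i reveals an object change (not from the paper).
        self.change_probs = change_probs
        self.rng = random.Random(seed)

    def pull(self, arm):
        # Reward 1.0 if a change is observed on this step, else 0.0.
        return 1.0 if self.rng.random() < self.change_probs[arm] else 0.0


def ucb1(sim, n_arms, budget):
    """Standard UCB1: returns per-arm pull counts and reward sums."""
    counts = [0] * n_arms
    sums = [0.0] * n_arms
    for t in range(1, budget + 1):
        if t <= n_arms:
            arm = t - 1  # try every view sequence once first
        else:
            # Pick the arm with the highest mean reward plus exploration bonus.
            arm = max(
                range(n_arms),
                key=lambda a: sums[a] / counts[a]
                + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = sim.pull(arm)
        counts[arm] += 1
        sums[arm] += reward
    return counts, sums


if __name__ == "__main__":
    sim = ViewSequenceSimulator(change_probs=[0.05, 0.6, 0.1], seed=0)
    counts, sums = ucb1(sim, n_arms=3, budget=300)
    print("pulls per view sequence:", counts)
```

Under these assumptions, the action budget concentrates on the view sequences most likely to reveal a change instead of being spread evenly across all $N$ of them, which is the cost saving that the prioritization question targets.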
