Achieving Domain Robustness in Stereo Matching Networks by Removing Shortcut Learning


Jun 15, 2021

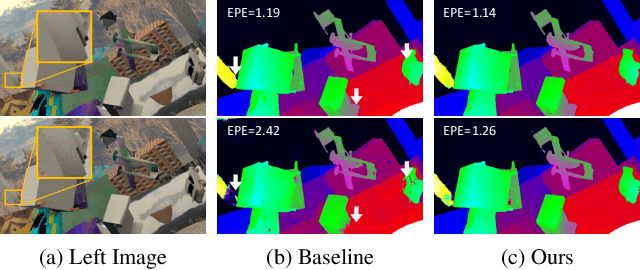

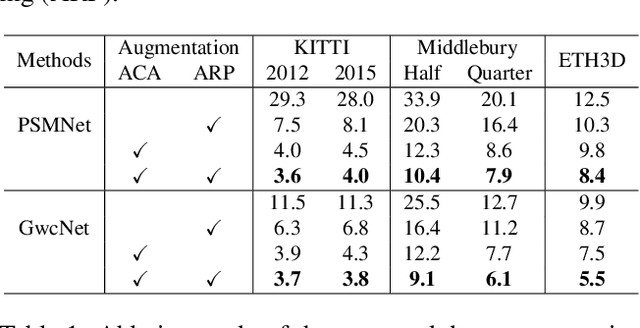

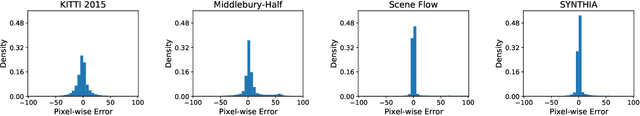

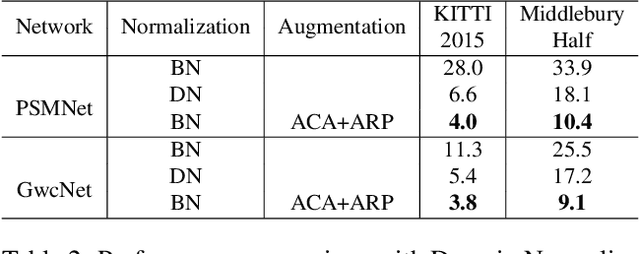

Learning-based stereo matching and depth estimation networks currently achieve impressive results on public benchmarks. However, state-of-the-art networks often fail to generalize from synthetic imagery to more challenging real data domains. This paper attempts to uncover the key ingredients of domain robustness, and in particular of the generalization success of stereo matching networks, by analyzing how learning from synthetic images affects performance on real data. We provide evidence that feature learning by a stereo matching network in the synthetic domain is heavily influenced by two "shortcuts" present in the synthetic data: (1) identical local statistics (RGB colour features) between matching pixels in the synthetic stereo images and (2) a lack of realism in the synthetic textures of 3D objects simulated in game engines. We show that by removing these shortcuts, we can achieve domain robustness in state-of-the-art stereo matching frameworks and obtain remarkable performance on multiple realistic datasets, even though the networks were trained only on synthetic data. Our experimental results indicate that eliminating shortcuts from the synthetic data is key to achieving domain-invariant generalization between the synthetic and real data domains.
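To make shortcut (1) concrete, here is a minimal sketch under stated assumptions. It builds a toy stereo pair by cropping the same random texture twice (a hypothetical stand-in for a game-engine render), so matching pixels carry identical RGB values and a plain absolute-difference matching cost drops to exactly zero at the true disparity. It then applies an independent colour jitter to each view. This asymmetric per-channel gain and gamma, with arbitrary ranges, is only one illustrative way to break identical local statistics; the abstract does not say which transformation the authors use. All function names and parameters here are hypothetical.

```python
import numpy as np

def make_synthetic_pair(height, width, disparity, seed=0):
    # Hypothetical toy "rendering": both views crop the same random texture,
    # so left[y, x] and right[y, x - disparity] hold identical RGB values.
    rng = np.random.default_rng(seed)
    scene = rng.random((height, width + disparity, 3))
    left = scene[:, :width, :]
    right = scene[:, disparity:disparity + width, :]
    return left, right

def matching_costs(left, right, max_disp):
    # Mean absolute RGB difference for each candidate disparity k,
    # comparing left[y, x] with right[y, x - k] over columns x >= max_disp.
    width = left.shape[1]
    costs = []
    for k in range(max_disp + 1):
        diff = np.abs(left[:, max_disp:, :] - right[:, max_disp - k:width - k, :])
        costs.append(diff.mean())
    return np.array(costs)

def asymmetric_colour_jitter(img, rng):
    # Assumed augmentation: independent per-channel gain and a global gamma.
    # Drawn separately for each view, so matching pixels no longer share colour.
    gain = rng.uniform(0.7, 1.3, size=3)
    gamma = rng.uniform(0.7, 1.5)
    return np.clip(img * gain, 0.0, 1.0) ** gamma

true_disp, max_disp = 5, 16
left, right = make_synthetic_pair(32, 64, true_disp)
clean_costs = matching_costs(left, right, max_disp)

rng = np.random.default_rng(1)
left_aug = asymmetric_colour_jitter(left, rng)   # one random draw for the left view
right_aug = asymmetric_colour_jitter(right, rng)  # a different draw for the right view
aug_costs = matching_costs(left_aug, right_aug, max_disp)

print("clean cost at true disparity:", clean_costs[true_disp])
print("jittered cost at true disparity:", aug_costs[true_disp])
```

On the clean pair the cost at the true disparity is exactly zero, so raw colour equality alone identifies the match; after asymmetric jitter that exact-zero cue disappears, and a learned matcher would have to rely on features that tolerate photometric differences between views.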
