Absum: Simple Regularization Method for Reducing Structural Sensitivity of Convolutional Neural Networks

Paper and Code

Sep 19, 2019

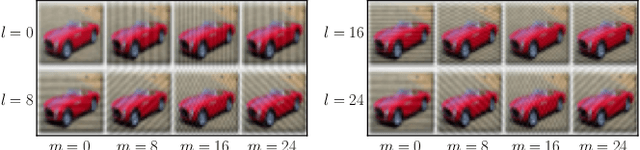

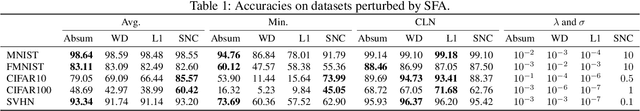

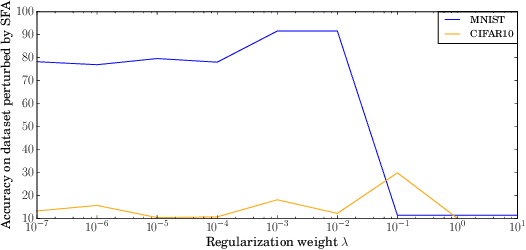

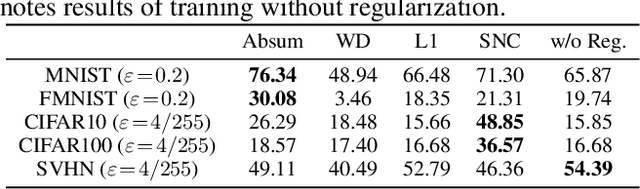

We propose Absum, which is a regularization method for improving adversarial robustness of convolutional neural networks (CNNs). Although CNNs can accurately recognize images, recent studies have shown that the convolution operations in CNNs commonly have structural sensitivity to specific noise composed of Fourier basis functions. By exploiting this sensitivity, they proposed a simple black-box adversarial attack: Single Fourier attack. To reduce structural sensitivity, we can use regularization of convolution filter weights since the sensitivity of linear transform can be assessed by the norm of the weights. However, standard regularization methods can prevent minimization of the loss function because they impose a tight constraint for obtaining high robustness. To solve this problem, Absum imposes a loose constraint; it penalizes the absolute values of the summation of the parameters in the convolution layers. Absum can improve robustness against single Fourier attack while being as simple and efficient as standard regularization methods (e.g., weight decay and L1 regularization). Our experiments demonstrate that Absum improves robustness against single Fourier attack more than standard regularization methods. Furthermore, we reveal that robust CNNs with Absum are more robust against transferred attacks due to decreasing the common sensitivity and against high-frequency noise than standard regularization methods. We also reveal that Absum can improve robustness against gradient-based attacks (projected gradient descent) when used with adversarial training.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge