A Unified Collaborative Representation Learning for Neural-Network based Recommender Systems

Paper and Code

May 19, 2022

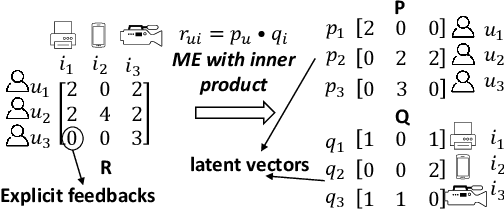

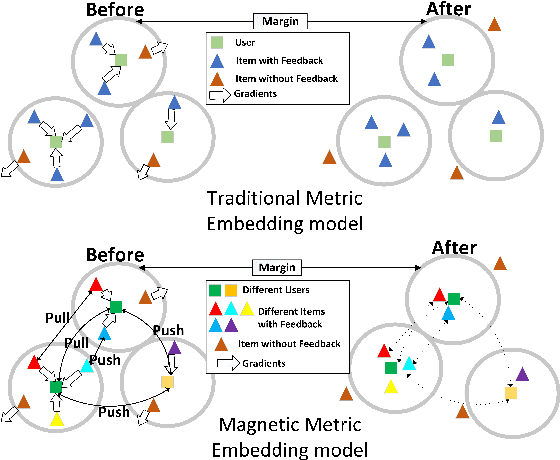

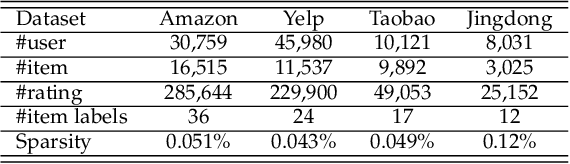

Most neural-network based recommender systems (NN-RSs) focus on accuracy by building representations from direct user-item interactions (e.g., the user-item rating matrix) while ignoring the underlying relatedness among users and among items (e.g., users who give the same ratings to the same items should be embedded into similar representations), which is an ideological disadvantage. On the other hand, ME models directly employ the inner product as the default metric in the loss function, which cannot project users and items into a proper latent space; this is a methodological disadvantage. In this paper, we propose a supervised collaborative representation learning model, Magnetic Metric Learning (MML), to map users and items into a unified latent vector space, enhancing representation learning for NN-RSs. MML uses dual triplets to model not only the observed relationships between users and items but also the underlying relationships among users and among items, which overcomes the ideological disadvantage. Specifically, a modified metric-based dual loss function is proposed in MML to gather similar entities and disperse dissimilar ones. With MML, all relationships (user to user, item to item, user to item) can be compared directly under the weighted metric, which overcomes the methodological disadvantage. We conduct extensive experiments on four real-world datasets with large item spaces. The results demonstrate that MML learns a proper unified latent space for representations from the user-item matrix with high accuracy and effectiveness, and yields an average performance gain of 17% over state-of-the-art RS models.
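To make the idea of gathering similar entities and dispersing dissimilar ones concrete, the following is a minimal sketch of a generic distance-based triplet hinge loss applied to two kinds of triplets: user-item and user-user. It is not the MML loss itself, whose exact form and weighted metric are not given in this abstract. The function names, the squared Euclidean distance, the `margin`, and the `weight` parameter are all illustrative assumptions.

```python
# Illustrative sketch only: a generic triplet hinge loss, NOT the paper's MML loss.
# All names, the distance function, margin, and weight are assumptions.

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_hinge(anchor, positive, negative, margin=1.0):
    """Zero when the positive is closer to the anchor than the negative by at least `margin`."""
    return max(0.0, sq_dist(anchor, positive) - sq_dist(anchor, negative) + margin)


def dual_triplet_loss(user_item_triplets, user_user_triplets, margin=1.0, weight=0.5):
    """Sum hinge losses over user-item triplets and (weighted) user-user triplets."""
    ui = sum(triplet_hinge(a, p, n, margin) for a, p, n in user_item_triplets)
    uu = sum(triplet_hinge(a, p, n, margin) for a, p, n in user_user_triplets)
    return ui + weight * uu


# Toy 2-D embeddings living in one shared space (hypothetical values).
user_a = [0.0, 0.0]
user_b = [0.1, 0.0]      # rates items like user_a, so it should sit nearby
user_c = [3.0, 3.0]      # dissimilar user
liked_item = [0.0, 0.5]  # item user_a interacted with
unseen_item = [2.0, 2.0]

loss = dual_triplet_loss(
    [(user_a, liked_item, unseen_item)],  # observed user-item relationship
    [(user_a, user_b, user_c)],           # underlying user-user relationship
)
violated = triplet_hinge(user_a, unseen_item, liked_item)
```

Because users and items share one space in this sketch, the same distance function compares user to user, item to item, and user to item. An item-item term could be added in the same way.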
