A two-level solution to fight against dishonest opinions in recommendation-based trust systems


Jun 09, 2020

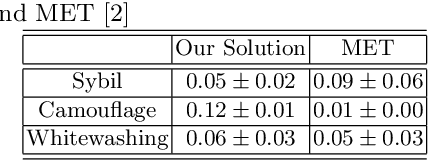

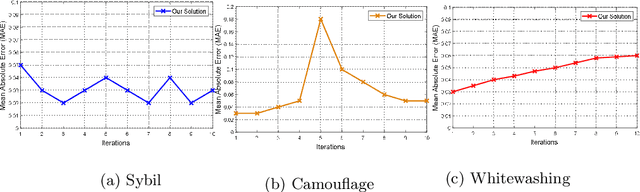

In this paper, we propose a mechanism for handling dishonest opinions in recommendation-based trust models at both the collection and processing levels. We consider a scenario in which an agent requests recommendations from multiple parties in order to build trust toward another agent. At the collection level, we allow agents to self-assess the accuracy of their recommendations and decide autonomously whether to participate in the recommendation process. At the processing level, we propose a recommendation aggregation technique that is resilient to collusion attacks, followed by a credibility update mechanism for the participating agents. The originality of our work lies in addressing dishonest opinions at both levels, which provides better and more persistent protection against dishonest recommenders. Experiments conducted on the Epinions dataset show that our solution protects the recommendation process against Sybil attacks better than a competing model that derives the optimal network of advisors from the agents' trust values.
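The abstract does not give the concrete rules used at each level, so the following is only a minimal Python sketch of the two-level flow under stated assumptions, not the authors' method. The `Recommender` class, the `min_accuracy` threshold, the credibility-weighted median used as a stand-in robust aggregator, and the `rate` parameter of the credibility update are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Recommender:
    name: str
    opinion: float          # recommended trust value in [0, 1]
    self_accuracy: float    # self-assessed accuracy in [0, 1] (hypothetical)
    credibility: float = 0.5


def collect(recommenders, min_accuracy=0.6):
    """Collection level: keep only agents whose self-assessed accuracy
    meets the (assumed) participation threshold."""
    return [r for r in recommenders if r.self_accuracy >= min_accuracy]


def weighted_median(participants):
    """Processing level: credibility-weighted median of the opinions.
    This is a stand-in robust aggregator, not the paper's technique."""
    ranked = sorted(participants, key=lambda r: r.opinion)
    half = sum(r.credibility for r in ranked) / 2.0
    running = 0.0
    for r in ranked:
        running += r.credibility
        if running >= half:
            return r.opinion
    raise ValueError("no participants")


def update_credibility(participants, aggregate, rate=0.2):
    """Move each credibility toward the agent's agreement with the
    aggregate (assumed update rule)."""
    for r in participants:
        agreement = 1.0 - abs(r.opinion - aggregate)
        r.credibility += rate * (agreement - r.credibility)


agents = [
    Recommender("a", opinion=0.80, self_accuracy=0.9, credibility=0.7),
    Recommender("b", opinion=0.75, self_accuracy=0.8, credibility=0.6),
    Recommender("c", opinion=0.10, self_accuracy=0.9, credibility=0.4),  # colluder
    Recommender("d", opinion=0.10, self_accuracy=0.9, credibility=0.4),  # colluder
    Recommender("e", opinion=0.50, self_accuracy=0.2, credibility=0.5),  # opts out
]

participants = collect(agents)
aggregate = weighted_median(participants)
update_credibility(participants, aggregate)
```

In this toy run, the two colluding agents push an identical low opinion, but the weighted median still lands on an honest agent's value because the honest agents hold most of the credibility mass. The update step then lowers the colluders' credibility, so their influence shrinks further in later rounds.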
