A Tight and Unified Analysis of Extragradient for a Whole Spectrum of Differentiable Games

Paper and Code

Jun 24, 2019

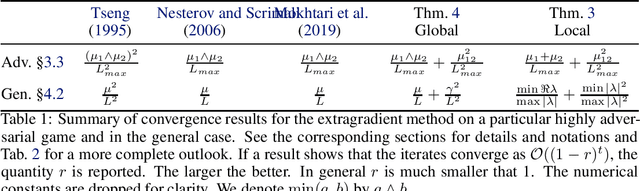

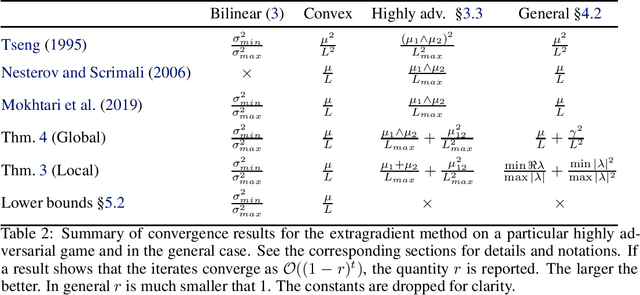

We consider differentiable games: multi-objective minimization problems, where the goal is to find a Nash equilibrium. The machine learning community has recently started using extrapolation-based variants of the gradient method. A prime example is the extragradient method, which yields linear convergence in cases like bilinear games, where the standard gradient method fails. The full benefits of extrapolation-based methods are not known: i) there is no unified analysis for a large class of games that includes both strongly monotone and bilinear games; ii) it is not known whether the rate achieved by extragradient can be improved, e.g., by considering multiple extrapolation steps. We answer these questions through a new analysis of the extragradient's local and global convergence properties. Our analysis covers the whole range of settings between purely bilinear and strongly monotone games. It reveals that extragradient converges via different mechanisms at these extremes; in between, it exploits the most favorable mechanism for the given problem. We then present lower bounds on the rate of convergence for a wide class of algorithms with any number of extrapolations. Our bounds prove that the extragradient achieves the optimal rate in this class, and that our upper bounds are tight. Our precise characterization of the extragradient's convergence behavior in games shows that, unlike in convex optimization, the extragradient method may be much faster than the gradient method.
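The contrast between the gradient method and extragradient on a bilinear game can be illustrated with a toy example. The sketch below is not taken from the paper: the scalar game f(x, y) = x·y (x minimizes, y maximizes), the step size 0.5, the starting point, and all function names are assumptions chosen for illustration only. Its unique Nash equilibrium is (0, 0).

```python
import math

def vector_field(x, y):
    # Illustrative game: f(x, y) = x * y, x minimizes and y maximizes.
    # The game's vector field is (df/dx, -df/dy) = (y, -x).
    return y, -x

def gradient_step(x, y, eta):
    # Simultaneous gradient step: move against the vector field.
    gx, gy = vector_field(x, y)
    return x - eta * gx, y - eta * gy

def extragradient_step(x, y, eta):
    # Extrapolation: take a look-ahead gradient step from (x, y).
    gx, gy = vector_field(x, y)
    x_half, y_half = x - eta * gx, y - eta * gy
    # Update: step from the ORIGINAL point using the look-ahead field.
    gx, gy = vector_field(x_half, y_half)
    return x - eta * gx, y - eta * gy

def distance_after(step, eta=0.5, iters=100, start=(1.0, 1.0)):
    # Distance to the equilibrium (0, 0) after `iters` iterations.
    x, y = start
    for _ in range(iters):
        x, y = step(x, y, eta)
    return math.hypot(x, y)

gd_dist = distance_after(gradient_step)
eg_dist = distance_after(extragradient_step)
print(f"gradient method: {gd_dist:.3e}")
print(f"extragradient:   {eg_dist:.3e}")
```

On this toy game, each gradient step multiplies the squared distance to the equilibrium by 1 + η², so the iterates spiral outward for any step size, while each extragradient step multiplies it by 1 − η² + η⁴, which is below 1 for 0 < η < 1 and gives linear convergence. This only illustrates the bilinear extreme; it says nothing about the rates or lower bounds established in the paper.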
