A Survey on the Robustness of Feature Importance and Counterfactual Explanations

Paper and Code

Oct 30, 2021

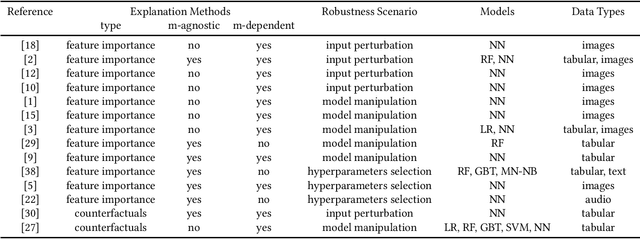

There exist several methods that aim to address the crucial task of understanding the behaviour of AI/ML models. Arguably, the most popular among them are local explanations that focus on investigating model behaviour for individual instances. Several methods have been proposed for local analysis, but relatively lesser effort has gone into understanding if the explanations are robust and accurately reflect the behaviour of underlying models. In this work, we present a survey of the works that analysed the robustness of two classes of local explanations (feature importance and counterfactual explanations) that are popularly used in analysing AI/ML models in finance. The survey aims to unify existing definitions of robustness, introduces a taxonomy to classify different robustness approaches, and discusses some interesting results. Finally, the survey introduces some pointers about extending current robustness analysis approaches so as to identify reliable explainability methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge