A Semantic-Loss Function Modeling Framework With Task-Oriented Machine Learning Perspectives


Mar 12, 2025

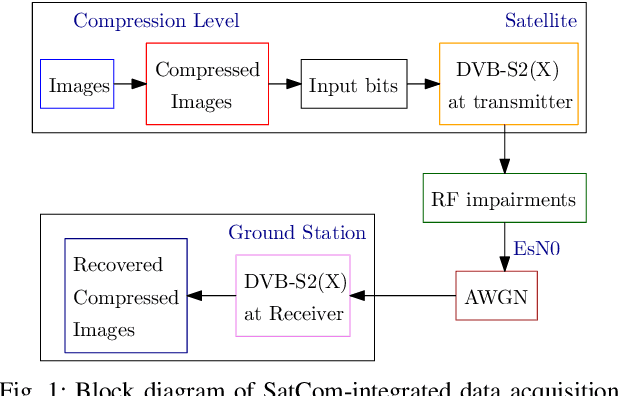

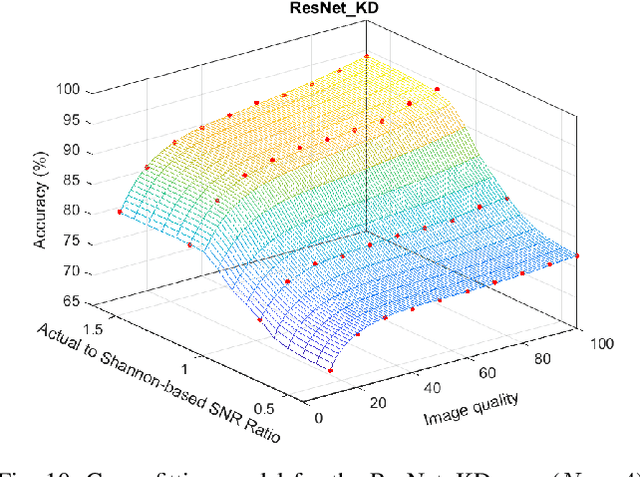

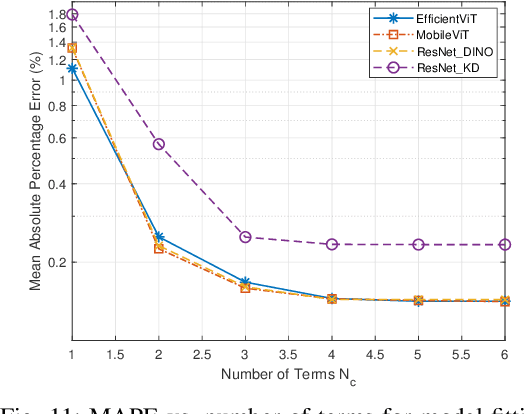

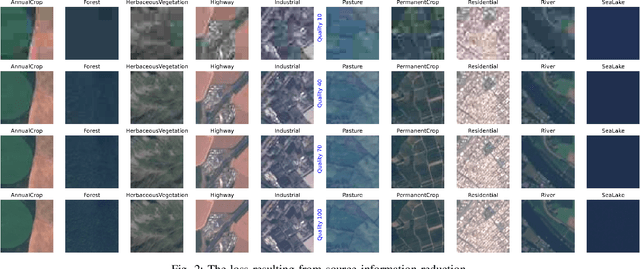

Machine learning (ML) has significantly enhanced Earth Observation (EO) systems by enabling the extraction of actionable insights from complex datasets. However, the performance of data-driven EO applications depends heavily on data collection and transmission, where limited satellite bandwidth and latency constraints can prevent the original data from being transmitted in full to the receivers. Semantic Communication (SC) offers a promising solution by prioritizing the transmission of essential data semantics over raw information. Implementing SC for EO systems requires a thorough understanding of how data processing and communication channel conditions affect semantic loss at the processing center. This work proposes a novel data-fitting framework that empirically models semantic loss using real-world EO datasets and domain-specific insights. The framework quantifies two primary types of semantic loss: (1) source coding loss, assessed via a data quality indicator that measures the impact of processing on raw source data, and (2) transmission loss, evaluated by comparing practical transmission performance against the Shannon limit. Semantic losses are estimated by evaluating the accuracy of EO applications with four task-oriented ML models (EfficientViT, MobileViT, ResNet50-DINO, and ResNet8-KD) on lossy image datasets under varying channel conditions and compression ratios. These results underpin a framework for efficient semantic-loss modeling in bandwidth-constrained EO scenarios, enabling more reliable and effective operations.
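To make the transmission-loss idea concrete, the sketch below compares a practical link rate against the Shannon limit $C = B \log_2(1 + \mathrm{SNR})$. It is only an illustration under stated assumptions: the abstract does not give the paper's exact metric, so the AWGN channel model, the function names `shannon_capacity_bps` and `transmission_gap`, and the definition of the gap as the unused fraction of capacity are all hypothetical choices made here.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon limit C = B * log2(1 + SNR), assuming an AWGN channel."""
    snr_linear = 10.0 ** (snr_db / 10.0)  # convert dB to a linear power ratio
    return bandwidth_hz * math.log2(1.0 + snr_linear)


def transmission_gap(achieved_rate_bps: float, bandwidth_hz: float, snr_db: float) -> float:
    """Fraction of the Shannon limit left unused by a practical link.

    Hypothetical indicator for illustration only:
    0.0 means the link operates at the limit, 1.0 means nothing is delivered.
    """
    capacity = shannon_capacity_bps(bandwidth_hz, snr_db)
    if capacity <= 0.0:
        raise ValueError("capacity must be positive")
    if not 0.0 <= achieved_rate_bps <= capacity:
        raise ValueError("achieved rate must lie between 0 and the Shannon limit")
    return 1.0 - achieved_rate_bps / capacity


if __name__ == "__main__":
    # Example values are arbitrary and not taken from the paper.
    bandwidth_hz = 1e6
    snr_db = 0.0
    print(shannon_capacity_bps(bandwidth_hz, snr_db))
    print(transmission_gap(5e5, bandwidth_hz, snr_db))
```

In a data-fitting setting like the one the abstract describes, an indicator of this kind could serve as one input variable, alongside a data quality indicator for source coding, when modeling how task accuracy degrades.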
