A relic sketch extraction framework based on detail-aware hierarchical deep network


Jan 17, 2021

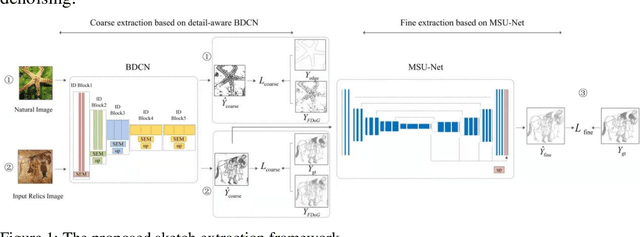

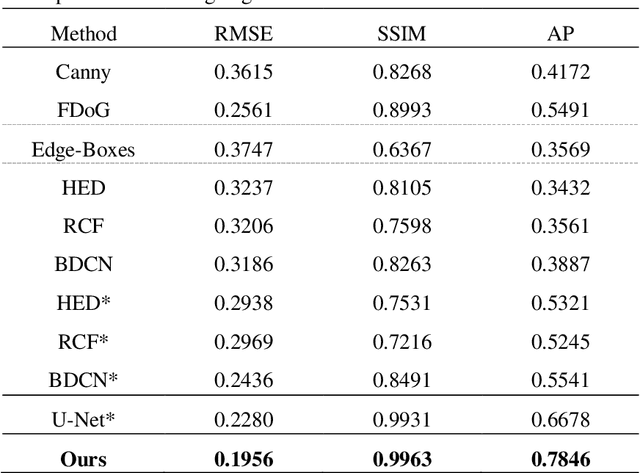

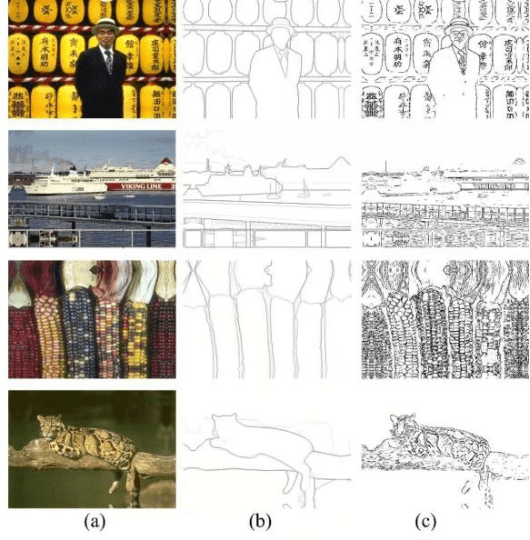

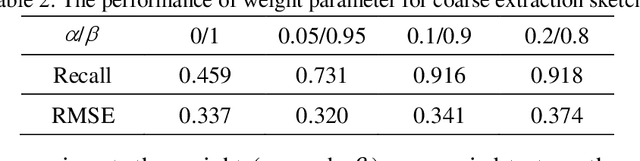

As the first step in restoring painted relics, sketch extraction plays an important role in cultural research. However, sketch extraction is hampered by severe disease corrosion of the relics, which results in broken lines and noise. To overcome these problems, we propose a deep learning-based hierarchical sketch extraction framework for painted cultural relics. We divide the sketch extraction process into two stages: coarse extraction and fine extraction. In the coarse extraction stage, we develop a novel detail-aware bi-directional cascade network that integrates flow-based difference-of-Gaussians (FDoG) edge detection and a bi-directional cascade network (BDCN) under a transfer learning framework. It not only uses a pre-training strategy to alleviate the need for large datasets when training deep networks, but also guides the network to learn detail characteristics using prior knowledge from FDoG. For the fine extraction stage, we design a new multiscale U-Net (MSU-Net) to effectively remove disease noise and refine the sketch. Specifically, all the features extracted from multiple intermediate layers in the decoder of MSU-Net are fused for sketch prediction. Experimental results show that the proposed method outperforms seven other state-of-the-art methods in both visual quality and quantitative metrics, and that it can also handle complex backgrounds.
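The fusion of decoder features at several scales can be illustrated with a minimal NumPy sketch. It is not the MSU-Net implementation: the abstract does not say how the features are fused, so the nearest-neighbour upsampling, the channel concatenation, the 1x1-convolution-style weighting, the sigmoid output, and the names `upsample_nearest` and `fuse_multiscale` are all assumptions made for illustration.

```python
import numpy as np


def upsample_nearest(feat, factor):
    """Nearest-neighbour upsampling of a (C, H, W) array by an integer factor."""
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)


def fuse_multiscale(features, weights, bias=0.0):
    """Fuse feature maps of different resolutions into one (H, W) probability map.

    features: list of (C_i, H_i, W_i) arrays. Assumes every map is an integer
              factor smaller than the finest one, with the same factor for
              height and width (hypothetical layout, not from the paper).
    weights:  1-D array with one weight per concatenated channel, acting
              like a 1x1 convolution with a single output channel.
    """
    target_h = max(f.shape[1] for f in features)
    # Bring every map to the finest resolution.
    upsampled = [upsample_nearest(f, target_h // f.shape[1]) for f in features]
    # Stack all channels: shape (sum of C_i, H, W).
    stacked = np.concatenate(upsampled, axis=0)
    # Weighted sum over channels, then squash to (0, 1).
    logits = np.tensordot(weights, stacked, axes=([0], [0])) + bias
    return 1.0 / (1.0 + np.exp(-logits))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Three stand-in decoder outputs, coarse to fine.
    decoder_feats = [
        rng.standard_normal((4, 8, 8)),
        rng.standard_normal((2, 16, 16)),
        rng.standard_normal((1, 32, 32)),
    ]
    w = rng.standard_normal(7)  # 4 + 2 + 1 concatenated channels
    sketch = fuse_multiscale(decoder_feats, w)
    print(sketch.shape)
```

In a trained network the weights would be learned and the upsampling might be bilinear or a transposed convolution; the point here is only that every decoder scale contributes to the final per-pixel prediction.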
