A Real-Time Cross-modality Correlation Filtering Method for Referring Expression Comprehension

Paper and Code

Sep 24, 2019

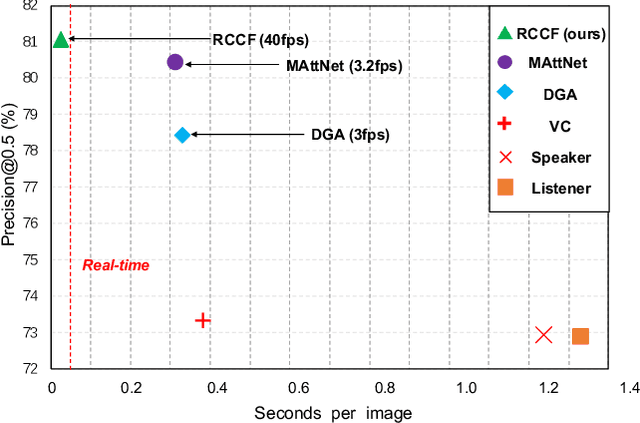

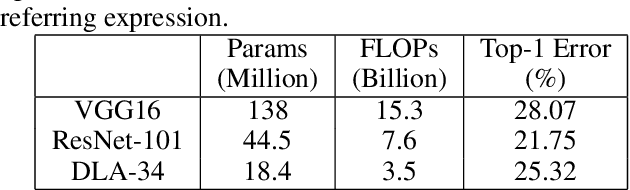

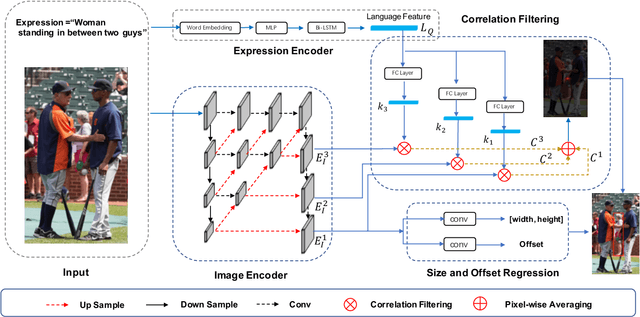

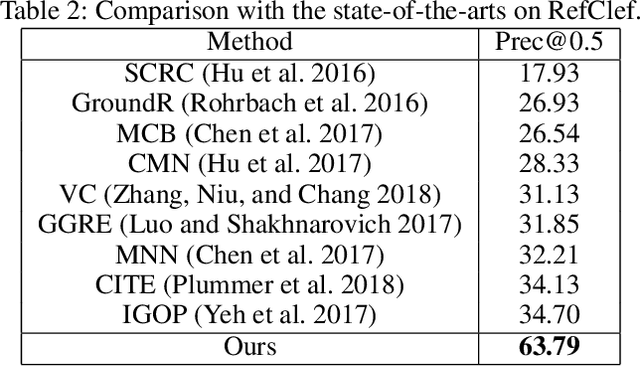

Referring expression comprehension aims to localize the object instance described by a natural language expression. Current referring expression methods achieve strong performance, but none of them reaches real-time inference without a drop in accuracy. Their inference is relatively slow because they artificially split referring expression comprehension into two sequential stages: proposal generation and proposal ranking. This split does not match how humans perceive a described object. To this end, we propose a novel Real-time Cross-modality Correlation Filtering method (RCCF). RCCF reformulates referring expression comprehension as a correlation filtering process. The expression is first mapped from the language domain to the visual domain and then treated as a template (kernel) to perform correlation filtering on the image feature map. The peak value in the correlation heatmap indicates the center point of the target box. In addition, RCCF regresses a 2-D object size and a 2-D offset. The center point coordinates, object size, and center point offset together form the target bounding box. Our method runs at 40 FPS while achieving leading performance on the RefClef, RefCOCO, RefCOCO+, and RefCOCOg benchmarks. On the challenging RefClef dataset, our method almost doubles the state-of-the-art performance (from 34.70% to 63.79%). We hope this work draws more attention and study to the new cross-modality correlation filtering framework, as well as to the one-stage framework for referring expression comprehension.
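The pipeline described above (correlate a language-derived kernel with the image feature map, take the heatmap peak as the center, then combine it with the regressed size and offset) can be sketched in a few lines. This is only an illustrative sketch, not the authors' implementation: the function names `correlation_heatmap` and `decode_box`, the 1x1 kernel shape, the `stride` value, and the convention that sizes are given in input-image pixels are all assumptions made for the example.

```python
def correlation_heatmap(feature_map, kernel):
    """Correlate a per-channel kernel with a feature map.

    feature_map is indexed as feature_map[c][y][x]; kernel is a list with one
    weight per channel (assumed here to be a 1x1 template produced from the
    expression). Returns a heatmap indexed as heatmap[y][x].
    """
    channels = len(kernel)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    return [
        [sum(feature_map[c][y][x] * kernel[c] for c in range(channels))
         for x in range(width)]
        for y in range(height)
    ]


def decode_box(heatmap, size_map, offset_map, stride=4):
    """Turn the heatmap peak plus regressed size/offset into a box.

    size_map[0] / size_map[1] hold width / height per location (assumed to be
    in input-image pixels); offset_map[0] / offset_map[1] hold the x / y
    center offsets in feature-map cells. stride is the assumed downsampling
    factor between the image and the feature map.
    """
    height, width = len(heatmap), len(heatmap[0])
    # The peak of the correlation heatmap is taken as the box center.
    cy, cx = max(
        ((y, x) for y in range(height) for x in range(width)),
        key=lambda p: heatmap[p[0]][p[1]],
    )
    w, h = size_map[0][cy][cx], size_map[1][cy][cx]
    dx, dy = offset_map[0][cy][cx], offset_map[1][cy][cx]
    # Refine the center with the offset, then map back to image coordinates.
    center_x = (cx + dx) * stride
    center_y = (cy + dy) * stride
    return (center_x - w / 2, center_y - h / 2,
            center_x + w / 2, center_y + h / 2)
```

Because the box is read off a single peak rather than ranked among proposals, decoding costs one pass over the heatmap, which is the property the one-stage formulation relies on for speed.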
