A deep residual learning implementation of Metamorphosis

Paper and Code

Feb 01, 2022

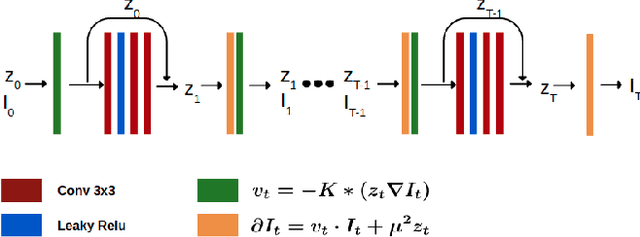

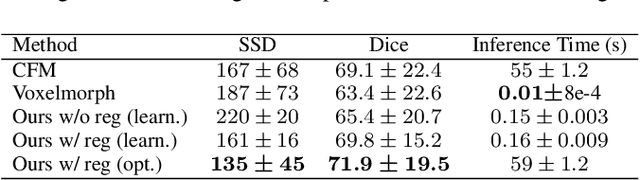

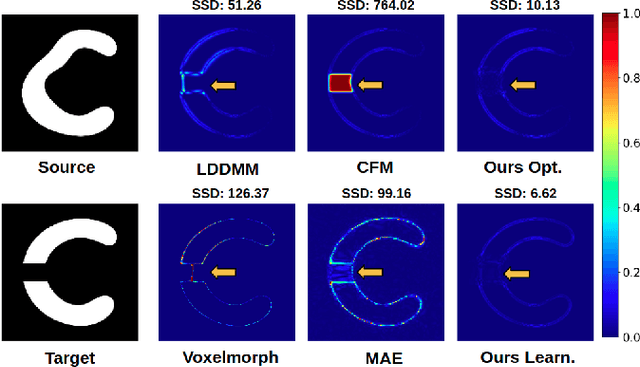

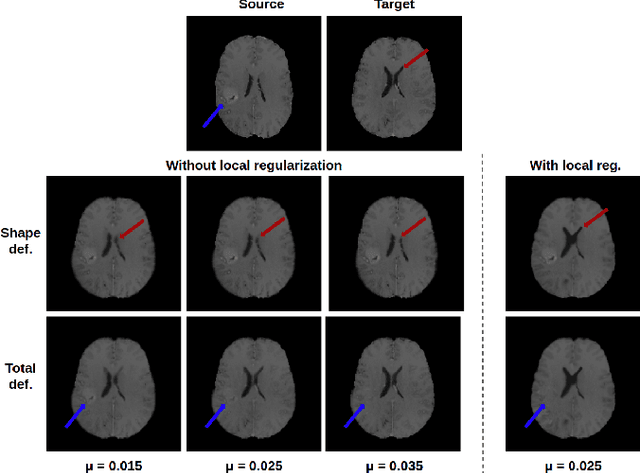

In medical imaging, most of the image registration methods implicitly assume a one-to-one correspondence between the source and target images (i.e., diffeomorphism). However, this is not necessarily the case when dealing with pathological medical images (e.g., presence of a tumor, lesion, etc.). To cope with this issue, the Metamorphosis model has been proposed. It modifies both the shape and the appearance of an image to deal with the geometrical and topological differences. However, the high computational time and load have hampered its applications so far. Here, we propose a deep residual learning implementation of Metamorphosis that drastically reduces the computational time at inference. Furthermore, we also show that the proposed framework can easily integrate prior knowledge of the localization of topological changes (e.g., segmentation masks) that can act as spatial regularization to correctly disentangle appearance and shape changes. We test our method on the BraTS 2021 dataset, showing that it outperforms current state-of-the-art methods in the alignment of images with brain tumors.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge