A Decentralized Reinforcement Learning Framework for Efficient Passage of Emergency Vehicles

Paper and Code

Nov 08, 2021

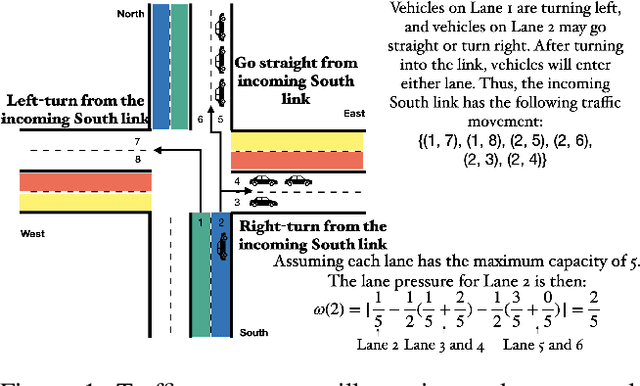

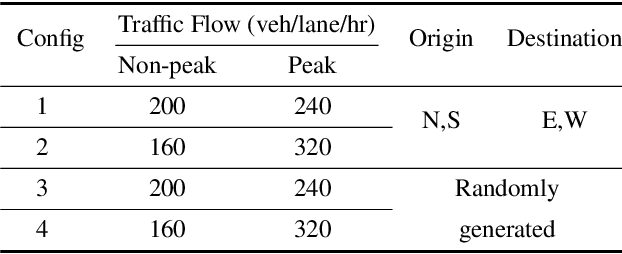

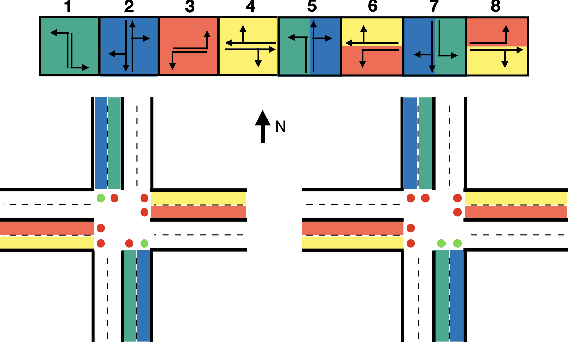

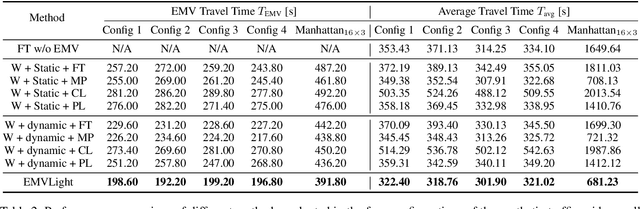

Emergency vehicles (EMVs) play a critical role in a city's response to time-critical events such as medical emergencies and fire outbreaks. Existing approaches to reducing EMV travel time employ route optimization and traffic signal pre-emption without accounting for the coupling between these two subproblems. As a result, the planned route often becomes suboptimal. These approaches also do not focus on minimizing disruption to the overall traffic flow. To address these issues, we introduce EMVLight, a decentralized reinforcement learning (RL) framework for simultaneous dynamic routing and traffic signal control. EMVLight extends Dijkstra's algorithm to efficiently update the optimal route for an EMV in real time as it travels through the traffic network. Consequently, the decentralized RL agents learn network-level cooperative traffic signal phase strategies that reduce both EMV travel time and the average travel time of non-EMVs in the network. We have carried out comprehensive experiments with synthetic and real-world maps to demonstrate this benefit. Our results show that EMVLight outperforms benchmark transportation engineering techniques as well as existing RL-based traffic signal control methods.
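To make the routing idea concrete, here is a minimal sketch of plain Dijkstra run backward from the EMV's destination, so that every intersection gets an estimated time to the destination and a next hop. This is not the EMVLight extension itself, whose details the abstract does not give. The function name `time_to_destination`, the graph layout (a dict of per-edge travel times), and the toy network are all assumptions made for illustration. Re-running the search after an edge's travel time changes is the simplest way to mimic a real-time route update.

```python
import heapq

def time_to_destination(graph, destination):
    """Backward Dijkstra (illustrative, not the paper's algorithm).

    graph: dict mapping node -> {neighbor: travel_time} for directed edges.
    Returns (eta, next_hop): estimated time from each node to the destination
    and the neighbor to drive to next along the fastest route.
    """
    # Reverse the edges so the search can expand outward from the destination.
    reverse = {node: {} for node in graph}
    for u, neighbors in graph.items():
        for v, travel_time in neighbors.items():
            reverse.setdefault(v, {})[u] = travel_time

    eta = {destination: 0.0}
    next_hop = {destination: None}
    heap = [(0.0, destination)]
    while heap:
        dist, v = heapq.heappop(heap)
        if dist > eta.get(v, float("inf")):
            continue  # stale heap entry; a shorter time was already found
        for u, travel_time in reverse.get(v, {}).items():
            candidate = dist + travel_time
            if candidate < eta.get(u, float("inf")):
                eta[u] = candidate
                next_hop[u] = v
                heapq.heappush(heap, (candidate, u))
    return eta, next_hop

# Hypothetical four-intersection network; weights are travel times in seconds.
graph = {
    "A": {"B": 4, "C": 2},
    "B": {"D": 5},
    "C": {"B": 1, "D": 8},
    "D": {},
}
eta, next_hop = time_to_destination(graph, "D")

# Congestion appears on C -> B, so the times are recomputed.
graph["C"]["B"] = 10
eta_updated, next_hop_updated = time_to_destination(graph, "D")
```

In this toy network the fastest route from `A` initially goes through `C`, and it switches to `B` once the `C -> B` link becomes congested. A full recomputation is the simplest choice; an incremental update that touches only the affected intersections would be cheaper on a large network.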
