A Consistency-Based Loss for Deep Odometry Through Uncertainty Propagation

Paper and Code

Jul 01, 2021

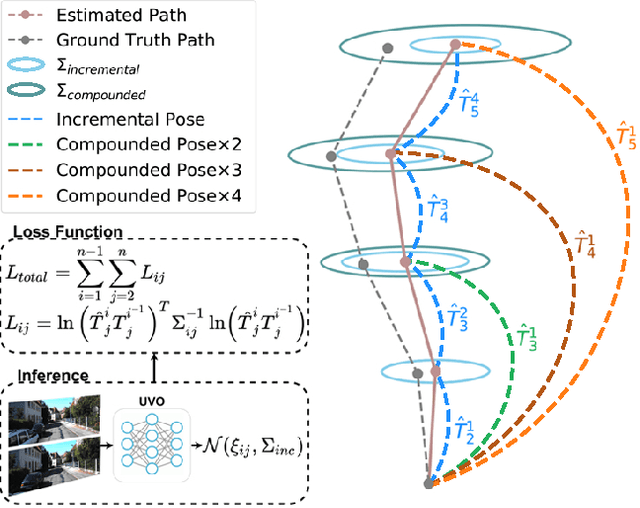

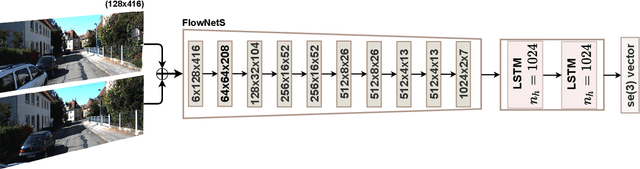

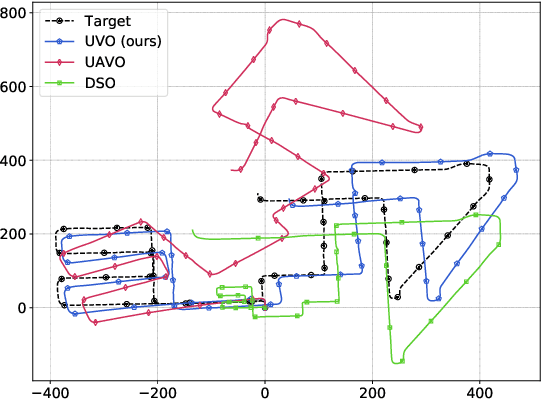

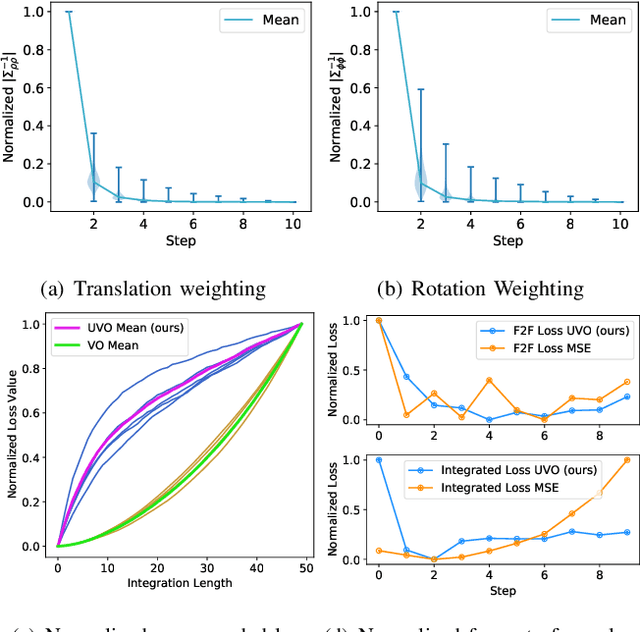

The incremental poses computed through odometry can be integrated over time to calculate the pose of a device with respect to an initial location. The resulting global pose may be used to formulate a second, consistency based, loss term in a deep odometry setting. In such cases where multiple losses are imposed on a network, the uncertainty over each output can be derived to weigh the different loss terms in a maximum likelihood setting. However, when imposing a constraint on the integrated transformation, due to how only odometry is estimated at each iteration of the algorithm, there is no information about the uncertainty associated with the global pose to weigh the global loss term. In this paper, we associate uncertainties with the output poses of a deep odometry network and propagate the uncertainties through each iteration. Our goal is to use the estimated covariance matrix at each incremental step to weigh the loss at the corresponding step while weighting the global loss term using the compounded uncertainty. This formulation provides an adaptive method to weigh the incremental and integrated loss terms against each other, noting the increase in uncertainty as new estimates arrive. We provide quantitative and qualitative analysis of pose estimates and show that our method surpasses the accuracy of the state-of-the-art Visual Odometry approaches. Then, uncertainty estimates are evaluated and comparisons against fixed baselines are provided. Finally, the uncertainty values are used in a realistic example to show the effectiveness of uncertainty quantification for localization.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge