A Benchmark for Automatic Medical Consultation System: Frameworks, Tasks and Datasets

Apr 27, 2022

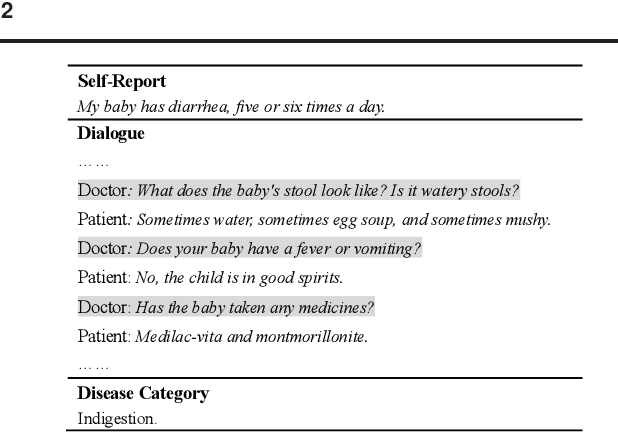

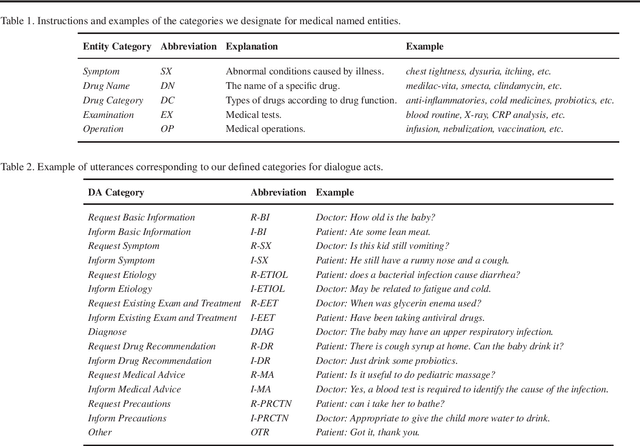

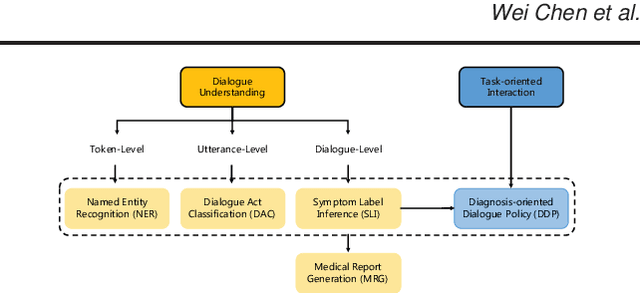

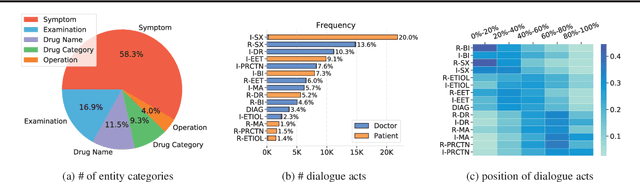

In recent years, interest has arisen in using machine learning to improve the efficiency of automatic medical consultation and enhance patient experience. In this paper, we propose two frameworks to support automatic medical consultation, namely doctor-patient dialogue understanding and task-oriented interaction. A new large medical dialogue dataset with multi-level fine-grained annotations is introduced and five independent tasks are established, including named entity recognition, dialogue act classification, symptom label inference, medical report generation and diagnosis-oriented dialogue policy. We report a set of benchmark results for each task, which shows the usability of the dataset and sets a baseline for future studies.

* 8 pages, 3 figures, 10 tables
