Zuolin Tu

NeoRL-2: Near Real-World Benchmarks for Offline Reinforcement Learning with Extended Realistic Scenarios

Mar 25, 2025

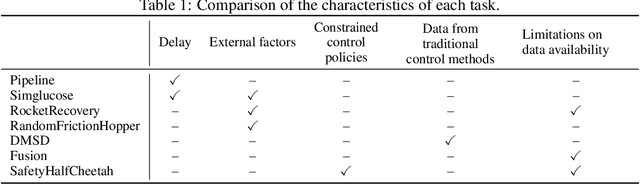

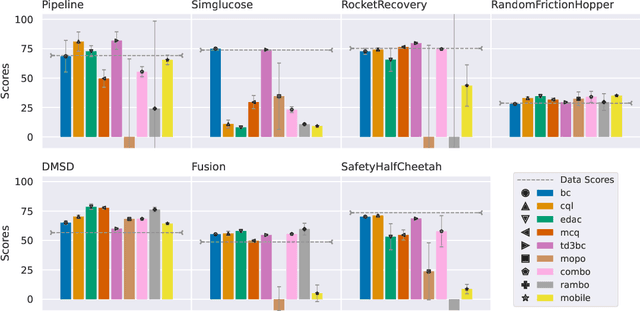

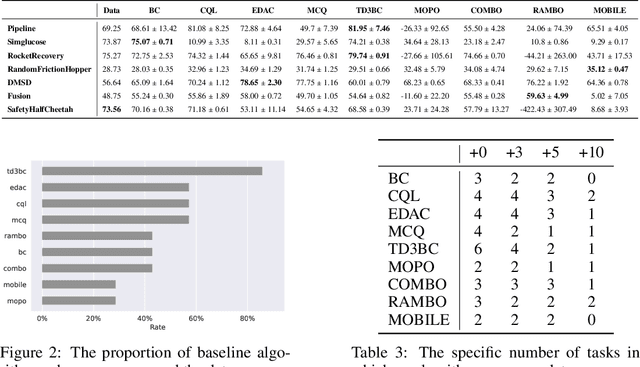

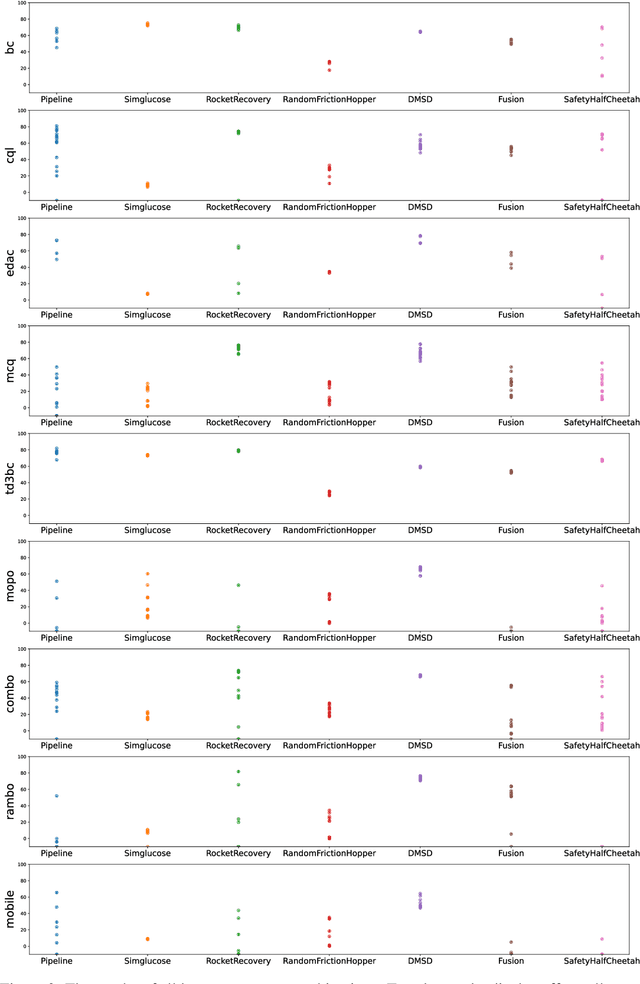

Abstract: Offline reinforcement learning (RL) aims to learn from historical data without requiring (costly) access to the environment. To facilitate offline RL research, we previously introduced NeoRL, which highlighted that datasets from real-world tasks are often conservative and limited. With years of experience applying offline RL to various domains, we have identified additional real-world challenges. These include extremely conservative data distributions produced by deployed control systems, delayed action effects caused by high-latency transitions, external factors arising from the uncontrollable variance of transitions, and global safety constraints that are difficult to evaluate during the decision-making process. These challenges are underrepresented in previous benchmarks but frequently occur in real-world tasks. To address this, we constructed the extended Near Real-World Offline RL Benchmark (NeoRL-2), which consists of 7 datasets from 7 simulated tasks along with their corresponding evaluation simulators. Benchmarking results from state-of-the-art offline RL approaches demonstrate that current methods often struggle to outperform the data-collection behavior policy, highlighting the need for more effective methods. We hope NeoRL-2 will accelerate the development of reinforcement learning algorithms for real-world applications. The benchmark project page is available at https://github.com/polixir/NeoRL2.

Efficient Recurrent Off-Policy RL Requires a Context-Encoder-Specific Learning Rate

May 24, 2024

Abstract: Real-world decision-making tasks are usually partially observable Markov decision processes (POMDPs), where the state is not fully observable. Recent progress has demonstrated that recurrent reinforcement learning (RL), which consists of a context encoder based on recurrent neural networks (RNNs) for unobservable state prediction and a multilayer perceptron (MLP) policy for decision making, can mitigate partial observability and serve as a robust baseline for POMDP tasks. However, previous recurrent RL methods face training stability issues due to the gradient instability of RNNs. In this paper, we propose Recurrent Off-policy RL with Context-Encoder-Specific Learning Rate (RESeL) to tackle this issue. Specifically, RESeL uses a lower learning rate for the context encoder than for the other MLP layers to ensure the stability of the former while maintaining the training efficiency of the latter. We integrate this technique into existing off-policy RL methods, resulting in the RESeL algorithm. We evaluated RESeL in 18 POMDP tasks, including classic, meta-RL, and credit assignment scenarios, as well as five MDP locomotion tasks. The experiments demonstrate significant improvements in training stability with RESeL. Comparative results show that RESeL achieves notable performance improvements over previous recurrent RL baselines in POMDP tasks, and is competitive with or even surpasses state-of-the-art methods in MDP tasks. Further ablation studies highlight the necessity of applying a distinct learning rate for the context encoder.
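
The core idea in the abstract above, a smaller learning rate for the context encoder than for the MLP layers, can be illustrated with a minimal sketch. This is not the RESeL implementation: it uses plain SGD on toy scalars, and the group names, learning rates, and gradient values are all assumptions chosen for illustration. In a deep learning framework the same effect is usually obtained by giving the optimizer separate parameter groups with their own learning rates.

```python
# Hypothetical illustration of a context-encoder-specific learning rate.
# Plain SGD on toy scalars; names and values are assumed, not from the paper.

def sgd_step(params, grads, lrs):
    """Return updated parameters; each group is scaled by its own learning rate."""
    return {
        group: [p - lrs[group] * g for p, g in zip(params[group], grads[group])]
        for group in params
    }

# Assumed learning rates: the encoder's is much smaller than the MLP's.
lrs = {"context_encoder": 1e-5, "mlp": 3e-4}

# Toy parameters and gradients of equal magnitude for both groups.
params = {"context_encoder": [1.0, -2.0], "mlp": [0.5]}
grads = {"context_encoder": [10.0, 10.0], "mlp": [10.0]}

updated = sgd_step(params, grads, lrs)

# Size of the update applied to the first parameter of each group.
encoder_step = abs(updated["context_encoder"][0] - params["context_encoder"][0])
mlp_step = abs(updated["mlp"][0] - params["mlp"][0])
```

With identical gradients, the encoder parameters move by a factor of 30 less than the MLP parameters here, which is the stabilizing effect the abstract attributes to the lower encoder learning rate.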
