Zipeng Ji

SA-GNAS: Seed Architecture Expansion for Efficient Large-scale Graph Neural Architecture Search

Dec 03, 2024

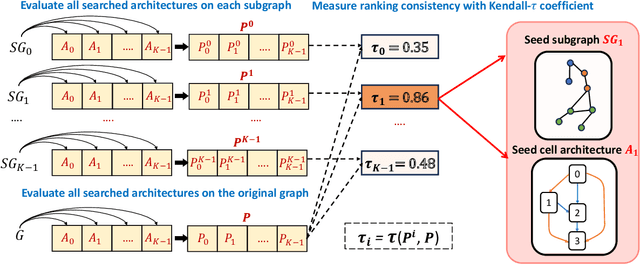

Abstract: Graph Neural Architecture Search (GNAS) has proven effective at automatically designing optimal graph neural architectures for multiple downstream tasks, such as node classification and link prediction. However, most existing GNAS methods cannot efficiently handle large-scale graphs with millions of nodes and edges because of the high computational and memory overhead. To scale GNAS to large graphs while achieving better performance, we propose SA-GNAS, a novel framework based on seed architecture expansion for efficient large-scale GNAS. By analogy with cell expansion in biotechnology, we first construct a seed architecture and then expand it iteratively. Specifically, we first propose a seed architecture selection method based on performance ranking consistency, which selects the architecture searched on the subgraph that best matches the original large-scale graph. We then propose a seed architecture expansion method based on entropy minimization to further improve the performance of the seed architecture. Extensive experimental results on five large-scale graphs demonstrate that SA-GNAS outperforms human-designed state-of-the-art GNN architectures and existing graph NAS methods. Moreover, SA-GNAS significantly reduces search time. For the largest graph, with billion-scale edges, SA-GNAS achieves a 2.8 times speedup over GAUSS, the state-of-the-art large-scale GNAS method. Additionally, since SA-GNAS is inherently parallel, search efficiency can be further improved with more GPUs. SA-GNAS is available at https://github.com/PasaLab/SAGNAS.
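The idea of selecting a subgraph by performance ranking consistency can be illustrated with a small sketch. The abstract does not state which consistency metric SA-GNAS uses, so this example assumes Kendall's tau as a stand-in. The function names, the subgraph names, and the accuracy values are all hypothetical and are not taken from the paper or its repository.

```python
from itertools import combinations


def kendall_tau(scores_a, scores_b):
    """Kendall's tau-a between two equal-length lists of scores.

    Assumption: tau is used here only as an illustrative ranking
    consistency measure; the paper's actual metric may differ.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("score lists must have the same length")
    n = len(scores_a)
    if n < 2:
        raise ValueError("need at least two architectures to rank")
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        sign = (scores_a[i] - scores_a[j]) * (scores_b[i] - scores_b[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


def pick_most_consistent_subgraph(reference_scores, subgraph_scores):
    """Return the subgraph whose architecture ranking best matches the reference.

    reference_scores: scores of a fixed set of architectures on the
        reference (hypothetically, the full graph or a proxy for it).
    subgraph_scores: dict mapping a subgraph name to the scores of the
        same architectures, in the same order, on that subgraph.
    """
    return max(
        subgraph_scores,
        key=lambda name: kendall_tau(reference_scores, subgraph_scores[name]),
    )


# Hypothetical accuracies of four candidate architectures.
reference = [0.70, 0.72, 0.68, 0.75]
candidates = {
    "sub_a": [0.60, 0.63, 0.58, 0.66],  # same ordering as the reference
    "sub_b": [0.66, 0.60, 0.63, 0.58],  # mostly reversed ordering
}
best = pick_most_consistent_subgraph(reference, candidates)
```

Here `sub_a` ranks the four architectures in the same order as the reference, so it would be chosen as the subgraph on which to search for the seed architecture.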
