Zhenyu Tao

A Theoretical Analysis of State Similarity Between Markov Decision Processes

Dec 19, 2025

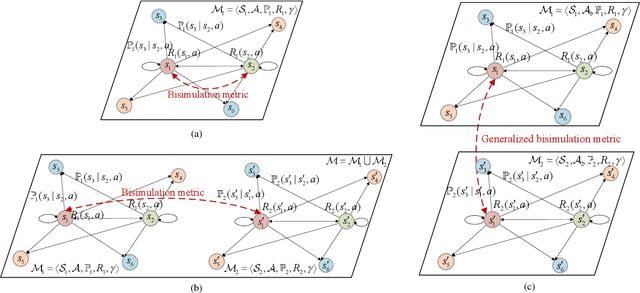

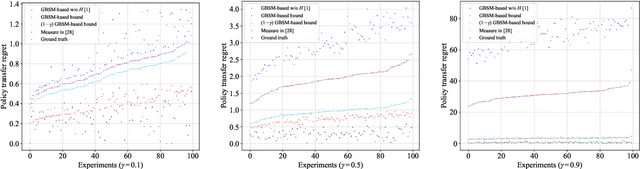

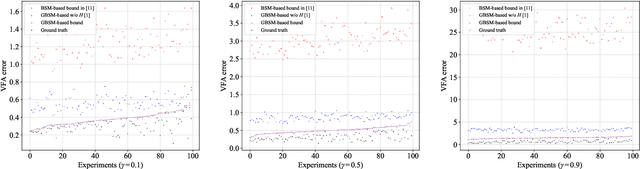

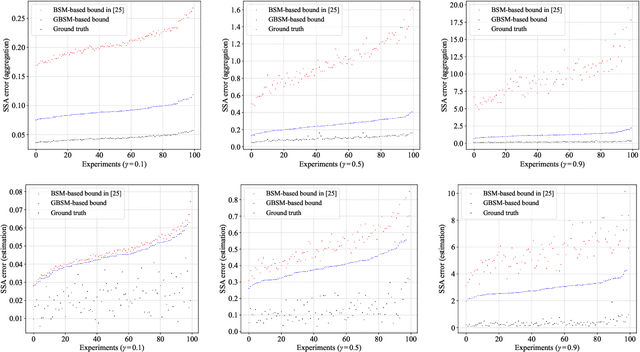

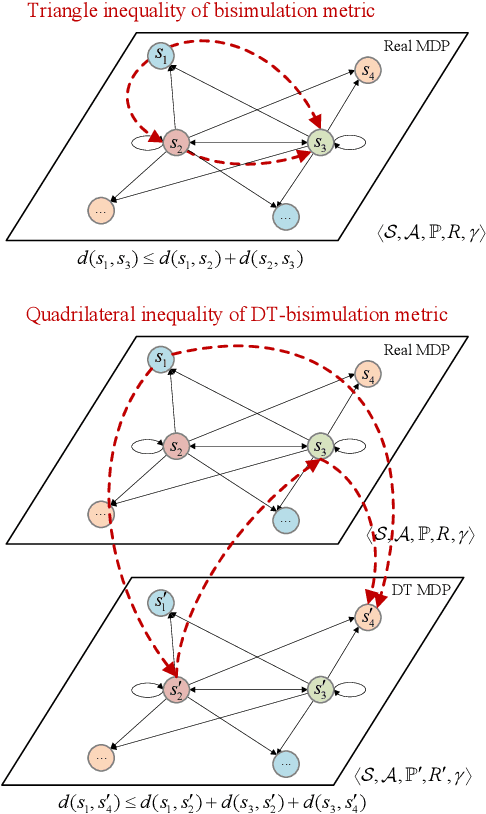

Abstract: The bisimulation metric (BSM) is a powerful tool for analyzing state similarities within a Markov decision process (MDP), revealing that states closer in BSM have more similar optimal value functions. While BSM has been successfully utilized in reinforcement learning (RL) for tasks like state representation learning and policy exploration, its application to state similarity between multiple MDPs remains challenging. Prior work has attempted to extend BSM to pairs of MDPs, but a lack of well-established mathematical properties has limited further theoretical analysis between MDPs. In this work, we formally establish a generalized bisimulation metric (GBSM) for measuring state similarity between arbitrary pairs of MDPs, for which we rigorously prove three fundamental metric properties, i.e., GBSM symmetry, inter-MDP triangle inequality, and a distance bound on identical spaces. Leveraging these properties, we theoretically analyze policy transfer, state aggregation, and sampling-based estimation across MDPs, obtaining explicit bounds that are strictly tighter than existing ones derived from the standard BSM. Additionally, GBSM provides a closed-form sample complexity for estimation, improving upon existing asymptotic results based on BSM. Numerical results validate our theoretical findings and demonstrate the effectiveness of GBSM in multi-MDP scenarios.
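To give a feel for what a bisimulation-style distance between two MDPs computes, here is a minimal sketch. It is not the paper's GBSM, whose definition the abstract does not state. It assumes deterministic transitions (so the Wasserstein term collapses to the distance between the two next states), a shared action set, and one common form of the BSM recursion, reward gap plus discounted next-state distance. The function name and dictionary layout are hypothetical.

```python
def cross_mdp_bisim_distance(mdp_a, mdp_b, gamma=0.9, tol=1e-9, max_iters=10_000):
    """Fixed-point iteration for a bisimulation-style distance between two MDPs.

    Assumptions (illustrative only): each MDP is a dict with
      "reward": {(state, action): float}
      "next":   {(state, action): next_state}   # deterministic transitions
    Both MDPs share the same actions, and every state defines every action.
    """
    states_a = sorted({s for s, _ in mdp_a["next"]})
    states_b = sorted({s for s, _ in mdp_b["next"]})
    actions = sorted({a for _, a in mdp_a["next"]})

    # Start from the all-zero distance and apply the recursion until it settles.
    d = {(s, t): 0.0 for s in states_a for t in states_b}
    for _ in range(max_iters):
        new_d = {}
        for s in states_a:
            for t in states_b:
                new_d[(s, t)] = max(
                    abs(mdp_a["reward"][(s, a)] - mdp_b["reward"][(t, a)])
                    + gamma * d[(mdp_a["next"][(s, a)], mdp_b["next"][(t, a)])]
                    for a in actions
                )
        delta = max(abs(new_d[k] - d[k]) for k in d)
        d = new_d
        if delta < tol:
            break
    return d


# Two one-state MDPs that differ only in reward (1.0 versus 0.5 per step).
mdp_a = {"reward": {("x", "go"): 1.0}, "next": {("x", "go"): "x"}}
mdp_b = {"reward": {("y", "go"): 0.5}, "next": {("y", "go"): "y"}}
distance = cross_mdp_bisim_distance(mdp_a, mdp_b, gamma=0.9)
```

In this toy case the optimal values are 1.0 / (1 - 0.9) = 10 and 0.5 / (1 - 0.9) = 5, and the computed distance between `x` and `y` approaches 5, matching the value gap that a bisimulation-style distance is meant to bound.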

Provable Performance Bounds for Digital Twin-driven Deep Reinforcement Learning in Wireless Networks: A Novel Digital-Twin Bisimulation Metric

Feb 25, 2025

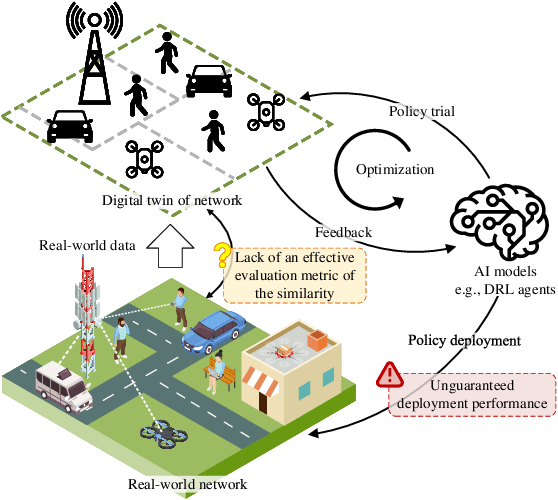

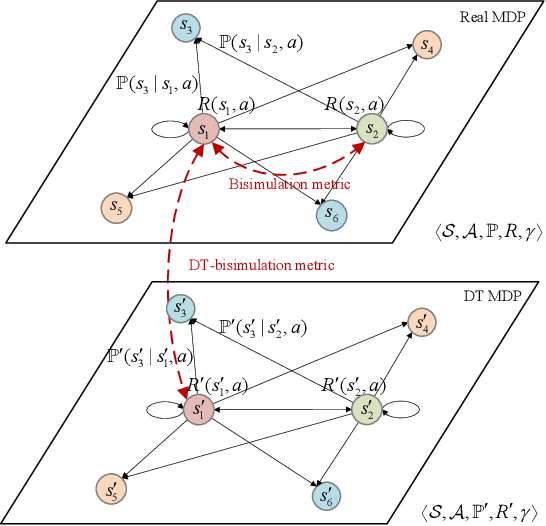

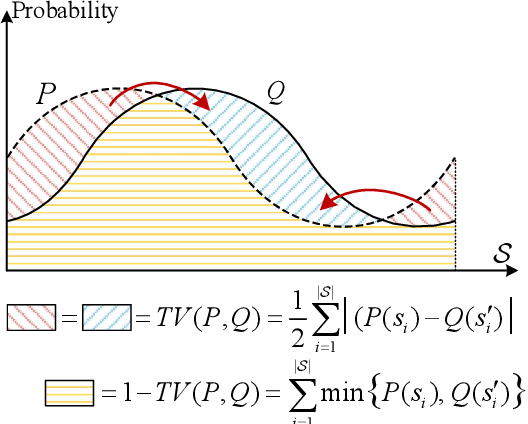

Abstract: Digital twin (DT)-driven deep reinforcement learning (DRL) has emerged as a promising paradigm for wireless network optimization, offering a safe and efficient training environment for policy exploration. However, in theory existing methods cannot always guarantee real-world performance of DT-trained policies before actual deployment, due to the absence of a universal metric for assessing the DT's ability to support reliable DRL training transferable to physical networks. In this paper, we propose the DT bisimulation metric (DT-BSM), a novel metric based on the Wasserstein distance, to quantify the discrepancy between Markov decision processes (MDPs) in both the DT and the corresponding real-world wireless network environment. We prove that for any DT-trained policy, the sub-optimality of its performance (regret) in the real-world deployment is bounded by a weighted sum of the DT-BSM and its sub-optimality within the MDP in the DT. Then, a modified DT-BSM based on the total variation distance is also introduced to avoid the prohibitive calculation complexity of the Wasserstein distance for large-scale wireless network scenarios. Further, to tackle the challenge of obtaining accurate transition probabilities of the MDP in the real world for the DT-BSM calculation, we propose an empirical DT-BSM method based on statistical sampling. We prove that the empirical DT-BSM always converges to the desired theoretical one, and quantitatively establish the relationship between the required sample size and the target level of approximation accuracy. Numerical experiments validate this first theoretical finding on the provable and calculable performance bounds for DT-driven DRL.
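The two building blocks mentioned above, total variation distance and sample-based estimation of a transition distribution, can be sketched as follows. This is only an illustration of those ingredients, not the DT-BSM itself, which the abstract does not define. The function names and the example distributions are hypothetical.

```python
from collections import Counter


def total_variation(p, q):
    """Total variation distance between two discrete distributions.

    p and q map outcomes to probabilities; missing outcomes count as 0.
    """
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(x, 0.0) - q.get(x, 0.0)) for x in support)


def empirical_distribution(samples):
    """Relative frequencies of the observed outcomes."""
    counts = Counter(samples)
    total = len(samples)
    return {x: c / total for x, c in counts.items()}


# Hypothetical example: next-state distribution assumed by the DT for one
# (state, action) pair, compared with next states observed in the real network.
dt_model = {"s1": 0.5, "s2": 0.5}
observed_next_states = ["s1", "s1", "s1", "s2"]
real_estimate = empirical_distribution(observed_next_states)
gap = total_variation(dt_model, real_estimate)
```

Unlike the Wasserstein distance, this quantity needs no optimal-transport solve: it is a single pass over the support, which is the kind of saving the abstract points to for large-scale scenarios.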

Parallel Digital Twin-driven Deep Reinforcement Learning for User Association and Load Balancing in Dynamic Wireless Networks

Oct 10, 2024

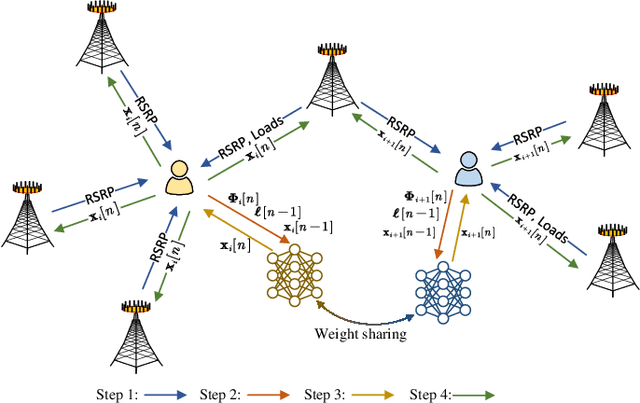

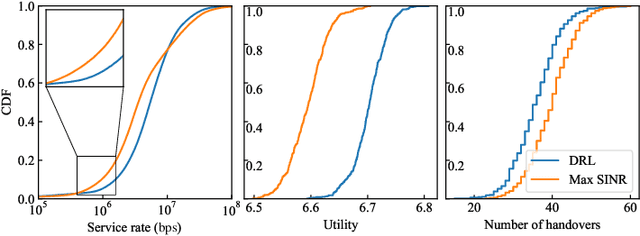

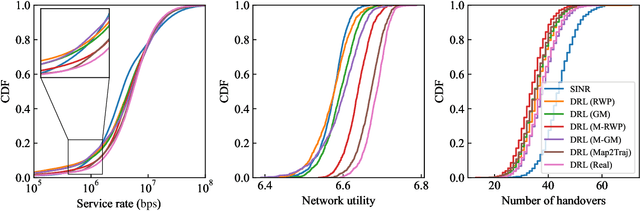

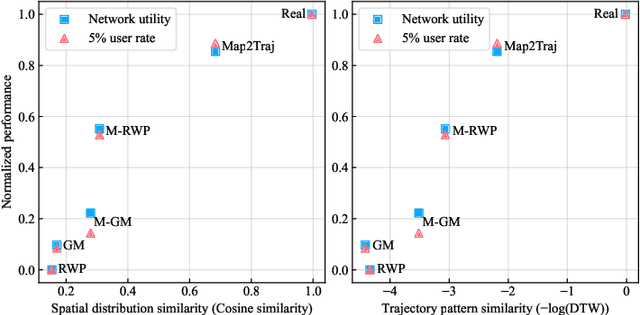

Abstract: Optimization of user association in a densely deployed heterogeneous cellular network is usually challenging and even more complicated due to the dynamic nature of user mobility and fluctuation in user counts. While deep reinforcement learning (DRL) emerges as a promising solution, its application in practice is hindered by high trial-and-error costs in the real world and unsatisfactory physical network performance during training. In addition, existing DRL-based user association methods are usually only applicable to scenarios with a fixed number of users due to convergence and compatibility challenges. In this paper, we propose a parallel digital twin (DT)-driven DRL method for user association and load balancing in networks with dynamic user counts, distributions, and mobility patterns. Our method employs a distributed DRL strategy to handle varying user numbers and exploits a refined neural network structure for faster convergence. To address these DRL training-related challenges, we devise a high-fidelity DT construction technique, featuring a zero-shot generative user mobility model, named Map2Traj, based on a diffusion model. Map2Traj estimates user trajectory patterns and spatial distributions solely from street maps. Armed with this DT environment, DRL agents can be trained without interacting with the physical network. To enhance the generalization ability of DRL models for dynamic scenarios, a parallel DT framework is further established to alleviate strong correlation and non-stationarity in single-environment training and improve the training efficiency. Numerical results show that the proposed parallel DT-driven DRL method achieves closely comparable performance to real environment training, and even outperforms those trained in a single real-world environment with nearly 20% gain in terms of cell-edge user performance.

Map2Traj: Street Map Piloted Zero-shot Trajectory Generation with Diffusion Model

Jul 29, 2024

Abstract: User mobility modeling plays a crucial role in the analysis and optimization of contemporary wireless networks. Typical stochastic mobility models, e.g., the random waypoint model and the Gauss-Markov model, can hardly capture the distribution characteristics of users within real-world areas. State-of-the-art trace-based mobility models and existing learning-based trajectory generation methods, however, are frequently constrained by the inaccessibility of substantial real trajectories due to privacy concerns. In this paper, we harness the intrinsic correlation between street maps and trajectories and develop a novel zero-shot trajectory generation method, named Map2Traj, by exploiting the diffusion model. We incorporate street maps as a condition to consistently pilot the denoising process and train our model on diverse sets of real trajectories from various regions in Xi'an, China, and their corresponding street maps. With solely the street map of an unobserved area, Map2Traj generates synthetic trajectories that not only closely resemble the real-world mobility pattern but also offer comparable efficacy. Extensive experiments validate the efficacy of our proposed method on zero-shot trajectory generation tasks in terms of both trajectory and distribution similarities. In addition, a case study of employing Map2Traj in wireless network optimization is presented to validate its efficacy for downstream applications.

Wireless Network Digital Twin for 6G: Generative AI as A Key Enabler

Nov 29, 2023

Abstract: Digital twin, which enables emulation, evaluation, and optimization of physical entities through synchronized digital replicas, has gained increasing attention as a promising technology for intricate wireless networks. For 6G, numerous innovative wireless technologies and network architectures have posed new challenges in establishing wireless network digital twins. To tackle these challenges, artificial intelligence (AI), particularly the flourishing generative AI, emerges as a potential solution. In this article, we discuss emerging prerequisites for wireless network digital twins considering the complicated network architecture, tremendous network scale, extensive coverage, and diversified application scenarios in the 6G era. We further explore the applications of generative AI, such as transformers and diffusion models, to empower the 6G digital twin from multiple perspectives including implementation, physical-digital synchronization, and slicing capability. Subsequently, we propose a hierarchical generative AI-enabled wireless network digital twin at both the message-level and policy-level, and provide a typical use case with numerical results to validate the effectiveness and efficiency. Finally, open research issues for wireless network digital twins in the 6G era are discussed.

Digital Twin Assisted Deep Reinforcement Learning for Online Optimization of Network Slicing Admission Control

Oct 07, 2023

Abstract: The proliferation of diverse network services in 5G and beyond networks has led to the emergence of network slicing technologies. Among these, admission control plays a crucial role in achieving specific optimization goals through the selective acceptance of service requests. Although Deep Reinforcement Learning (DRL) forms the foundation of many admission control approaches for its effectiveness and flexibility, the initial instability of DRL models hinders their practical deployment in real-world networks. In this work, we propose a digital twin (DT) assisted DRL solution to address this issue. Specifically, we first formulate the admission decision-making process as a semi-Markov decision process, which is subsequently simplified into an equivalent discrete-time Markov decision process to facilitate the implementation of DRL methods. The DT is established through supervised learning and employed to assist the training phase of the DRL model. Extensive simulations show that the DT-assisted DRL model increased resource utilization by over 40% compared to the directly trained state-of-the-art Dueling-DQN and over 20% compared to our directly trained DRL model during initial training. This improvement is achieved while preserving the model's capacity to optimize the long-term rewards.