Zhaoxiang Zang

Training Auto-encoders Effectively via Eliminating Task-irrelevant Input Variables

May 31, 2016

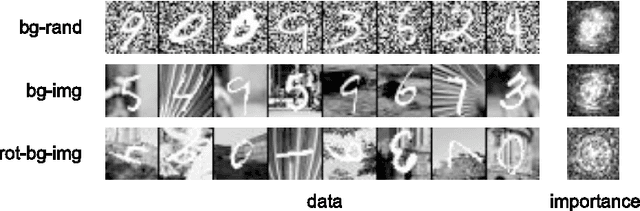

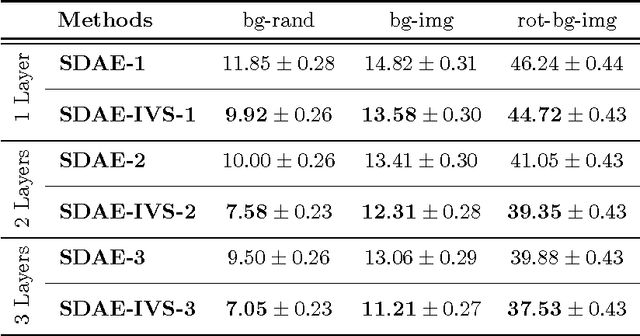

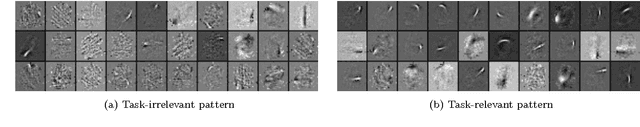

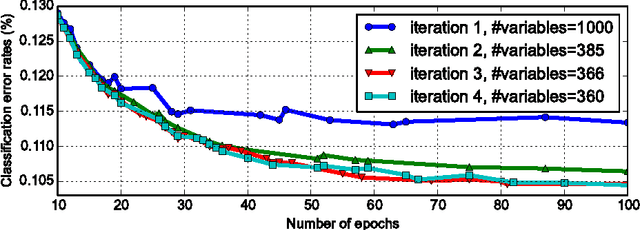

Abstract: Auto-encoders are often used as building blocks of deep network classifiers to learn feature extractors, but task-irrelevant information in the input data may lead to bad extractors and result in poor generalization performance of the network. In this paper, we show that dropping the task-irrelevant input variables can noticeably improve the performance of auto-encoders. Specifically, an importance-based variable selection method is proposed to find the task-irrelevant input variables and drop them. It first estimates the importance of each variable, and then drops the variables whose importance value is lower than a threshold. To obtain better performance, the method can be applied to each layer of stacked auto-encoders. Experimental results show that, when combined with our method, stacked denoising auto-encoders achieve significantly improved performance on three challenging datasets.
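The thresholding step described in this abstract can be illustrated with a minimal sketch. The abstract does not say how importance is estimated, so the scores here are simply taken as given; the function names `select_variables` and `drop_variables` are hypothetical and not from the paper.

```python
from typing import List, Sequence


def select_variables(importance: Sequence[float], threshold: float) -> List[int]:
    """Return indices of variables whose importance is NOT below the threshold.

    Assumption: `importance` holds one precomputed score per input variable;
    how the paper computes these scores is not stated in the abstract.
    """
    return [i for i, score in enumerate(importance) if score >= threshold]


def drop_variables(rows: Sequence[Sequence[float]], kept: Sequence[int]) -> List[List[float]]:
    """Project every sample (row) onto the kept variable indices."""
    return [[row[i] for i in kept] for row in rows]


if __name__ == "__main__":
    scores = [0.9, 0.1, 0.5, 0.05]        # illustrative values only
    kept = select_variables(scores, threshold=0.2)
    data = [[1, 2, 3, 4], [5, 6, 7, 8]]
    print(kept, drop_variables(data, kept))
```

For stacked auto-encoders, the same two calls could in principle be repeated on the inputs to each layer, which is how the abstract describes the method being applied layer by layer.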

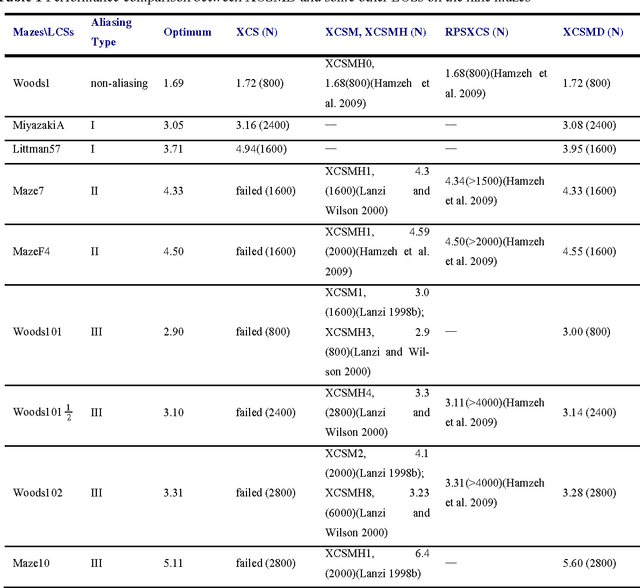

Tournament selection in zeroth-level classifier systems based on average reward reinforcement learning

Apr 26, 2016

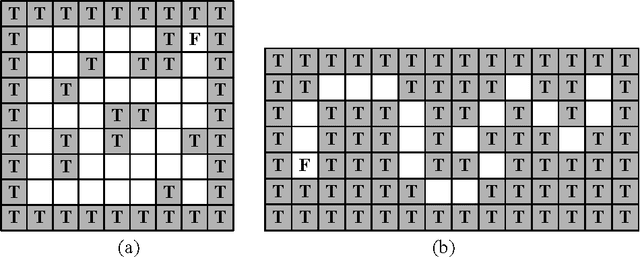

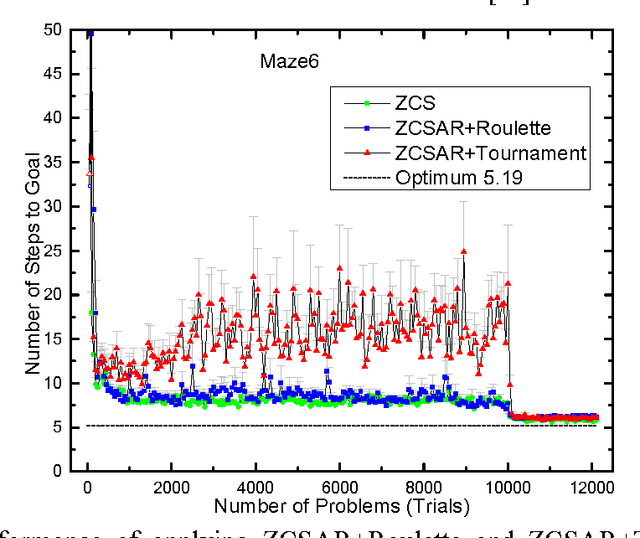

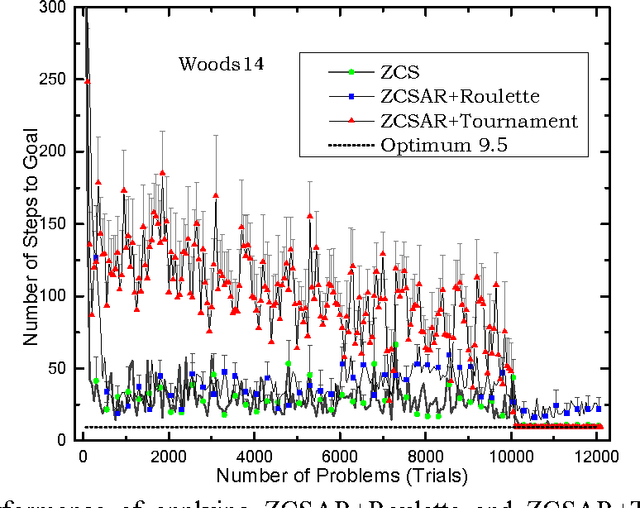

Abstract: As a genetics-based machine learning technique, the zeroth-level classifier system (ZCS) is based on a discounted-reward reinforcement learning algorithm, the bucket-brigade algorithm, which optimizes the discounted total reward received by an agent but is not suitable for all multi-step problems, especially large ones. Undiscounted reinforcement learning methods, such as R-learning, are available and optimize the average reward per time step. In this paper, R-learning replaces the discounted-reward reinforcement learning approach in ZCS, and tournament selection replaces roulette wheel selection. The modification results in classifier systems that can support long action chains and are thus able to solve large multi-step problems.
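As a rough illustration of the two ingredients named in this abstract, the sketch below shows one common tabular form of the R-learning update and a plain tournament selection. It is not the paper's classifier-based formulation, which the abstract does not spell out. The function names, the step sizes `beta` and `alpha`, and the tournament size `k` are assumptions made for the example.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, Sequence, Tuple


def r_learning_step(R: Dict[Tuple[Hashable, Hashable], float], rho: float,
                    s, a, r: float, s_next, actions: Sequence,
                    beta: float = 0.1, alpha: float = 0.01) -> float:
    """Apply one tabular R-learning update in place and return the new rho.

    R maps (state, action) to a relative value; rho estimates the average
    reward per time step. No discount factor appears anywhere.
    """
    max_next = max(R[(s_next, b)] for b in actions)
    R[(s, a)] += beta * (r - rho + max_next - R[(s, a)])
    max_here = max(R[(s, b)] for b in actions)
    # Assumed variant: rho is adjusted only when the taken action is greedy.
    if R[(s, a)] == max_here:
        max_next = max(R[(s_next, b)] for b in actions)
        rho += alpha * (r - rho + max_next - max_here)
    return rho


def tournament_select(population: Sequence, fitness: Sequence[float],
                      k: int, rng: random.Random):
    """Draw k distinct individuals at random and return the fittest of them."""
    contestants = rng.sample(range(len(population)), k)
    winner = max(contestants, key=lambda i: fitness[i])
    return population[winner]


if __name__ == "__main__":
    R = defaultdict(float)
    rho = r_learning_step(R, 0.0, s=0, a=0, r=1.0, s_next=1,
                          actions=[0, 1], beta=0.5, alpha=0.1)
    print(R[(0, 0)], rho)
    print(tournament_select(["a", "b", "c"], [1.0, 5.0, 2.0], 2, random.Random(0)))
```

Unlike roulette wheel selection, the tournament depends only on the ranking of fitness values among the sampled contestants, not on their absolute magnitudes.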

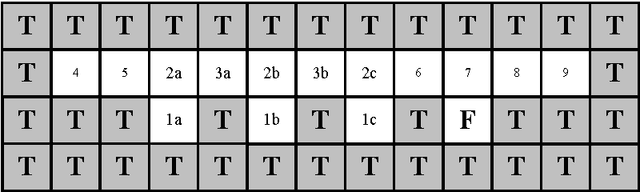

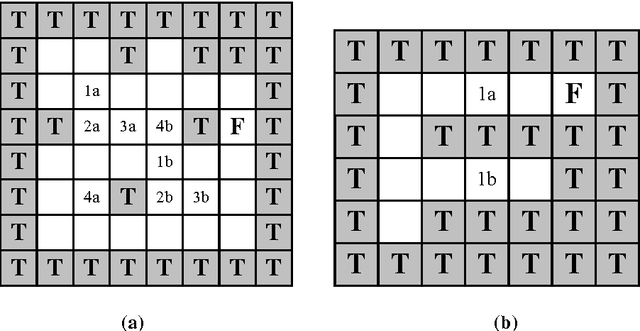

Learning classifier systems with memory condition to solve non-Markov problems

Nov 02, 2012

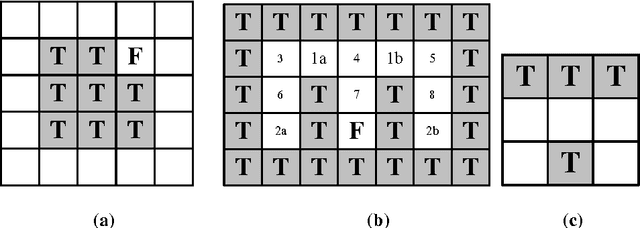

Abstract: In the family of Learning Classifier Systems, the classifier system XCS has been successfully used in many applications. However, the standard XCS has no memory mechanism and can only learn an optimal policy in Markov environments, where the optimal action is determined solely by the current sensory input. In practice, most environments are only partially observable through the agent's sensors; these are also known as non-Markov environments. In such environments, XCS either fails or develops only a suboptimal policy, since it has no memory. In this work, we develop a new classifier system based on XCS to tackle this problem. It adds an internal message list to XCS as a memory list that records the history of input sensations, and extends a small number of classifiers with memory conditions. A classifier's memory condition, which serves as a foothold for disambiguating non-Markov states, senses a specified element in the memory list. In addition, a detection method is employed to recognize non-Markov states in the environment, to prevent these states from controlling classifiers' memory conditions. The proposed method has been tested on four sets of different complex maze environments. Experimental results show that our system is one of the best techniques for solving problems in partially observable environments, compared with some well-known classifier systems proposed for these environments.
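The memory list and memory condition described in this abstract might be sketched as follows. This is only an illustrative reading: the class `MemoryList`, the function `classifier_matches`, the fixed capacity, and the convention that index 0 is the most recent past sensation are all assumptions, and the `#` wildcard is borrowed from the usual ternary conditions of XCS rather than confirmed by the abstract.

```python
from collections import deque
from typing import Optional


def matches(condition: str, sensation: str) -> bool:
    """Ternary match: '#' in the condition accepts any symbol at that position."""
    return len(condition) == len(sensation) and all(
        c == "#" or c == s for c, s in zip(condition, sensation)
    )


class MemoryList:
    """Bounded history of past sensations; index 0 is the most recent one."""

    def __init__(self, capacity: int) -> None:
        self._items = deque(maxlen=capacity)

    def record(self, sensation: str) -> None:
        # appendleft on a full deque discards the oldest entry on the right
        self._items.appendleft(sensation)

    def get(self, index: int) -> Optional[str]:
        return self._items[index] if 0 <= index < len(self._items) else None


def classifier_matches(condition: str, memory_index: int,
                       memory_condition: Optional[str],
                       current: str, memory: MemoryList) -> bool:
    """Match the current input and, if present, one specified memory element."""
    if not matches(condition, current):
        return False
    if memory_condition is None:      # ordinary classifier without memory
        return True
    past = memory.get(memory_index)
    return past is not None and matches(memory_condition, past)


if __name__ == "__main__":
    mem = MemoryList(capacity=2)
    mem.record("01")
    mem.record("10")
    print(classifier_matches("1#", 0, "#0", "11", mem))
```

Two states that produce the same current sensation can then be told apart whenever the sensations recorded before them differ at the inspected memory position.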
